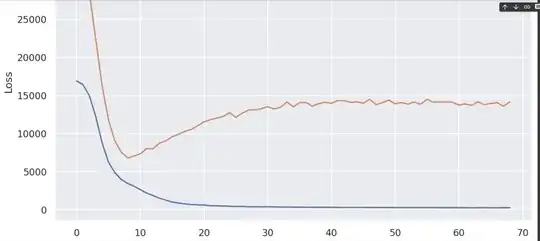

I've created an LSTM model to predict 1 output value from 8 features. My loss constantly decreases and my val loss also decreases from the start, however it begins to increase after so many epochs. Here's a picture of what's going on.
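The turning point in the validation curve is simply the epoch where `val_loss` reaches its minimum. As a minimal sketch, assuming the `history.history` dict returned by Keras's `model.fit` (keys `loss` and `val_loss`), the hypothetical helpers below locate that epoch and the per-epoch gap between validation and training loss. The numbers in `example` are made up for illustration and are not the curves from the plot.

```python
def best_epoch(history_dict, key="val_loss"):
    """Return (1-based epoch, value) at which `key` reaches its minimum."""
    values = history_dict[key]
    idx = min(range(len(values)), key=values.__getitem__)
    return idx + 1, values[idx]


def divergence_gap(history_dict):
    """Per-epoch val_loss - loss; a gap that keeps growing after the best epoch suggests overfitting."""
    return [v - t for t, v in zip(history_dict["loss"], history_dict["val_loss"])]


# Made-up loss values for illustration only
example = {
    "loss":     [1.00, 0.80, 0.60, 0.50, 0.40, 0.30],
    "val_loss": [1.10, 0.90, 0.70, 0.75, 0.85, 0.95],
}

print(best_epoch(example))  # (3, 0.7)
print(divergence_gap(example))
```

In the actual script this would be called as `best_epoch(history.history)` after training.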

Here is my code:

```python
import numpy as np
import pandas as pd
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras.callbacks import EarlyStopping
from tensorflow.keras.layers import LSTM, Dense, Dropout
from tensorflow.keras.models import Sequential
from tensorflow.keras.optimizers import Adam

file = r'/content/drive/MyDrive/only force/only_force_pt1.csv'
df = pd.read_csv(file)
df.head()

# Columns 1-8 are the 8 input features, column 9 is the target
X = df.iloc[:, 1:9]
y = df.iloc[:, 9]
print(type(X))

WIN_LEN = 5

def window_size(size, inputdata, targetdata):
    # Each sample is `size` consecutive rows of features; its target is
    # the value in the row right after the window
    X = []
    y = []
    i = 0
    while (i + size) <= len(inputdata) - 1:
        X.append(inputdata.iloc[i:i + size])
        y.append(targetdata.iloc[i + size])
        i += 1
    assert len(X) == len(y)
    return (X, y)

X_series, y_series = window_size(WIN_LEN, X, y)

# Chronological 80/20 split: the last 20% of windows are used for validation
data_split = int(len(X_series) * 0.8)
X_train, X_test = X_series[:data_split], X_series[data_split:]
y_train, y_test = y_series[:data_split], y_series[data_split:]

# Stack the windows into arrays; X_train has shape (samples, WIN_LEN, 8)
X_train = np.array(X_train)
X_test = np.array(X_test)
y_train = np.array(y_train)
y_test = np.array(y_test)

n_timesteps, n_features, n_outputs = X_train.shape[1], X_train.shape[2], 1

verbose, epochs, batch_size = 1, 500, 32
input_shape = (n_timesteps, n_features)

model = Sequential()
model.add(LSTM(64, input_shape=input_shape, return_sequences=False))
model.add(Dropout(0.2))
model.add(Dense(32, activation='relu', kernel_regularizer=keras.regularizers.l2(0.001)))
model.add(Dropout(0.2))
model.add(Dense(32, activation='relu', kernel_regularizer=keras.regularizers.l2(0.001)))
model.add(Dense(1))

earlystopper = EarlyStopping(monitor='val_loss', min_delta=0, patience=60, verbose=1, mode='auto')

model.summary()
model.compile(loss='mse', optimizer=Adam(learning_rate=0.00005),
              metrics=[tf.keras.metrics.RootMeanSquaredError()])

# shuffle=True only shuffles the order of the training windows between epochs;
# the validation data is still the last 20% of the series
history = model.fit(X_train, y_train, batch_size=batch_size, epochs=epochs, verbose=verbose,
                    validation_data=(X_test, y_test), callbacks=[earlystopper], shuffle=True)
```
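With `patience=60`, Keras's `EarlyStopping` only stops training 60 epochs after the last improvement in `val_loss`, and unless `restore_best_weights=True` is passed, the model left after `fit` holds the weights from the final epoch rather than the best one. The sketch below is a simplified pure-Python re-implementation of that stopping rule for `mode='min'` (not Keras's own code; the function name and loss values are hypothetical) to show where training ends relative to the best epoch.

```python
def simulate_early_stopping(val_losses, patience, min_delta=0.0):
    """Approximate the stopping rule of EarlyStopping(mode='min').

    Returns (best_epoch, stopped_epoch), both 1-based.
    Simplified illustration only, not the Keras implementation.
    """
    best = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, value in enumerate(val_losses, start=1):
        if value - min_delta < best:
            # Counts as an improvement: remember it and reset the counter
            best, best_epoch, wait = value, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    # Ran out of epochs without triggering the stop
    return best_epoch, len(val_losses)


# Made-up curve: val_loss improves for 100 epochs, then never improves again
val = [1.0 - 0.005 * e for e in range(100)] + [0.6] * 400
print(simulate_early_stopping(val, patience=60))  # (100, 160)
```

In the original script, the corresponding change would be `EarlyStopping(monitor='val_loss', patience=60, restore_best_weights=True)`, so that the weights from the best validation epoch are kept when training stops early.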

I get much better results when I use `train_test_split` and shuffle my training and testing data, but that leads to major overfitting problems. Also, since this is time-series data, I don't want to shuffle anyway.
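One plausible reason the shuffled split looks so much better: window `i` uses rows `i` to `i + WIN_LEN` (inputs plus target), so consecutive windows share most of their rows. After a random split, almost every test window has near-identical neighbours in the training set, and the validation score stops measuring performance on unseen data. The pure-Python sketch below (all helper names are hypothetical, not from the original code) counts the data rows shared between train and test windows for a shuffled split, a plain chronological split like the one above, and a chronological split that skips `WIN_LEN` windows at the boundary.

```python
import random


def window_rows(i, win_len):
    """Rows used by window i: inputs i..i+win_len-1 plus target row i+win_len."""
    return set(range(i, i + win_len + 1))


def shared_rows(train_idx, test_idx, win_len):
    """Number of distinct data rows that appear in both a train and a test window."""
    train_rows = set().union(*(window_rows(i, win_len) for i in train_idx))
    test_rows = set().union(*(window_rows(i, win_len) for i in test_idx))
    return len(train_rows & test_rows)


def chronological_split(n_windows, win_len, train_frac=0.8, gap=True):
    """First train_frac of windows for training; optionally skip win_len windows after the split."""
    split = int(n_windows * train_frac)
    train_idx = list(range(split))
    # The last train window ends at row split + win_len - 1, so starting the
    # test set at split + win_len leaves no shared rows
    start = split + win_len if gap else split
    test_idx = list(range(start, n_windows))
    return train_idx, test_idx


def shuffled_split(n_windows, train_frac=0.8, seed=0):
    """Random split of window indices, roughly what a shuffled train_test_split does."""
    idx = list(range(n_windows))
    random.Random(seed).shuffle(idx)
    split = int(n_windows * train_frac)
    return idx[:split], idx[split:]


N, WIN = 1000, 5
print("shuffled:", shared_rows(*shuffled_split(N), WIN))
print("chronological:", shared_rows(*chronological_split(N, WIN, gap=False), WIN))  # 5
print("chronological + gap:", shared_rows(*chronological_split(N, WIN), WIN))  # 0
```

With a chronological split only the few windows at the boundary overlap, while a shuffled split shares hundreds of rows, which would make the shuffled validation loss optimistic.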

Does anyone have any suggestions?