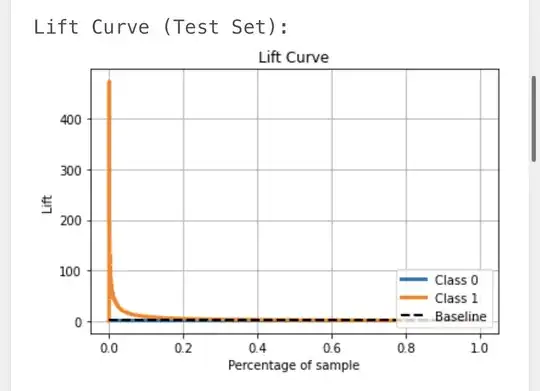

I am building a binary classification model with a Gradient Boosting (GB) classifier on imbalanced data. The event rate is 0.11% and the sample size is 350,000 records, split 70% training / 30% testing (245,000 / 105,000 records).
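With only 380 positive records in total (257 train + 123 test), a stratified split keeps the 0.11% event rate essentially identical in both parts. A minimal sketch with scikit-learn's `train_test_split`, assuming the data are a feature matrix `X` and a 0/1 target `y` (replaced here by hypothetical random stand-ins, not the real data):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data: 350,000 rows with roughly 0.11% positives.
# Replace X and y with the real feature matrix and target.
rng = np.random.default_rng(0)
n = 350_000
y = (rng.random(n) < 0.0011).astype(int)
X = rng.normal(size=(n, 5))

# stratify=y keeps the share of positives (almost) the same in both splits,
# which matters when only a few hundred events exist in total.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=42
)

print(len(y_train), len(y_test))  # 245000 105000
print(y_train.mean(), y_test.mean())
```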

I have tuned the hyperparameters using GridSearchCV and settled on a final model for evaluation.
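The post does not say which `scoring` was passed to `GridSearchCV`. If it was left at the default, candidates are ranked by the classifier's `score` method (accuracy), which is close to 1.00 for almost any model at this event rate. A sketch of a search scored by average precision instead, on a small hypothetical dataset; the grid values are placeholders, not the ones tuned in the question:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Hypothetical small, heavily imbalanced dataset (a few percent positives)
# so the sketch runs quickly; the real data have ~0.11% positives.
X, y = make_classification(n_samples=3000, n_features=10, weights=[0.98],
                           random_state=0)

# Placeholder grid for illustration only.
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [2, 3],
}

# average_precision summarises the precision-recall curve of class 1,
# so candidates are compared on how well they rank the rare events
# rather than on accuracy, which barely changes between candidates.
search = GridSearchCV(
    GradientBoostingClassifier(random_state=0),
    param_grid,
    scoring="average_precision",
    cv=StratifiedKFold(n_splits=3, shuffle=True, random_state=0),
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```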

Results are:

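Train data:

Confusion matrix (rows = actual class, columns = predicted class):

| | Predicted 0 | Predicted 1 |
|---|---:|---:|
| Actual 0 | 244741 | 2 |
| Actual 1 | 234 | 23 |

Classification report:

| | precision | recall | f1-score | support |
|---|---:|---:|---:|---:|
| 0 | 1.00 | 1.00 | 1.00 | 244743 |
| 1 | 0.92 | 0.09 | 0.16 | 257 |
| accuracy | | | 1.00 | 245000 |
| macro avg | 0.96 | 0.54 | 0.58 | 245000 |
| weighted avg | 1.00 | 1.00 | 1.00 | 245000 |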
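The class-1 row of the report follows directly from the confusion matrix: the model flags only 25 training records as events, 23 of them correctly (high precision), but it finds only 23 of the 257 actual events (low recall). A small helper, assuming the `[[TN, FP], [FN, TP]]` layout that scikit-learn's `confusion_matrix` uses (the helper name is hypothetical):

```python
def class1_metrics(cm):
    """Precision, recall and F1 for class 1 from a 2x2 confusion matrix
    laid out as [[TN, FP], [FN, TP]] (rows = actual, columns = predicted)."""
    (tn, fp), (fn, tp) = cm
    precision = tp / (tp + fp)   # share of predicted events that are real
    recall = tp / (tp + fn)      # share of real events that were found
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Training confusion matrix from the question.
train_cm = [[244741, 2], [234, 23]]
p, r, f = class1_metrics(train_cm)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")
# precision=0.92 recall=0.09 f1=0.16
```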

test data -

[[104873 4]

[ 121 2]]

precision recall f1-score support

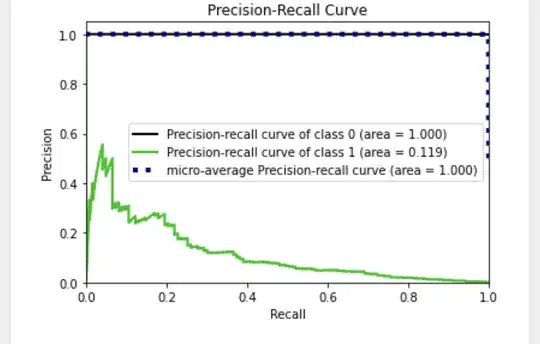

0 1.00 1.00 1.00 104877

1 0.33 0.02 0.03 123

accuracy - - 1.00 105000

macro avg 0.67 0.51 0.52 105000

weighted avg 1.00 1.00 1.00 105000
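The rounded accuracy of 1.00 hides how little the thresholded predictions add on the test set. Working only from the test confusion matrix above, the model's accuracy is slightly below that of a trivial rule that always predicts class 0, and it recovers 2 of the 123 events:

```python
# Test-set confusion matrix from the question, laid out as [[TN, FP], [FN, TP]].
tn, fp, fn, tp = 104873, 4, 121, 2
total = tn + fp + fn + tp  # 105000

model_accuracy = (tn + tp) / total
# A trivial rule that always predicts class 0 gets every negative right
# and every positive wrong.
all_zero_accuracy = (tn + fp) / total
recall_class1 = tp / (tp + fn)

print(f"model accuracy:        {model_accuracy:.5f}")     # 0.99881
print(f"always-0 accuracy:     {all_zero_accuracy:.5f}")  # 0.99883
print(f"test recall (class 1): {recall_class1:.3f}")      # 0.016
```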

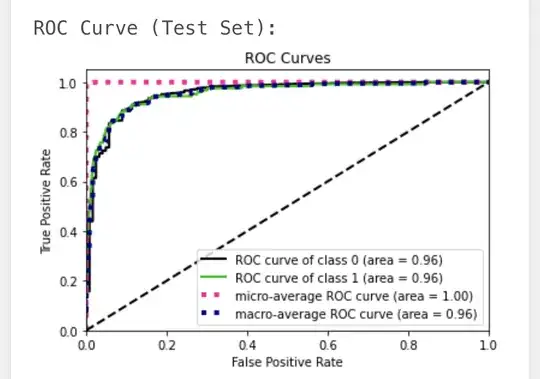

The ROC AUC is 0.96 for both class 1 and class 0.
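In a binary problem, ROC AUC is the same whichever class is treated as positive, so identical values for class 1 and class 0 are expected rather than a second, independent confirmation. A sketch on hypothetical synthetic scores (not the data in the question) shows this; it also prints average precision, which is often reported next to ROC AUC for rare events because it depends on the event rate:

```python
import numpy as np
from sklearn.metrics import average_precision_score, roc_auc_score

rng = np.random.default_rng(0)

# Hypothetical data: ~0.11% positives whose scores are shifted upwards.
n = 100_000
y = (rng.random(n) < 0.0011).astype(int)
p1 = 1 / (1 + np.exp(-(rng.normal(size=n) + 2.5 * y - 6)))  # "P(class 1)"

auc_class1 = roc_auc_score(y, p1)
# Treat class 0 as the positive class and score it with P(class 0) = 1 - P(class 1).
auc_class0 = roc_auc_score(1 - y, 1 - p1)
print(np.isclose(auc_class1, auc_class0))  # True

ap_class1 = average_precision_score(y, p1)
print(round(auc_class1, 3), round(ap_class1, 3))
```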

I am not sure whether this is a good model to use for predicting the probability of occurrence.
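Because the goal is a probability of occurrence rather than a hard 0/1 label, the confusion matrices (which depend on a cut-off) may matter less than whether the predicted probabilities are well calibrated. A sketch using `predict_proba`, `brier_score_loss` and `calibration_curve` from scikit-learn; the synthetic dataset, split and default hyperparameters are illustrative assumptions, not the model from the question:

```python
import numpy as np
from sklearn.calibration import calibration_curve
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import brier_score_loss
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced data standing in for the real records.
X, y = make_classification(n_samples=20_000, n_features=10, weights=[0.99],
                           flip_y=0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=0)

model = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)

# Column 1 of predict_proba is the estimated probability of the event;
# predict() effectively applies a 0.5 cut-off to it.
prob = model.predict_proba(X_test)[:, 1]

# Brier score: mean squared error of the probabilities (lower is better),
# compared with always predicting the training event rate.
brier = brier_score_loss(y_test, prob)
baseline = brier_score_loss(y_test, np.full_like(prob, y_train.mean()))
print(f"Brier: {brier:.5f}  base-rate Brier: {baseline:.5f}")

# Reliability table: per bin, mean predicted probability vs observed rate.
# Quantile bins keep a similar number of rows in every bin.
frac_pos, mean_pred = calibration_curve(y_test, prob, n_bins=5,
                                        strategy="quantile")
for pred, obs in zip(mean_pred, frac_pos):
    print(f"predicted {pred:.4f}  observed {obs:.4f}")
```

If the predicted and observed columns diverge, scikit-learn's `CalibratedClassifierCV` is one option for recalibrating the probabilities.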

Please advise.