I'm working on a dataset that doesn't come pre-split into train and test sets, and I'm a bit concerned about the mismatch between the label distributions of the two sets and how it might affect the trained model's performance. For context, I'm using deep neural networks and the task is regression.

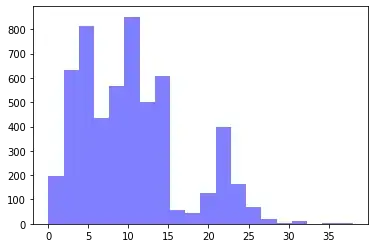

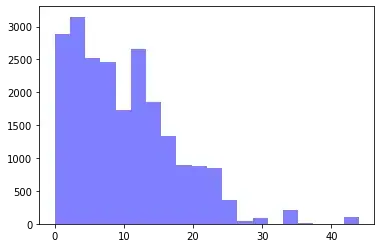

Splitting the samples sequentially into test/train (20/80) gives the label distributions shown in the two images, respectively.

Since tuning hyperparameters isn't improving model performance, I'm wondering whether it's generally acceptable to try different random seeds for shuffling the dataset until the test and train label distributions look alike. Are there any references or best practices for this? I'm not concerned about compromising generalization or causing overfitting, since the split would be chosen based on the predicted outcome (the labels) rather than on the form of the input data.
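
To make the idea concrete, here is a minimal sketch of the seed search I have in mind. The Kolmogorov-Smirnov statistic as the similarity measure, the synthetic `labels`, and the helper names are all assumptions for illustration, not my actual data or pipeline.

```python
import random
from bisect import bisect_right


def ks_statistic(a, b):
    """Largest gap between the empirical CDFs of two samples (0 = identical)."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    gap = 0.0
    # Both CDFs are step functions, so checking every sample value is enough.
    for value in a_sorted + b_sorted:
        cdf_a = bisect_right(a_sorted, value) / len(a_sorted)
        cdf_b = bisect_right(b_sorted, value) / len(b_sorted)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def split_indices(n_samples, test_fraction=0.2, seed=None):
    """Return (train_idx, test_idx); seed=None keeps the original order."""
    indices = list(range(n_samples))
    if seed is not None:
        random.Random(seed).shuffle(indices)
    n_test = int(round(n_samples * test_fraction))
    n_train = n_samples - n_test
    return indices[:n_train], indices[n_train:]


def label_gap(labels, train_idx, test_idx):
    """KS gap between the train and test label distributions of one split."""
    train_labels = [labels[i] for i in train_idx]
    test_labels = [labels[i] for i in test_idx]
    return ks_statistic(train_labels, test_labels)


# Hypothetical stand-in for the real labels: values that drift with position,
# so a sequential split puts different label ranges in train and test.
rng = random.Random(0)
labels = [i / 1000 + rng.gauss(0, 0.1) for i in range(1000)]

# Baseline: sequential 80/20 split (no shuffling).
sequential_gap = label_gap(labels, *split_indices(len(labels)))

# Seed search: shuffle with each seed and keep the one with the smallest gap.
seed_gaps = {
    seed: label_gap(labels, *split_indices(len(labels), seed=seed))
    for seed in range(20)
}
best_seed = min(seed_gaps, key=seed_gaps.get)

print(f"sequential split KS gap: {sequential_gap:.3f}")
print(f"best seed {best_seed} KS gap: {seed_gaps[best_seed]:.3f}")
```

Note that the seed is picked using only the labels; neither the input features nor any model score is involved in the selection.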