I have been developing a chess program that uses the alpha-beta pruning algorithm and an evaluation function that scores positions using the following features: material, king safety, mobility, pawn structure, and trapped pieces, among others. My evaluation function is derived from the following formula:

$$f(p) = w_1 \cdot \text{material} + w_2 \cdot \text{kingsafety} + w_3 \cdot \text{mobility} + w_4 \cdot \text{pawn-structure} + w_5 \cdot \text{trapped pieces}$$

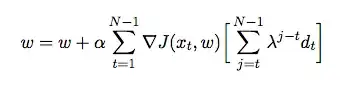

where $w_i$ is the weight assigned to the $i$-th feature. At this point I want to tune the weights of my evaluation function using temporal-difference learning, where the agent plays against itself and gathers training data from its environment in the process (a form of reinforcement learning). I have read some books and articles to get insight into how to implement this in Java, but they are theoretical rather than practical. I need a detailed explanation and pseudocode showing how to automatically tune the weights of my evaluation function based on previous games.
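To make the goal concrete, here is a minimal Java sketch of what one TD(λ) tuning step over a single self-play game could look like for a linear evaluation like the one above. It is a sketch under stated assumptions, not a definitive implementation. The class name `TdLambdaTuner`, the parameters `alpha` (learning rate), `lambda` (trace decay) and `beta` (scaling before squashing), and the choice to squash the raw score with `tanh` so it is comparable to a game outcome in $[-1, 1]$ are all hypothetical choices for illustration. Each row of `positions` is assumed to hold the feature values of one position from the game, in the same order as the weights and from the same side's point of view.

```java
final class TdLambdaTuner {

    // Linear evaluation f(p) = sum_i w_i * feature_i, as in the formula above.
    static double evaluate(double[] weights, double[] features) {
        if (weights.length != features.length) {
            throw new IllegalArgumentException("weights and features differ in length");
        }
        double score = 0.0;
        for (int i = 0; i < weights.length; i++) {
            score += weights[i] * features[i];
        }
        return score;
    }

    // Assumption: squash the raw score into (-1, 1) so it can be compared
    // with a game outcome of -1 (loss), 0 (draw) or +1 (win).
    static double predict(double[] weights, double[] features, double beta) {
        return Math.tanh(beta * evaluate(weights, features));
    }

    // One TD(lambda) update over a finished game; returns the new weights.
    static double[] update(double[] weights, double[][] positions, double outcome,
                           double alpha, double lambda, double beta) {
        int n = positions.length;

        // Predictions for every position, all computed with the old weights.
        double[] p = new double[n];
        for (int t = 0; t < n; t++) {
            p[t] = predict(weights, positions[t], beta);
        }

        double[] next = weights.clone();
        double discountedSum = 0.0;

        // Walk backwards so that discountedSum = sum_{j >= t} lambda^(j-t) * d_j.
        for (int t = n - 1; t >= 0; t--) {
            // The last prediction is compared with the real outcome,
            // every earlier one with the prediction that followed it.
            double target = (t == n - 1) ? outcome : p[t + 1];
            double d = target - p[t];
            discountedSum = d + lambda * discountedSum;

            // Gradient of tanh(beta * w.x) with respect to w_i is
            // beta * (1 - p^2) * x_i.
            double slope = beta * (1.0 - p[t] * p[t]);
            for (int i = 0; i < next.length; i++) {
                next[i] += alpha * slope * positions[t][i] * discountedSum;
            }
        }
        return next;
    }
}
```

A tuning loop would then play a self-play game, record the feature vector of each position (or of the leaf of the principal variation, as in the TD-Leaf variant), and call `update` once per game with the final result. With `lambda = 0` each prediction is only pulled toward the next one; with `lambda = 1` every prediction is pulled toward the final outcome.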