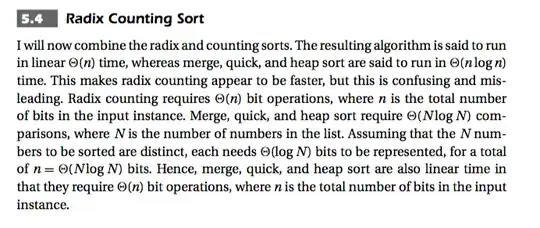

This is an excerpt from the algorithms textbook How to Think About Algorithms by Jeff Edmonds (This book is a gem by the way).

I get his conclusion about Merge/Quick/Heap sorts having $O(NlogN)$ operations with respect to $N$, the number of elements in the input list, and at the same time they are linear in the context that they need $O(n)$ opertaions with respect to the number of bits needed to represent the input list. Different models of computation and a good way to describe one algorithm under different models.

But my question is in the line

Assuming that the $N$ numbers to be sorted are distinct, each needs $logN$ bits to be represented, for a total of $n = \Theta(NlogN)$ bits.

My understanding of this was that with $N$ distinct numbers, we need word size $w = \theta(logN)$, as defined in CLRS. We want the word size to be able to at least index into $N$ different elements but not so big that we can put everything in one word. Also, with $N$ distinct elements, we need $\Omega(logN)$ bits to represent the largest number. Assuming each word will fit in $w$, Edmonds' claim that $n = \theta(NlogN)$ bits made sense. Please correct me if my analysis is wrong up to here.

But when I try to apply this to counting sort, something doesn't seem right. With $N$ again the number of elements in the input and $k$ the value of the maximum element in the list, the running time is $O(N + k)$. Counting sort is linear with respect to $N$, the number of elements in the input, when $k = O(N)$. Using this constraint to represent the input as the total number of bits $n$, I think $n = O(Nlogk) = O(NlogN)$.

So with in the RAM model of computation, how can I express the run-time of counting sort with respect to $n$ bits? Merge/quick/heap sorts had time complexity $O(n)$ with respect to $n$ bits, as was expressed cleanly by Edmonds. I am not sure how to do something similar for counting sort, and maybe possibly for radix sort using counting sort as a subroutine. Any idea how to do this in the two cases when $k = \Theta(N)$ and when this condition is not present? I am suspecting the former will give some kind of polynomial time with respect to $n$ and the latter exponential (hence pseudo-polynomial) time with respect to $n$ bits, but have trouble expressing the mathematics...