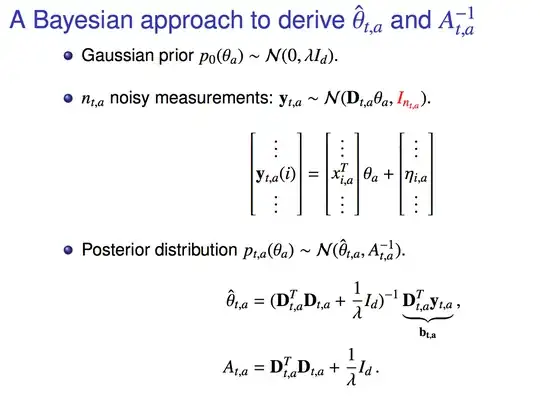

Prior:

$$p_0(\theta_a) \varpropto \text{exp}(-\frac{1}{2}\theta_{a}^{T} (\lambda I_d)^{-1} \theta_a) = \text{exp}(-\frac{1}{2} \frac{1}{\lambda}\theta_{a}^{T} \theta_a)$$

Likelihood:

\begin{align*}

p(y_{t,a} | \theta_a) &= (2 \pi)^{-\frac{n_{t,a}}{2}} |I_{n_{t,a}}|^{-\frac{1}{2}} \text{exp}(-\frac{1}{2} (y_{t,a} - D_{t,a} \theta_{a})^{T} I_{n_{t,a}}^{-1} (y_{t,a} - D_{t,a} \theta_a)) \\

& \varpropto \text{exp}(-\frac{1}{2}(y_{t,a} - D_{t,a} \theta_{a})^{T} I_{n_{t,a}}^{-1} (y_{t,a} - D_{t,a} \theta_a))

\end{align*}

Hence, by Bayes' rule, the posterior is proportional to the prior times the likelihood:

\begin{align*}

p_{t,a}(\theta_a) &\varpropto \text{exp}(-\frac{1}{2} \frac{1}{\lambda} \theta_{a}^{T} \theta_a) ~ \text{exp}(-\frac{1}{2}(y_{t,a} - D_{t,a} \theta_{a})^{T} I_{n_{t,a}}^{-1} (y_{t,a} - D_{t,a} \theta_a)) \\

&\varpropto \text{exp}(-\frac{1}{2} (\frac{1}{\lambda} \theta_{a}^{T} \theta_a + y_{t,a}^{T} y_{t,a} - y_{t,a}^{T} D_{t,a} \theta_a - \theta_{a}^{T} D_{t,a}^{T} y_{t,a} + \theta_{a}^{T} D_{t,a}^{T} D_{t,a} \theta_a))

\end{align*}

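Since the exponent above is quadratic in $\theta_a$, the posterior is Gaussian. Writing its mean as $\hat{\theta_a}$ and its precision (inverse covariance) matrix as $A_{t,a}$, we also have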

\begin{align*}

p_{t,a}(\theta_a) &\varpropto \text{exp}(-\frac{1}{2}(\theta_a - \hat{\theta_{a}})^{T} A_{t,a} (\theta_a - \hat{\theta_a})) \\

&\varpropto \text{exp}(-\frac{1}{2}(\theta_a^T A_{t,a} \theta_a - \theta_a^T A_{t,a} \hat{\theta_a} - \hat{\theta_a}^T A_{t,a} \theta_a + \hat{\theta_a}^T A_{t,a} \hat{\theta_a}))

\end{align*}

Both expressions describe the same density, so they are proportional, and their exponents agree up to an additive constant $c$ that does not depend on $\theta_a$:

\begin{align*}

\text{exp}(-\frac{1}{2} (\frac{1}{\lambda} \theta_{a}^{T} \theta_a + y_{t,a}^{T} y_{t,a} - y_{t,a}^{T} D_{t,a} \theta_a - \theta_{a}^{T} D_{t,a}^{T} y_{t,a} + \theta_{a}^{T} D_{t,a}^{T} D_{t,a} \theta_a)) &\varpropto \text{exp}(-\frac{1}{2}(\theta_a^T A_{t,a} \theta_a - \theta_a^T A_{t,a} \hat{\theta_a} - \hat{\theta_a}^T A_{t,a} \theta_a + \hat{\theta_a}^T A_{t,a} \hat{\theta_a})) \\

\frac{1}{\lambda} \theta_{a}^{T} \theta_a + y_{t,a}^{T} y_{t,a} - y_{t,a}^{T} D_{t,a} \theta_a - \theta_{a}^{T} D_{t,a}^{T} y_{t,a} + \theta_{a}^{T} D_{t,a}^{T} D_{t,a} \theta_a &= \theta_a^T A_{t,a} \theta_a - \theta_a^T A_{t,a} \hat{\theta_a} - \hat{\theta_a}^T A_{t,a} \theta_a + \hat{\theta_a}^T A_{t,a} \hat{\theta_a} + c

\end{align*}

Equating the terms that are quadratic in $\theta_a$:

$$\theta_a^{T} (\frac{1}{\lambda} I_d)\theta_a + \theta_a^T D_{t,a}^T D_{t,a} \theta_a = \theta_a^T A_{t,a} \theta_a$$

Hence $A_{t,a} = D_{t,a}^T D_{t,a} + \frac{1}{\lambda} I_d$.

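Similarly, equating the terms that are linear in $\theta_a$ gives $\theta_a^T D_{t,a}^T y_{t,a} = \theta_a^T A_{t,a} \hat{\theta_a}$ for every $\theta_a$, that is, $A_{t,a} \hat{\theta_a} = D_{t,a}^T y_{t,a}$. Writing $b_{t,a} = D_{t,a}^T y_{t,a}$, we have $$\hat{\theta_a} = A_{t,a}^{-1} b_{t,a}$$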
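
The result can be sanity-checked numerically. The sketch below is only an illustration, not part of the derivation: the function names, the synthetic data, and the choice $\lambda = 10$ are all made up for the example, and it assumes $b_{t,a} = D_{t,a}^T y_{t,a}$, which is what matching the linear terms yields. It checks that the gradient of the negative log-posterior vanishes at $\hat{\theta_a}$ and that the exponent differs from the completed-square form only by a constant.

```python
import numpy as np

def posterior_params(D, y, lam):
    """Posterior precision A, vector b, and mean theta_hat for a N(0, lam*I)
    prior and unit-variance Gaussian noise (the setting of the derivation)."""
    d = D.shape[1]
    A = D.T @ D + np.eye(d) / lam      # A = D^T D + (1/lam) I_d
    b = D.T @ y                        # assumed definition: b = D^T y
    theta_hat = np.linalg.solve(A, b)  # solves A theta_hat = b
    return A, b, theta_hat

def neg_log_posterior(theta, D, y, lam):
    """Negative log-posterior up to an additive constant."""
    r = y - D @ theta
    return 0.5 * (theta @ theta / lam + r @ r)

# Synthetic data, purely hypothetical.
rng = np.random.default_rng(0)
D = rng.normal(size=(50, 3))
theta_true = np.array([1.0, -2.0, 0.5])
y = D @ theta_true + rng.normal(size=50)
lam = 10.0

A, b, theta_hat = posterior_params(D, y, lam)

# Gradient of the negative log-posterior at theta_hat (equals A theta_hat - b).
grad = theta_hat / lam - D.T @ (y - D @ theta_hat)

# Compare the exponent with the completed-square form at a random point.
theta = rng.normal(size=3)
lhs = neg_log_posterior(theta, D, y, lam) - neg_log_posterior(theta_hat, D, y, lam)
rhs = 0.5 * (theta - theta_hat) @ A @ (theta - theta_hat)
```

Here `grad` should be zero up to floating-point error, and `lhs` should match `rhs`, confirming that $A_{t,a}$ and $\hat{\theta_a}$ are the precision and mean of the posterior. `np.linalg.solve` is used instead of forming $A_{t,a}^{-1}$ explicitly, which is usually the more numerically stable choice.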