An alternative way of thinking about this is to track the maximum value that i reaches before it is reset. As it turns out, this makes it more straightforward to reason about how the prior sort order of A affects the run time of the algorithm.

In particular, observe that when i sets its new maximal value, let's call it N, the array [A[0], ..., A[N-1]] is sorted in ascending order.
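If you want to convince yourself of this, you can instrument the loop. The sketch below assumes the algorithm in question is the single-loop sort that compares neighbours and resets i to 0 after every swap; the name `reset_sort` and the exact loop layout are my assumptions, so adapt them to the variant you actually have.

```python
import random


def reset_sort(a):
    """Sort `a` in place, checking the sorted-prefix claim along the way.

    Assumed variant: compare a[i] with a[i + 1]; if they are out of order,
    swap them and reset i to 0, otherwise advance i by one.
    """
    i = 0
    max_i = 0
    while i < len(a) - 1:
        if a[i] > a[i + 1]:
            a[i], a[i + 1] = a[i + 1], a[i]
            i = 0
        else:
            i += 1
            if i > max_i:
                # i has just set a new maximum N = i.
                max_i = i
                assert a[:i] == sorted(a[:i]), "prefix A[0..N-1] is not sorted"
    return a


data = [random.randrange(100) for _ in range(30)]
result = reset_sort(list(data))
print(result == sorted(data))
```

The assertion inside the loop never fires for this variant, because i can only climb to N by passing every neighbouring comparison below it.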

So what happens when we add the element A[N] to the mix?

The mathematics:

Well, let's say it fits at position $p_N$. Then we need $N$ loop iterations (which I'll denote $\text{steps}$) to move it to place $N-1$, a total of $N + (N-1)$ iterations to move it to place $N-2$, and in general:

$$\text{steps}_N(p_N) = N + (N-1) + (N-2) + \dots + (p_N+1) = \tfrac{1}{2}(N(N+1) - p_N(p_N+1))$$
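The closed form is just the difference of two triangular numbers. As a sanity check, here is a small sketch that compares the term-by-term sum with the closed form for small values; the function names are mine and purely illustrative.

```python
def steps_sum(N, p):
    """N + (N-1) + ... + (p+1), added up term by term."""
    return sum(range(p + 1, N + 1))


def steps_closed(N, p):
    """Closed form (N(N+1) - p(p+1)) / 2; the numerator is always even."""
    return (N * (N + 1) - p * (p + 1)) // 2


mismatches = [
    (N, p)
    for N in range(1, 50)
    for p in range(N + 1)
    if steps_sum(N, p) != steps_closed(N, p)
]
print(mismatches)  # an empty list means the two expressions agree
```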

For a randomly sorted array, $p_N$ is uniformly distributed on $\{0, 1,\dots, N\}$ for each $N$, so each of the $N+1$ positions has probability $\tfrac{1}{N+1}$:

$$\mathbb{E}(\text{steps}_N(p_N)) = \sum_{a=0}^{N} \mathbb{P}(p_N = a)\,\text{steps}_N(a) = \sum_{a=0}^{N}\tfrac{1}{N+1}\tfrac{1}{2}(N(N+1) - a(a+1)) = \tfrac{1}{2} ( N(N+1) - \tfrac{1}{3}N(N+2)) = \tfrac{1}{6} N(2N+1) = \Theta(N^2)$$

The sum can be evaluated using Faulhaber's formula or the Wolfram Alpha link at the bottom.
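If you would rather not trust the algebra, the expectation can also be computed exactly by brute force. This is a minimal sketch, assuming $p_N$ is uniform over the $N+1$ positions $0, \dots, N$; the helper names are hypothetical. It only shows that the average divided by $N^2$ settles near $\tfrac{1}{3}$, which is all the $\Theta(N^2)$ claim needs.

```python
from fractions import Fraction


def steps(N, p):
    """steps_N(p) = (N(N+1) - p(p+1)) / 2 as an exact fraction."""
    return Fraction(N * (N + 1) - p * (p + 1), 2)


def expected_steps(N):
    """Average of steps_N(p) with p uniform on the N + 1 positions 0..N."""
    return sum(steps(N, p) for p in range(N + 1)) / (N + 1)


for N in (10, 100, 1000):
    ratio = expected_steps(N) / N**2
    print(N, float(ratio))  # the ratio settles near 1/3 as N grows
```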

For an inversely sorted array, $p_N=0$ for all $N$, and we get:

$$\text{steps}_N(p_N) = \tfrac{1}{2}N(N+1)$$

exactly, taking strictly longer than any other value of $p_N$.

For an already sorted array, $p_N = N$ and $\text{steps}_N(p_N) = 0$, so only the lower-order terms ignored here contribute to the running time.

Total time:

To get the total time, we sum the steps over all $N$. (If we were being super careful, we would count the swaps as well as the loop iterations, and take care of the start and end conditions, but it is reasonably easy to see that they don't contribute to the complexity in most cases.)

And again, using linearity of expectation and Faulhaber's Formula:

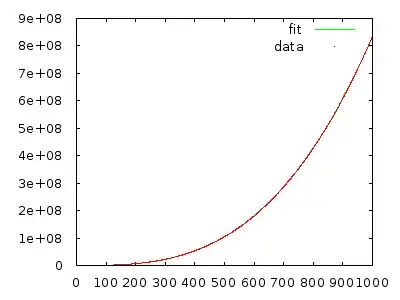

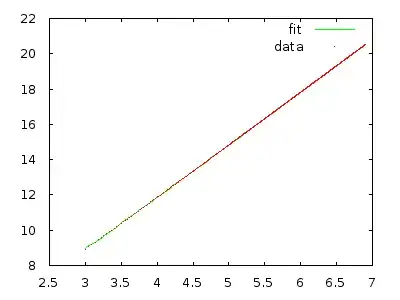

$$\text{Expected Total Steps} = \mathbb{E}\left(\sum_{N=1}^n \text{steps}_N(p_N)\right) = \sum_{N=1}^n \mathbb{E}(\text{steps}_N(p_N)) = \sum_{N=1}^n \Theta(N^2) = \Theta(n^3)$$

Of course, if for some reason $\text{steps}_N(p_N)$ is not $\Theta(N^2)$ (e.g. the arrays we're looking at are already very close to being sorted), then this need not be the case. But it takes very specific distributions on $p_N$ to achieve this!
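To see the totals in practice, here is a rough sketch that counts loop iterations directly and compares them with the sum of $\text{steps}_N(p_N)$. It assumes the reset-to-zero variant that compares `a[i]` with `a[i + 1]`; for that variant I get one extra comparison per $N$ on top of the steps, which is exactly the kind of lower-order term mentioned above. Other variants may differ in those lower-order terms. The function names are mine.

```python
import random


def count_iterations(a):
    """Run the assumed reset-to-zero sort on a copy of `a`; return loop iterations."""
    a = list(a)
    i = 0
    iterations = 0
    while i < len(a) - 1:
        iterations += 1
        if a[i] > a[i + 1]:
            a[i], a[i + 1] = a[i + 1], a[i]
            i = 0
        else:
            i += 1
    return iterations


def predicted_iterations(a):
    """(n - 1) plus the sum of steps_N(p_N) over N = 1..n-1.

    p_N is the number of earlier elements that are <= a[N], i.e. the
    position where a[N] ends up inside the sorted prefix.
    """
    total = max(len(a) - 1, 0)  # one comparison per N, even if nothing moves
    for N in range(1, len(a)):
        p = sum(1 for x in a[:N] if x <= a[N])
        total += (N * (N + 1) - p * (p + 1)) // 2
    return total


n = 60
inputs = {
    "sorted": list(range(n)),
    "reversed": list(range(n, 0, -1)),
    "random": random.sample(range(n), n),
}
for name, arr in inputs.items():
    print(name, count_iterations(arr), predicted_iterations(arr))
```

The sorted input costs a linear number of iterations, the reversed input is the cubic worst case, and a random permutation lands in between but still grows cubically.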

Relevant reading: