Edit Jan 31: an important special case is when the sums form a nested structure; search for "Hasse diagram is a tree" below.

Here's a practically relevant variation on the matrix chain problem:

Find the optimal way to compute a sum over all weighted paths in a graph, where the weight of each path is the matrix product of its edge labels (i.e., a matrix chain).

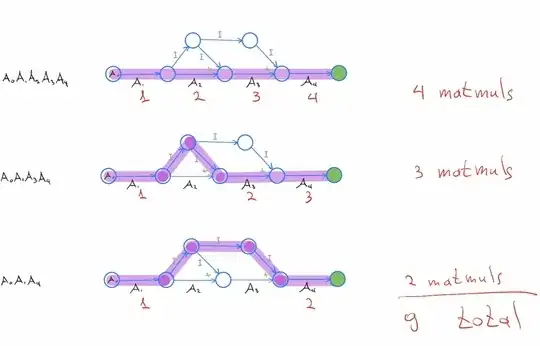

For instance, take the sum $$Q=A_0 A_1 A_2 A_3 A_4 +A_0 A_1 A_3 A_4 +A_0 A_1 A_4$$

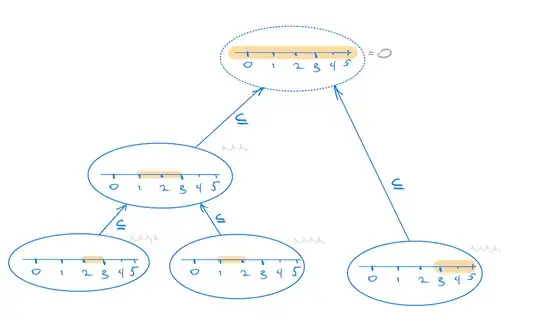

We can represent it as the following sum over weighted paths:

Now we can count the matrix multiplications (in red) involved in computing this sum.
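When every term is evaluated on its own, the count is easy to reproduce. A term with $k$ factors needs $k-1$ matrix multiplications, and combining $m$ terms needs $m-1$ additions. The sketch below is minimal and encodes each term as the list of indices of the matrices it contains. That encoding is a hypothetical choice made here for illustration.

```python
# Each term of Q, written as the indices i of the matrices A_i it contains.
Q_TERMS = [[0, 1, 2, 3, 4], [0, 1, 3, 4], [0, 1, 4]]

def naive_counts(terms):
    """Matrix multiplications and additions when every term is computed separately."""
    multiplications = sum(len(t) - 1 for t in terms)  # k factors -> k - 1 products
    additions = len(terms) - 1                         # m terms -> m - 1 additions
    return multiplications, additions

print(naive_counts(Q_TERMS))  # (9, 2)
```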

Here's a more efficient way to compute $Q$:

$$Q=A_0A_1(A_2+I)A_3A_4 + A_0A_1A_4$$
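The rewrite uses only distributivity: $A_0A_1(A_2+I)A_3A_4 = A_0A_1A_2A_3A_4 + A_0A_1A_3A_4$. The term $A_2+I$ is defined only when $A_2$ is square. The shapes of the three terms of $Q$ already force $d_2=d_3=d_4$, so that condition holds. Below is a quick numerical sanity check with NumPy. The dimensions are arbitrary example values chosen here, not taken from the question.

```python
import numpy as np

rng = np.random.default_rng(0)
# Illustrative dimensions d_0..d_5; A_i has shape (d_i, d_{i+1}).
# The terms of Q force d_2 = d_3 = d_4, so A_2 and A_3 are square.
d = [2, 3, 4, 4, 4, 5]
A0, A1, A2, A3, A4 = (rng.standard_normal((d[i], d[i + 1])) for i in range(5))
I = np.eye(d[2])

# Original sum of three matrix chains.
Q_direct = A0 @ A1 @ A2 @ A3 @ A4 + A0 @ A1 @ A3 @ A4 + A0 @ A1 @ A4
# Factored form using (A_2 + I).
Q_factored = A0 @ A1 @ (A2 + I) @ A3 @ A4 + A0 @ A1 @ A4

print(np.allclose(Q_direct, Q_factored))  # True
```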

We can view it as the following sum over paths:

Some edges are labeled with I, corresponding to multiplication by the identity matrix.
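Here is one way to evaluate such a graph. Number the nodes in topological order. The sum over all source-to-sink paths can then be accumulated node by node with $F(v)=\sum_{u\to v}F(u)\,L(u,v)$, where $L(u,v)$ is the edge label and $F$ at the source is the identity. The sketch below assumes a hypothetical edge encoding as `(u, v, label)` triples, with `None` standing for an $I$ edge so that no multiplication is spent on it. It also assumes $Q$'s graph has edges $A_0,\ldots,A_4$ plus identity edges $2\to3$ and $2\to4$, which may not match how the figure is drawn. This is only a fixed left-to-right evaluation order, not a solution to the optimization problem. For this $Q$ it evaluates $((A_0A_1(A_2+I))A_3 + A_0A_1)A_4$ with 4 matrix multiplications.

```python
import numpy as np

def path_sum(num_nodes, edges):
    """Sum of label products over all paths from node 0 to node num_nodes - 1.

    Assumes nodes are in topological order (every edge has u < v) and edges are
    (u, v, label) triples, with label a matrix or None for the identity.
    Returns the sum and the number of matrix multiplications performed.
    """
    acc = {0: None}  # F(source) is the identity, represented by None
    matmuls = 0
    for v in range(1, num_nodes):
        total = None
        for u, w, label in edges:
            if w != v or u not in acc:
                continue
            left = acc[u]
            if label is None:      # identity edge: reuse F(u), no multiplication
                term = left
            elif left is None:     # edge leaving the source: I @ label == label
                term = label
            else:
                term = left @ label
                matmuls += 1
            if term is None:
                raise ValueError("identity-only paths from the source are not handled")
            total = term if total is None else total + term
        if total is not None:
            acc[v] = total
    return acc[num_nodes - 1], matmuls

rng = np.random.default_rng(0)
d = [2, 3, 4, 4, 4, 5]  # illustrative dimensions with d_2 = d_3 = d_4
A = [rng.standard_normal((d[i], d[i + 1])) for i in range(5)]
edges = [(0, 1, A[0]), (1, 2, A[1]), (2, 3, A[2]), (2, 3, None),
         (3, 4, A[3]), (2, 4, None), (4, 5, A[4])]
Q, count = path_sum(6, edges)
expected = A[0] @ A[1] @ A[2] @ A[3] @ A[4] + A[0] @ A[1] @ A[3] @ A[4] + A[0] @ A[1] @ A[4]
print(np.allclose(Q, expected), count)  # True 4
```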

Given a list of matrix dimensions $d_0,\ldots,d_n$ corresponding to matrices $A_0,\ldots,A_{n-1}$ (so $A_i$ is $d_i\times d_{i+1}$) and a list of paths, the task is to find a sequence of matrix multiplications and additions that produces $Q$ using the smallest total number of scalar multiplications.
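With a single path, this reduces to the classic matrix chain ordering problem. Under the usual cost model, multiplying a $p\times q$ matrix by a $q\times r$ matrix costs $pqr$ scalar multiplications, and additions are not counted. The minimal sketch below implements the textbook $O(n^3)$ dynamic program for one chain. Ordering each term optimally on its own and summing the costs gives a valid schedule. Its cost is therefore an upper bound on the optimum, one that ignores any sharing between terms. The helper `term_dims` is a hypothetical name introduced here.

```python
def chain_cost(dims):
    """Minimum scalar multiplications for a chain with shapes
    (dims[0], dims[1]), (dims[1], dims[2]), ..., via the classic O(n^3) DP."""
    n = len(dims) - 1  # number of matrices in the chain
    # best[i][j] = cheapest cost of multiplying matrices i..j (inclusive)
    best = [[0] * n for _ in range(n)]
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length - 1
            best[i][j] = min(
                best[i][k] + best[k + 1][j] + dims[i] * dims[k + 1] * dims[j + 1]
                for k in range(i, j)
            )
    return best[0][n - 1]

def term_dims(d, term):
    """Dimensions of the sub-chain formed by the matrices A_i with i in term."""
    return [d[term[0]]] + [d[i + 1] for i in term]

# Upper bound for Q: each term ordered optimally on its own, nothing shared.
Q_TERMS = [[0, 1, 2, 3, 4], [0, 1, 3, 4], [0, 1, 4]]
print(chain_cost([10, 30, 5, 60]))                              # 4500
print(sum(chain_cost(term_dims([1] * 6, t)) for t in Q_TERMS))  # 9
```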

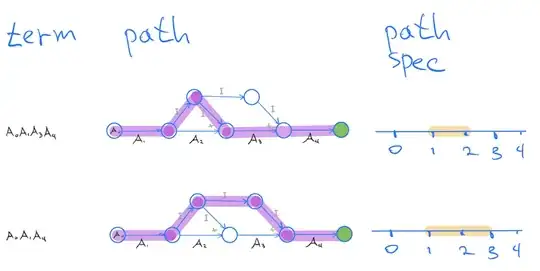

Specifying Paths

Each term in the sum is specified by a pair of numbers $(i,j)$, indicating that matrices $A_{i+1},A_{i+2},\ldots,A_j$ are missing from the term; the empty tuple $()$ denotes the full chain.

For instance, for the problem above, the paths are $[(), (1,2), (1,3)]$: the second term is missing $\{A_2\}$ and the third term is missing $\{A_2,A_3\}$. Viewing paths as connected subgraphs of the chain graph, paths are partially ordered by the subgraph relation, so the set of paths forms a lattice.
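The sketch below illustrates this encoding under two assumptions made here. First, `()` denotes the full chain. Second, the subgraph order is taken to be inclusion of the sets of matrices each term keeps. The code expands each gap $(i,j)$ into the remaining matrices and lists the cover relations, i.e. the edges of the Hasse diagram. The function names are hypothetical.

```python
def present(n, gap):
    """Indices of the matrices kept in a term of the chain A_0..A_{n-1}.
    gap == () keeps every matrix; gap == (i, j) drops A_{i+1}, ..., A_j."""
    if not gap:
        return frozenset(range(n))
    i, j = gap
    return frozenset(k for k in range(n) if not i < k <= j)

def hasse_edges(n, gaps):
    """Cover relations (smaller, larger) under inclusion of kept matrices."""
    sets = {gap: present(n, gap) for gap in gaps}
    edges = []
    for a in gaps:
        for b in gaps:
            # a < b is a cover if no third term lies strictly between them.
            if sets[a] < sets[b] and not any(
                sets[a] < sets[c] < sets[b] for c in gaps
            ):
                edges.append((a, b))
    return edges

Q_GAPS = [(), (1, 2), (1, 3)]
print(sorted(present(5, (1, 3))))  # [0, 1, 4]
print(hasse_edges(5, Q_GAPS))      # [((1, 2), ()), ((1, 3), (1, 2))]
```

For $Q$ the diagram is a chain: $A_0A_1A_4 \subset A_0A_1A_3A_4 \subset A_0A_1A_2A_3A_4$.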

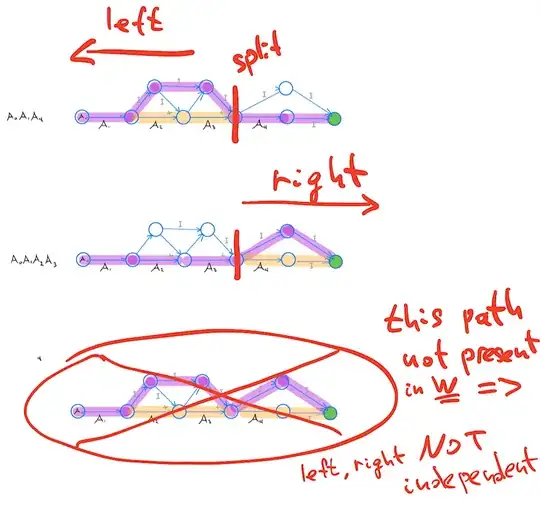

An important special case is when the Hasse diagram is a tree.

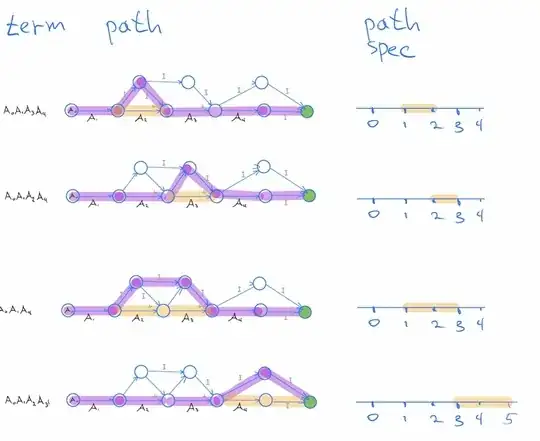

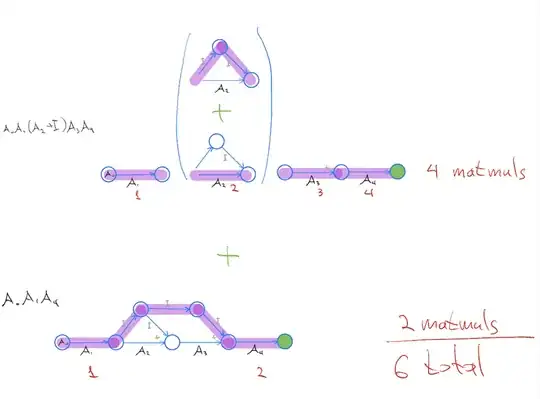

For example, consider this sum.

$$W=A_0 A_1 A_3 A_4 + A_0 A_1 A_2 A_4 + A_0 A_1 A_4 + A_0 A_1 A_2 A_3$$

And the corresponding Hasse diagram:

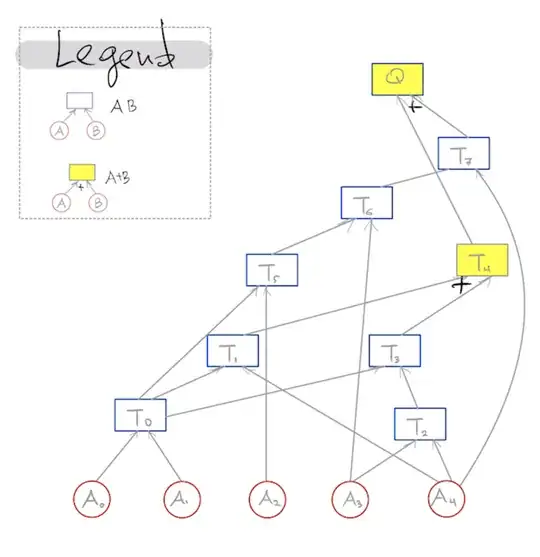

Coming back to the original example $Q$, we can parenthesize the matrix products as follows: $$Q=(A_0 A_1) A_4 + (A_0 A_1) (A_3 A_4) + ((A_0 A_1) A_2) (A_3 A_4)$$

Notice that some subproducts, such as $A_0 A_1$, appear in several terms, so we can compute them once and reuse them through temporary variables like $T_0=A_0 A_1$.

Now we can visualize a particular schedule below and use this colab to find that it requires 7 scalar multiplications when $d_0=d_1=\ldots=d_n=1$:

```
T0 = matmul(A0, A1)
T1 = matmul(T0, A4)
T2 = matmul(A3, A4)
T3 = matmul(T0, T2)
T4 = add(T1, T3)
T5 = matmul(T0, A2)
T6 = matmul(T5, A3)
T7 = matmul(T6, A4)
Q = add(T7, T4)
```
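To reproduce the count without the colab, the sketch below executes the schedule step by step with NumPy. For every $p\times q$ by $q\times r$ product it adds $p\,q\,r$ to a tally of scalar multiplications, and additions are free. The wrapper class and its method names are illustrative assumptions, not the colab's code.

```python
import numpy as np

class MultCounter:
    """Wraps matmul/add so that scalar multiplications are tallied."""
    def __init__(self):
        self.scalar_mults = 0

    def matmul(self, X, Y):
        p, q = X.shape
        q2, r = Y.shape
        assert q == q2, "inner dimensions must agree"
        self.scalar_mults += p * q * r  # cost of a (p x q) @ (q x r) product
        return X @ Y

    def add(self, X, Y):
        return X + Y  # additions are not counted

def run_schedule(A0, A1, A2, A3, A4):
    c = MultCounter()
    T0 = c.matmul(A0, A1)
    T1 = c.matmul(T0, A4)
    T2 = c.matmul(A3, A4)
    T3 = c.matmul(T0, T2)
    T4 = c.add(T1, T3)
    T5 = c.matmul(T0, A2)
    T6 = c.matmul(T5, A3)
    T7 = c.matmul(T6, A4)
    Q = c.add(T7, T4)
    return Q, c.scalar_mults

ones = [np.ones((1, 1)) for _ in range(5)]  # d_0 = ... = d_5 = 1
Q, cost = run_schedule(*ones)
print(cost)  # 7
```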

Now the question is: how hard is this problem to solve or approximate

- when $d_0=d_1=\ldots=d_n=1$

- when $d_i$'s are arbitrary positive integers

Edit Feb 17

Example of the problematic split mentioned in the comments: