The asymptotic cost, or $\mathcal O$-notation, describes the limiting behaviour of a function as its argument tends to infinity, i.e. its growth rate.

The function itself, e.g. the number of comparisons and/or swaps, can differ between two algorithms with the same asymptotic cost, as long as both functions grow at the same rate.
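As a worked illustration, take two hypothetical cost functions $f(n) = n^2/4$ and $g(n) = n(n-1)/2$:

$$
\lim_{n\to\infty}\frac{f(n)}{n^2} = \frac14, \qquad
\lim_{n\to\infty}\frac{g(n)}{n^2} = \frac12, \qquad
\lim_{n\to\infty}\frac{f(n)}{g(n)} = \frac12 .
$$

Both are in $\mathcal O(n^2)$, yet for large $n$ the first is only about half the second.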

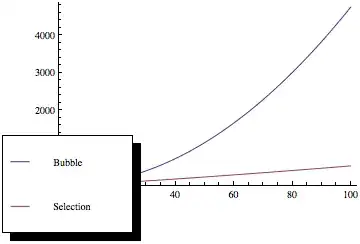

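More specifically, Bubble sort requires, on average, about $n/4$ swaps per entry. Each swap of two adjacent entries removes exactly one inversion (a pair that is out of order). A random permutation contains on average $n(n-1)/4$ inversions, because each of the $n(n-1)/2$ pairs is out of order with probability $1/2$. Selection sort, by contrast, requires at most $1$ swap per entry: once the minimum/maximum of the unsorted part has been found, it is swapped once into its final position at the start/end of the array.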
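A minimal Python sketch can check these swap counts empirically. The function names `bubble_sort_swaps` and `selection_sort_swaps` are hypothetical. The Bubble sort variant assumed here stops after a pass without swaps, which does not change the number of swaps.

```python
import random


def bubble_sort_swaps(values):
    """Bubble sort a copy of `values`; return the number of swaps performed."""
    a = list(values)
    n = len(a)
    swaps = 0
    for end in range(n - 1, 0, -1):
        swapped = False
        for i in range(end):
            if a[i] > a[i + 1]:
                # Each adjacent swap removes exactly one inversion.
                a[i], a[i + 1] = a[i + 1], a[i]
                swaps += 1
                swapped = True
        if not swapped:  # assumption: early exit once the array is sorted
            break
    return swaps


def selection_sort_swaps(values):
    """Selection sort a copy of `values`; return the number of swaps performed."""
    a = list(values)
    n = len(a)
    swaps = 0
    for start in range(n - 1):
        # Index of the minimum of the unsorted part a[start:].
        m = min(range(start, n), key=a.__getitem__)
        if m != start:
            a[start], a[m] = a[m], a[start]  # one swap into final position
            swaps += 1
    return swaps


n = 1000
values = random.sample(range(n), n)  # uniformly random permutation
print(bubble_sort_swaps(values) / n)     # fluctuates around (n - 1)/4 = 249.75
print(selection_sort_swaps(values) / n)  # at most (n - 1)/n, i.e. below 1
```

The Bubble sort count equals the number of inversions of the input exactly. The Selection sort count can never exceed $n-1$.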

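In terms of the number of comparisons, Bubble sort (stopping after the first pass without swaps) requires roughly $k\times n$ comparisons. Here $k$ is the maximum distance an entry has to move towards the front of the array. A single pass moves each entry at most one position forward, so about $k$ passes of up to $n-1$ comparisons each are needed. For uniformly distributed initial values, $k$ is usually larger than $n/2$. Selection sort, however, always requires $(n-1)+(n-2)+\dots+1 = n(n-1)/2$ comparisons.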
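The comparison counts can be checked the same way. This sketch assumes distinct values. The helper `max_left_distance` (hypothetical, like the other function names) computes $k$ as the largest number of positions any entry must move towards the front.

```python
import random


def bubble_sort_comparisons(values):
    """Bubble sort with early exit; return (comparisons, passes)."""
    a = list(values)
    n = len(a)
    comparisons = passes = 0
    for end in range(n - 1, 0, -1):
        passes += 1
        swapped = False
        for i in range(end):
            comparisons += 1
            if a[i] > a[i + 1]:
                a[i], a[i + 1] = a[i + 1], a[i]
                swapped = True
        if not swapped:  # a pass without swaps: the array is sorted
            break
    return comparisons, passes


def selection_sort_comparisons(values):
    """Selection sort; return the number of comparisons (independent of input)."""
    a = list(values)
    n = len(a)
    comparisons = 0
    for start in range(n - 1):
        m = start
        for i in range(start + 1, n):  # scan the unsorted part for its minimum
            comparisons += 1
            if a[i] < a[m]:
                m = i
        a[start], a[m] = a[m], a[start]
    return comparisons


def max_left_distance(values):
    """Hypothetical helper: largest move towards the front (assumes distinct values)."""
    target = {v: i for i, v in enumerate(sorted(values))}
    return max(i - target[v] for i, v in enumerate(values))


n = 1000
values = random.sample(range(n), n)
k = max_left_distance(values)
comparisons, passes = bubble_sort_comparisons(values)
print(k, passes, comparisons)
print(selection_sort_comparisons(values), n * (n - 1) // 2)  # always equal
```

With early exit, the number of passes works out to $k+1$, or $n-1$ if $k = n-1$, because the last pass only confirms that no swaps are left.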

In summary, the asymptotic limit gives you a good feel for how the cost of an algorithm grows with the input size, but says nothing about the relative performance of different algorithms within the same complexity class.