First of all, the question is phrased incorrectly. The following are equivalent and correct expressions of the intended question:

- why must we use a prime number as the modulus of the hash value (not "in the hashing function")?

or equivalently and more succinctly:

- why must the size of a hash table be a prime number?

The proper answer to this question lies in the context, and that context is open addressing with double hashing collision resolution.

In that scheme, you compute two hash values for each key k:

hashval1 = hashfcn1(k) // for initial location

hashval2 = hashfcn2(k) // probing increment

hashval1 is used for the initial insertion probe. If that location is empty, then you insert the (k, v) and you’re done, never using hashval2. If that location is occupied, then you jump hashval2 addresses to (hashval1 + hashval2) % arraysize, and repeat to see if the location is empty. If that location is again not empty, then you jump another hashval2 addresses to (hashval1 + 2 * hashval2) % arraysize. And so on.
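The insertion procedure just described can be sketched roughly as follows. This is only an illustration: `hash1` and `hash2` are hypothetical stand-ins for hashfcn1 and hashfcn2, the table is assumed to be a Python list with at least two slots where `None` marks an empty slot, and the `1 + ... % (size - 1)` adjustment is one common way (an assumption here, not something stated above) to keep the increment in the range 1 to size - 1.

```python
def insert(table, key, value, hash1, hash2):
    """Insert (key, value) using double hashing; return the slot used.

    `table` is a list in which None marks an empty slot. `hash1` and
    `hash2` are caller-supplied functions (hypothetical stand-ins for
    hashfcn1 and hashfcn2). Returns None if no empty slot was found.
    """
    size = len(table)
    start = hash1(key) % size            # hashval1: initial location
    step = 1 + hash2(key) % (size - 1)   # hashval2: increment in 1..size-1
    for i in range(size):                # probe at most `size` times
        slot = (start + i * step) % size
        if table[slot] is None:
            table[slot] = (key, value)
            return slot
    return None                          # gave up after `size` probes
```

Note that the loop gives up after `size` probes, which is exactly the question the rest of this answer turns on.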

Here’s the key: HOW MANY TIMES DO YOU PROBE BEFORE GIVING UP?

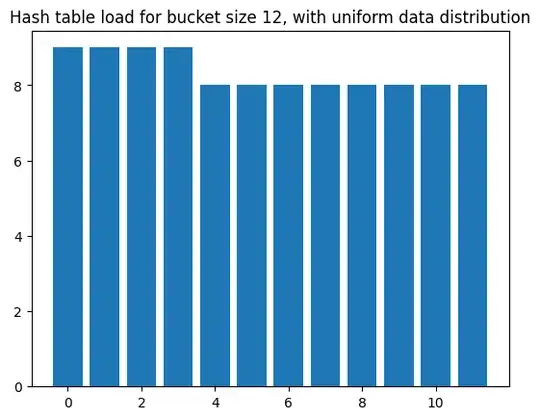

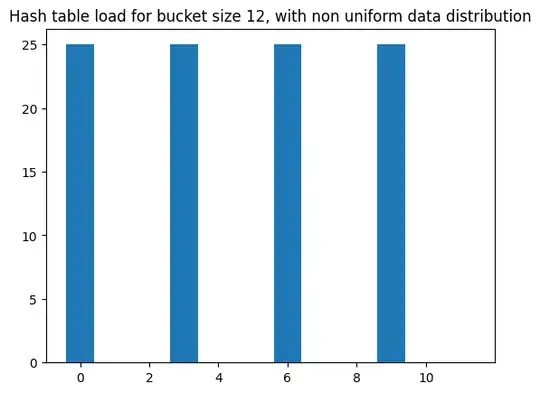

If every element of the array is potentially a target, then the answer is: you probe n times, where n is the array size. This is easy to visualize with linear probing: you write a for (i = 0; i < n; ++i) probing loop that breaks early if an empty slot is found. It’s no different with double hashing, except that the probe increment is hashval2 rather than 1. But this leads to a problem: you’re not guaranteed to probe every slot in the array when hashval2 > 1. Simple example: array size = 10 and hashval2 = 2. Here you only ever probe the even slots or the odd slots, depending on hashval1; in fact, in 10 probes you visit each of those 5 slots twice, which is pointless. Even if you make the array size an odd number like 15, if the probe increment is 5 then the probe sequence will be (let’s use hashval1 = 0): 0, 5, 10, 0, 5, 10, 0, 5, 10, 0, 5, 10, 0, 5, 10. You keep probing the same three occupied slots over and over.
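To see the two failing cases concretely, here is a small sketch that lists the slots visited for a given start, increment, and array size. The function name `probe_sequence` is made up for this illustration.

```python
def probe_sequence(start, step, size):
    """Return the `size` slots probed from `start` in increments of `step`."""
    return [(start + i * step) % size for i in range(size)]

# Array size 10, increment 2: only the even slots, each visited twice.
print(probe_sequence(0, 2, 10))               # [0, 2, 4, 6, 8, 0, 2, 4, 6, 8]

# Array size 15, increment 5: the same three slots, over and over.
print(sorted(set(probe_sequence(0, 5, 15))))  # [0, 5, 10]
```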

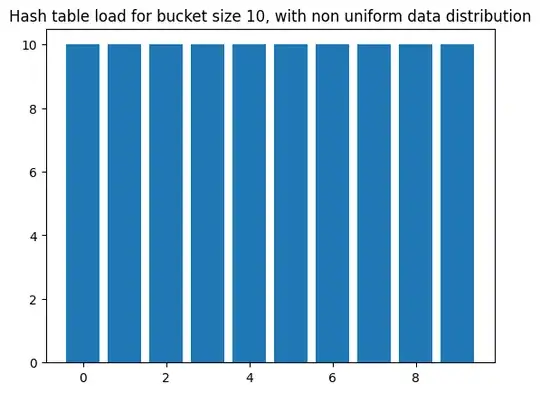

What’s the problem here? The problem is that the hash table size and hashval2 share a common factor greater than 1, in this case 5. So we need the hash table size and hashval2 to share no common factor other than 1. Since hashval2 is a function of k and, after the modulo, can be any value in the range 0 < hashval2 < array size, there’s nothing we can do about hashval2. That leaves the array size, which must be guaranteed to have no factor in common with any possible hashval2, i.e., with any integer from 1 to array size - 1. A composite array size always shares a factor with at least one of those integers (its own smallest prime factor, for example), so the array size must be a prime number.
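The common-factor argument can be checked numerically. A probe sequence with a fixed increment visits exactly size / gcd(increment, size) distinct slots, so it covers the whole table only when the gcd is 1. The sketch below uses hypothetical helper names and assumes, as above, that the increment may be any integer from 1 to size - 1.

```python
from math import gcd

def distinct_slots(step, size):
    """Number of different slots a probe sequence with this step can reach."""
    return size // gcd(step, size)

def every_step_covers_table(size):
    """True if every increment in 1..size-1 reaches all `size` slots."""
    return all(gcd(step, size) == 1 for step in range(1, size))

print(distinct_slots(5, 15))  # 3
print(distinct_slots(2, 10))  # 5

# Sizes below 20 for which no increment can get stuck in a short cycle:
print([m for m in range(2, 20) if every_step_covers_table(m)])
# [2, 3, 5, 7, 11, 13, 17, 19]
```

The sizes that pass the check are exactly the primes in that range.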

Example: Array size = 11 (a prime number). hashval1 = 1.

hashval2 = 1; // 1,2,3,4,5,6,7,8,9,10,0 <-- probing sequence

hashval2 = 2; // 1,3,5,7,9,0,2,4,6,8,10

hashval2 = 3; // 1,4,7,10,2,5,8,0,3,6,9

hashval2 = 4; // 1,5,9,2,6,10,3,7,0,4,8

hashval2 = 5; // 1,6,0,5,10,4,9,3,8,2,7

hashval2 = 6; // 1,7,2,8,3,9,4,10,5,0,6

hashval2 = 7; // 1,8,4,0,7,3,10,6,2,9,5

hashval2 = 8; // 1,9,6,3,0,8,5,2,10,7,4

hashval2 = 9; // 1,10,8,6,4,2,0,9,7,5,3

hashval2 = 10;// 1,0,10,9,8,7,6,5,4,3,2

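Every one of these sequences visits all 11 slots exactly once, so you never need more probes than there are slots in the hash table, and every slot is a candidate for hosting a value. And since the probe increment is itself a hash value, it is (with a reasonable hashfcn2) spread roughly uniformly over the possible increments, so keys that collide at the same initial slot usually follow different probe sequences. That avoids most of the clustering you get with collision resolution schemes such as linear probing.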

TL;DR: when the probe increment can be any value from 1 to size - 1, a hash table size that is a prime number is the ONLY way to guarantee that you do not accidentally re-probe a previously probed location before you have tried every slot.