(..) would it be viable to allocate a very large amount of memory (perhaps in a long loop) and use the errors that eventually occur as a source of randomness?

No. Practical use of memory errors as a source of randomness would require manipulation of DRAM timing parameters, as envisioned in this answer and it's reference.

The rate of memory error in a correctly working computer at ground level is very low; anything larger than one error detected per week (as reported by machine with ECC logging) is reason for maintenance [#]. Hence the proposed method requires a terminally impractical delay to get any entropy, or an unreliable computer; and for one well built, that mostly happens at altitude (especially in the South Atlantic Anomaly). Plus, if the machine has ECC, errors are hard to detect portably (reporting of ECC errors tends to be a proprietary mess); and if it is not, and there are sizably many errors, it is to fear one will eventually prevent the program from running as intended.

More practical sources of entropy include

- Audio or video input; more generally output of an ADC.

- Time of events measured to high accuracy relative to CPU clock (e.g. by way of a performance counter as obtained by RDTSC instruction or API to that). Sources include:

- key presses (value gives extra entropy)

- mouse/pointing device movement (position gives extra entropy)

- arrival of network packets

- availability of data from a spinning hard disk

- change of second in a real-time clock IC with independent crystal (low entropy rate, but quite reliably entropic)

Extracting the entropy is relatively easy: essentially, hash anything entropic you can get. The one extremely difficult thing is asserting a reliable lower bound of how much entropy was really gathered. Be extremely conservative, and don't assume that something that holds, will. Things change unexpectedly, especially when there's an active adversary, as we assume in crypto.

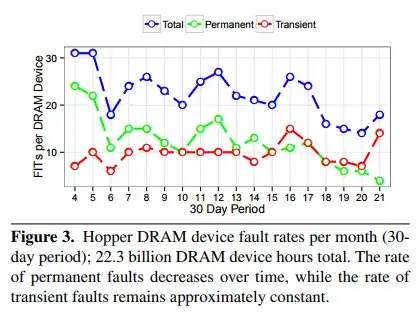

[#] Reliability studies on DRAM give widely varying estimates. However we are talking like 100 FIT par DRAM IC within a factor of 100, where Failure In Time is the expected number of failures per 109 device⋅hour. Taking the upper extreme 104 FIT, a computer with 4 DIMMs each with 18 DRAM ICs (enough for 32 GiB with ECC using DDR4 mainstream by late 2017), we get 1.2 expected failures per week.

My limited field experience with servers is that when there are ECC alarms more often than once per week, there's a cause, most often a mismatch in memory specs or settings (for new machines only) or a particular bit in one particular IC of a particular DIMM that's marginal (or worn out, for machines that have been humming quietly for some time). The error log's main virtue is to help identifying such condition, and what DIMM to replace rather than scrapping the whole lot.

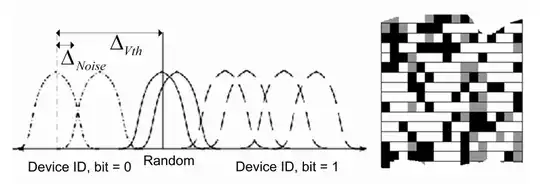

There are techniques to purposely increase the rate of occurrence of DRAM errors; including lowering the refresh rate much below manufacturer specs, and/or using a RowHammer read/write pattern. However that's extremely dependent on DRAM model. Doing this reliably, portably and within an OS is very difficult; there's a reason MemTest wants to own as much of the machine as feasible.