No. There are no common floating point PRNGs.

If you search the literature, you'll find few PRNGs that use native floating-point computation. This not-so-cunning program illustrates why they are unreliable across different architectures. Try the following Perl on i386 hardware:

```perl
for ($i = 0; $i < 1000; $i = $i + 0.1) {}

print "$i";
```

The answer is:

```
1000.00000000016
```

The (unexpected) extra 16 at the end is accumulated rounding error: 0.1 has no exact binary representation, so each of the 10,000 additions rounds the running total. How that rounding plays out is highly dependent on the underlying hardware and software.
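A quick way to see the representation problem, sketched in Python under the assumption of ordinary IEEE 754 doubles: `Decimal` and `Fraction` both convert the stored double exactly, so they expose what the literal `0.1` really holds. Adding it ten times shows the error accumulating.

```python
from decimal import Decimal
from fractions import Fraction

# The literal 0.1 is stored as the nearest binary double, not as one tenth.
tenth = 0.1
print(Decimal(tenth))   # exact decimal expansion of the stored double
print(Fraction(tenth))  # exact ratio: an integer over a power of two

# Each addition rounds the running total again, so the errors accumulate.
total = 0.0
for _ in range(10):
    total += tenth
print(total == 1.0, total)
```

The denominator of the fraction is a power of two, which is why no finite binary mantissa can hold one tenth exactly.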

Repeating with:

```python
i = 0

while i < 1000:
    i = i + 0.1

print(i)
```

I get:

```
1000.0000000001588
```

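Where has the 16 gone, given that I added up exactly the same numbers, this time using Python rather than Perl? In fact it hasn't gone anywhere: both languages hold the same double here, but Perl prints it to 15 significant digits, so 1000.0000000001588 is displayed as 1000.00000000016. On Atmel, however, the answer is a round 1000.00. A CSPRNG is validated against an output run of megabytes of data, and with rounding behaviour that varies from platform to platform like this, it would be difficult to validate on different platforms. As a relevant aside, this is why currency calculations are performed with integers.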
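As a sketch, assuming CPython on a platform with IEEE 754 doubles, both printed forms can be produced from one value: `repr` gives Python 3's shortest round-tripping string, while `"%.15g"` mimics Perl's typical default of 15 significant digits. The integer loop at the end illustrates the currency trick by counting in whole tenths.

```python
# Same loop as the Perl and Python snippets: add 0.1 until reaching 1000.
i = 0.0
while i < 1000:
    i = i + 0.1

shortest = repr(i)       # Python 3: shortest string that round-trips to i
perl_like = "%.15g" % i  # 15 significant digits, like Perl's default print

# Integer alternative: count in tenths and convert only for display.
tenths = 0
while tenths < 10000:
    tenths += 1

print(shortest, perl_like, tenths / 10)
```

The integer counter stops at exactly 10000, with no rounding involved until the final division for display.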

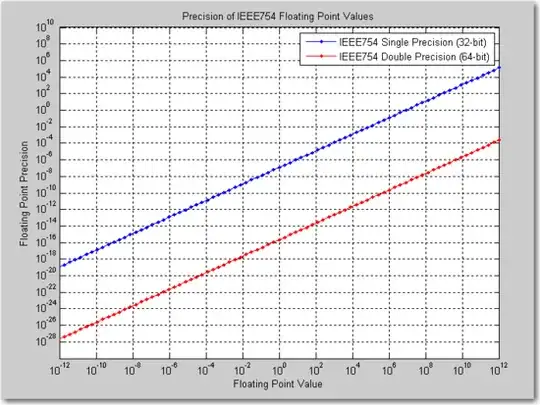

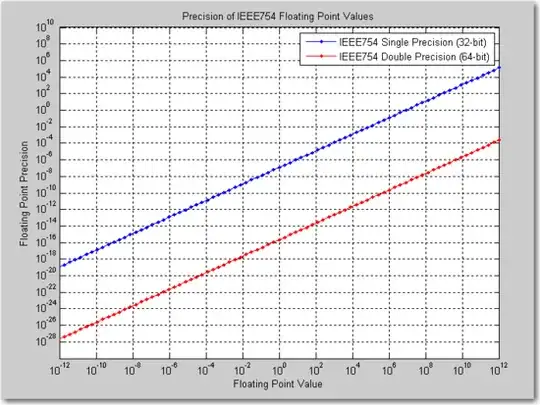

Another unexpected issue with floating point is that bit wise adjacent numbers aren't the same numerical distance apart. One single adjacent bit change can alter a floating point number by a difference that can vary by an astonishing 24 orders of magnitude. This is better represented with picture like so:-
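A minimal Python sketch of that effect, assuming 64-bit IEEE 754 doubles: the hypothetical helper `next_by_bits` reinterprets a positive double as an unsigned 64-bit integer, adds one, and converts back. The gap to that neighbour depends on the exponent of the starting value.

```python
import struct


def next_by_bits(x: float) -> float:
    """Return the double whose bit pattern is one greater than x's (x > 0 assumed)."""
    (bits,) = struct.unpack("<Q", struct.pack("<d", x))
    (nxt,) = struct.unpack("<d", struct.pack("<Q", bits + 1))
    return nxt


# The gap to the bitwise-next value grows with the magnitude (exponent) of x.
for x in (0.001, 1.0, 1000.0):
    print(f"{x:g}: gap to next bit pattern = {next_by_bits(x) - x:.3e}")
```

On Python 3.9 and later, `math.nextafter(x, math.inf)` returns the same neighbour for positive finite `x` without the bit twiddling.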

Generators like RC4 and ISAAC (to name two) are built entirely around array indirection, and array indices have no meaning unless they're integers. The commonly used left and right bit shifts are also meaningless on a floating-point number, because shifting the raw bits would smear them across the sign, exponent and mantissa fields and break the storage format.
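To show how much RC4 leans on integer indexing, here is a short Python sketch of its key scheduling and output loops. It is included only for illustration, since RC4 is no longer considered secure. Every array access uses an integer reduced mod 256, and the output byte itself is found by indirection. A float such as `1.0` cannot even be used as a Python list index; `S[1.0]` raises `TypeError`.

```python
def rc4_keystream(key: bytes, n: int) -> bytes:
    """Classic RC4: key scheduling (KSA) followed by n bytes of output (PRGA)."""
    S = list(range(256))
    j = 0
    # KSA: permute S using integer indices derived from the key.
    for i in range(256):
        j = (j + S[i] + key[i % len(key)]) % 256
        S[i], S[j] = S[j], S[i]

    out = bytearray()
    i = j = 0
    # PRGA: each output byte is fetched by double indirection, S[S[i] + S[j]].
    for _ in range(n):
        i = (i + 1) % 256
        j = (j + S[i]) % 256
        S[i], S[j] = S[j], S[i]
        out.append(S[(S[i] + S[j]) % 256])
    return bytes(out)


print(rc4_keystream(b"Key", 10).hex())
```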

Of course, you can always roll your own construction that produces random-looking output. For example, hashing the output of my example would produce a cryptographically irreversible number stream. So you'd have:

Hash(Perl program output).
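A hypothetical Python version of that construction could look like the sketch below. It is not taken from any real generator. The helper `float_hash_stream` hashes the printed value of the loop counter at each step with SHA-256. Because the digest covers the exact printed digits, changing only the formatting (Python's `repr` versus Perl-style 15 significant digits) already changes part of the stream.

```python
import hashlib
from typing import List


def float_hash_stream(step: float, n: int, fmt: str = "{!r}") -> List[bytes]:
    """Hypothetical Hash(program output): SHA-256 of each printed loop value."""
    out = []
    i = 0.0
    for _ in range(n):
        i += step
        # The digest depends on the exact printed digits, so any difference in
        # rounding or formatting between platforms changes the stream.
        out.append(hashlib.sha256(fmt.format(i).encode()).digest())
    return out


a = float_hash_stream(0.1, 5)             # repr formatting
b = float_hash_stream(0.1, 5, "{:.15g}")  # Perl-like formatting
print([x.hex()[:8] for x in a])
print([x.hex()[:8] for x in b])
```

The first two values print identically both ways ("0.1" and "0.2"), so their digests match. The third sum is 0.30000000000000004, which the two formats print differently, and the streams diverge from there.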

However, the rounding error will bite you, as it forms an error bias that is deterministic only for your specific machine. So the number stream will be useless for all but the most trivial of applications, and speed is kind of irrelevant. There aren't any floating-point CSPRNGs out there.