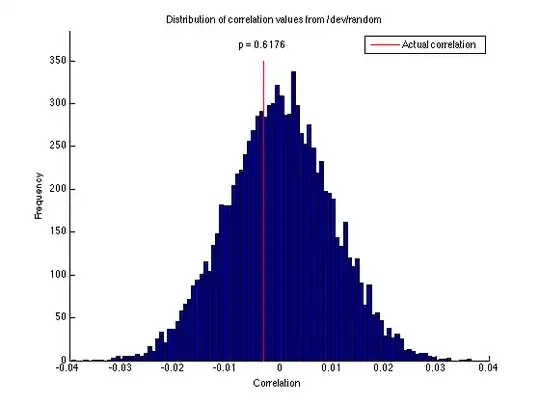

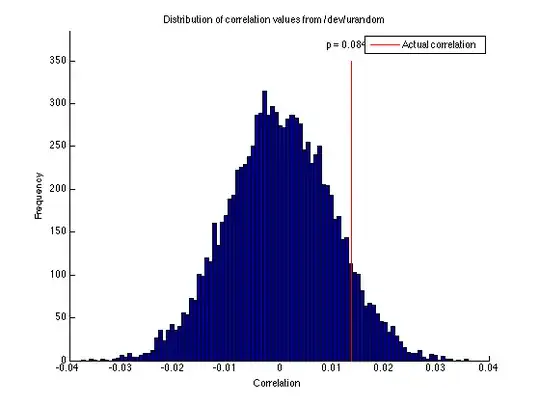

This question is inspired by an RNG question and some of the comments on one of its answers. I have recently been designing hardware random number generators, and my results are significantly better than what I get from /dev/random and /dev/urandom. I took 10,000 samples each from /dev/random and /dev/urandom; the results are shown in the images above.

I ran this a few dozen times and the results were about the same each time, with /dev/random doing better than /dev/urandom, but that's not the crux of my curiosity.
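The post doesn't say which statistic produced those results. As a minimal sketch, assuming "correlation" means the lag-k sample autocorrelation of the raw bytes, the measurement could be reproduced like this. The helper names `read_device` and `autocorrelation` are hypothetical, and reading raw bytes (values 0..255) is an assumption about the sample format.

```python
import math


def read_device(path="/dev/urandom", n_bytes=10_000):
    """Read n_bytes from a Unix random device and return them as ints 0..255."""
    with open(path, "rb") as f:
        data = f.read(n_bytes)
    return list(data)


def autocorrelation(xs, lag):
    """Lag-k sample autocorrelation: mean-centred covariance over total variance."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs)
    if var == 0:
        return 0.0  # constant sequence: correlation is undefined, report 0
    cov = sum((xs[i] - mean) * (xs[i + lag] - mean) for i in range(n - lag))
    return cov / var


if __name__ == "__main__":
    xs = read_device("/dev/urandom")  # or "/dev/random"
    # Approximate 95% band for i.i.d. data of this length.
    band = 1.96 / math.sqrt(len(xs))
    for lag in range(1, 6):
        r = autocorrelation(xs, lag)
        status = "within" if abs(r) < band else "outside"
        print(f"lag {lag}: r = {r:+.4f} ({status} +/-{band:.4f})")
```

Computing several lags rather than only lag 1 helps catch periodic structure that adjacent-sample correlation alone would miss.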

Mathematically, of course, a truly random sequence should be completely uncorrelated. My question is whether there is a maximum acceptable correlation for a set of random numbers. From a hardware standpoint, I can save a lot of power if my numbers are slightly less random, which is a very appealing idea for me. I don't know if it matters, but I use my random number generator for an ECC engine.
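For reference, one common way to put a number on "correlation" is the lag-k sample autocorrelation $r_k$ of a sequence $x_1, \dots, x_N$ (an assumption here, since the post doesn't name its statistic). For an i.i.d. sequence and large $N$, $r_k$ is approximately normal with mean close to 0 and variance about $1/N$. Values inside roughly $\pm 1.96/\sqrt{N}$ are therefore statistically indistinguishable from zero at the 95% level. For the 10,000-sample runs above:

$$
r_k = \frac{\sum_{i=1}^{N-k} (x_i - \bar{x})(x_{i+k} - \bar{x})}{\sum_{i=1}^{N} (x_i - \bar{x})^2},
\qquad
|r_k| \lesssim \frac{1.96}{\sqrt{N}} = \frac{1.96}{\sqrt{10\,000}} = 0.0196
$$

This is only a detection threshold for a statistical test. It says nothing about unpredictability, which is a separate requirement for cryptographic use.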

Update: It seems that the /dev/random and /dev/urandom differences I saw were outputs from the same device, just with less entropy. My working theory is that the second read from the device has less entropy, but still more than the OS minimum requirement. Either way, my question stands: what is the maximum acceptable correlation in a series of "random" numbers?