I'll try to take a stab at this. From Apple's iOS Security Guide, we learn that

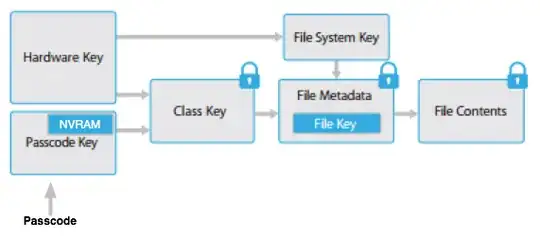

> The metadata of all files in the file system is encrypted with a random key, which is created when iOS is first installed or when the device is wiped by a user. The file system key is stored in Effaceable Storage. Since it’s stored on the device, this key is not used to maintain the confidentiality of data;

and

> The content of a file is encrypted with a per-file key, which is wrapped with a class key and stored in a file’s metadata, which is in turn encrypted with the file system key. The class key is protected with the hardware UID and, for some classes, the user’s passcode. This hierarchy provides both flexibility and performance. For example, changing a file’s class only requires rewrapping its per-file key, and a change of passcode just rewraps the class key.

In other words: by physically being in possession of the device, you should easily be able to get the file system key (take out the flash storage and read the key off it). Let's call the file system key $K_{FS}$. Additionally, every file $f$ is encrypted using a per-file key $K_f$. To get $K_f$, we need the file system key ($K_{FS}$) and the "class key"; let's call the class key $K_C$.

So, there's a function $F_1$ which, given the file, the file system key, and the class key, gives you the file key: $K_f = F_1(f, K_{FS}, K_C)$. That means that for the FBI to decrypt a specific file, they'd need both $K_{FS}$ (which, as noted, can be read off the device) and $K_C$.

So they don't have $K_C$, afaik. Right, how do you normally get that class key? The document says it's protected by the "hardware UID" ($K_{UID}$) and (sometimes) the user's passcode $K_P$. So there's another function, $F_2(K_{UID}, K_P)$, which, given the hardware UID and (sometimes) the passcode, returns the class key.
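To make the two functions concrete, here is a toy model of the hierarchy as I read it. It is only a sketch: `keystream_xor` is a made-up stand-in for the real AES wrapping, PBKDF2 is a placeholder for however the passcode and UID are actually combined, and all names (`f1`, `f2`, `k_uid`, ...) are mine, not Apple's.

```python
import hashlib
import os


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy cipher (NOT AES): XOR data with a SHA-256-derived keystream.

    Applying it twice with the same key returns the original data.
    """
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        chunk = data[offset:offset + 32]
        out.extend(b ^ k for b, k in zip(chunk, block))
    return bytes(out)


def f2(k_uid: bytes, passcode: str) -> bytes:
    """F_2: class key K_C from hardware UID and passcode (placeholder KDF)."""
    return hashlib.pbkdf2_hmac("sha256", passcode.encode(), k_uid, 1000)


def f1(encrypted_metadata: bytes, k_fs: bytes, k_c: bytes) -> bytes:
    """F_1: per-file key K_f from the file's encrypted metadata."""
    wrapped_key = keystream_xor(k_fs, encrypted_metadata)  # strip K_FS layer
    return keystream_xor(k_c, wrapped_key)                 # unwrap with K_C


# Build a toy "device" with a single encrypted file.
k_uid = os.urandom(32)   # fused into the silicon
k_fs = os.urandom(32)    # sits in Effaceable Storage
k_c = f2(k_uid, "1234")  # class key
k_f = os.urandom(32)     # per-file key
metadata = keystream_xor(k_fs, keystream_xor(k_c, k_f))
ciphertext = keystream_xor(k_f, b"secret note")

# Decrypting needs both K_FS and K_C, exactly as described above.
recovered_k_f = f1(metadata, k_fs, k_c)
plaintext = keystream_xor(recovered_k_f, ciphertext)
```

With the right $K_{FS}$ and $K_C$ the file key comes back and the content decrypts; with a class key derived from a wrong passcode you only get garbage.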

Assuming the FBI can easily brute force the passcode ($K_P$), all they need is the hardware UID ($K_{UID}$). Unfortunately for them, that is apparently fused into the silicon and therefore hard to access:

> The device’s unique ID (UID) and a device group ID (GID) are AES 256-bit keys fused (UID) or compiled (GID) into the application processor and Secure Enclave during manufacturing. No software or firmware can read them directly; they can see only the results of encryption or decryption operations performed by dedicated AES engines implemented in silicon using the UID or GID as a key. Additionally, the Secure Enclave’s UID and GID can only be used by the AES engine dedicated to the Secure Enclave. The UIDs are unique to each device and are not recorded by Apple or any of its suppliers. The GIDs are common to all processors in a class of devices (for example, all devices using the Apple A8 processor), and are used for non security-critical tasks such as when delivering system software during installation and restore. Integrating these keys into the silicon helps prevent them from being tampered with or bypassed, or accessed outside the AES engine. The UIDs and GIDs are also not available via JTAG or other debugging interfaces.
>
> The UID allows data to be cryptographically tied to a particular device. For example, the key hierarchy protecting the file system includes the UID, so if the memory chips are physically moved from one device to another, the files are inaccessible. The UID is not related to any other identifier on the device.

So, if I read that correctly, the function $F_2$ seems to be implemented in hardware and is accessible only as $F_2'(K_P)$. Note that $F_2'$ takes a single parameter, the user's passcode. In other words, $F_2'(K_P) = F_2(K_{UID}, K_P)$: the hardware supplies the UID automatically. On iPhones without a Secure Enclave (like the 5C), you can probably brute force it with a custom iOS version that lets you call $F_2'$ as often as you want. In a normal iOS, presumably only the lock screen can run $F_2'$, and it stops you from doing that by imposing ever-increasing delays (and, potentially, an eventual device wipe). On an iPhone with a Secure Enclave (5S, 6 (+), 6S (+)), the Secure Enclave itself prevents you from running this function repeatedly, so not even the iOS kernel can run $F_2'$ without delays.
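Here is a rough sketch of that split between "the hardware knows the UID" and "the OS decides how often you may ask". Everything in it is hypothetical: the class names, the SHA-256 verifier used to recognise the right class key, and especially the delay schedule, which I made up for illustration and which is not Apple's actual policy.

```python
import hashlib
import hmac
import os


class AesEngine:
    """Hypothetical stand-in for the hardware that holds K_UID."""

    def __init__(self) -> None:
        self._k_uid = os.urandom(32)  # fused at manufacture; no getter exists

    def f2_prime(self, passcode: str) -> bytes:
        # F_2'(K_P) = F_2(K_UID, K_P); PBKDF2 is only a placeholder here.
        return hashlib.pbkdf2_hmac("sha256", passcode.encode(), self._k_uid, 1000)


class LockScreen:
    """Hypothetical OS-level gatekeeper in front of F_2'."""

    def __init__(self, engine: AesEngine, key_check: bytes, max_failures: int = 10) -> None:
        self.engine = engine
        self.key_check = key_check  # toy verifier: SHA-256 of the right class key
        self.max_failures = max_failures
        self.failures = 0
        self.wiped = False

    def required_delay_seconds(self) -> int:
        # Made-up schedule: a few free tries, then doubling delays.
        # This sketch only reports the delay; it does not actually sleep.
        return 0 if self.failures < 4 else 60 * 2 ** (self.failures - 4)

    def try_unlock(self, passcode: str) -> bool:
        if self.wiped:
            raise RuntimeError("device has been wiped")
        candidate = hashlib.sha256(self.engine.f2_prime(passcode)).digest()
        if hmac.compare_digest(candidate, self.key_check):
            self.failures = 0
            return True
        self.failures += 1
        self.wiped = self.failures >= self.max_failures
        return False


engine = AesEngine()
check = hashlib.sha256(engine.f2_prime("4821")).digest()
screen = LockScreen(engine, check)

for guess in ["0000", "1111", "2222", "3333", "4444"]:
    screen.try_unlock(guess)
delay_after_five_failures = screen.required_delay_seconds()
```

The point of the sketch is that nothing in `AesEngine` limits the number of calls; the limit lives entirely in `LockScreen`. Anyone who can call `engine.f2_prime` directly skips the delays and the wipe, which is what a custom OS build would amount to on a device without a Secure Enclave.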

Given that the iPhone in question seems to be a 5C, the FBI only seems to need a special version of iOS from Apple which allows them to call $F_2'$ repeatedly, without delays, with automatically supplied passcodes. That should give the FBI 10,000 (assuming a 4-digit passcode) or 1,000,000 (assuming a 6-digit passcode) candidate "class keys". They can then just try each of the class keys, and one of them will give them the data.
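The brute-force loop itself is trivial once $F_2'$ can be called freely. A minimal sketch, with the same caveats as before: `make_f2_prime` and `brute_force` are hypothetical names, PBKDF2 stands in for the real derivation, and comparing against a known target key stands in for "try the class key and see whether the data decrypts". The 80 ms per attempt is the figure I recall Apple's guide giving for one passcode derivation; treat it as an assumption.

```python
import hashlib
import itertools
import os


def make_f2_prime(k_uid: bytes):
    """Return F_2' with the UID baked in, as the hardware would expose it."""
    def f2_prime(passcode: str) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", passcode.encode(), k_uid, 100)
    return f2_prime


def brute_force(f2_prime, is_correct_key, digits: int = 4):
    """Try every numeric passcode of the given length; return the match or None."""
    for combo in itertools.product("0123456789", repeat=digits):
        passcode = "".join(combo)
        if is_correct_key(f2_prime(passcode)):
            return passcode
    return None


f2_prime = make_f2_prime(os.urandom(32))
target_key = f2_prime("7391")  # stands in for "the key that decrypts the data"
found = brute_force(f2_prime, lambda key: key == target_key)

# Worst-case time if every attempt must run on the device itself.
SECONDS_PER_TRY = 0.08  # assumption: roughly 80 ms per derivation
worst_case_4_digits = 10**4 * SECONDS_PER_TRY  # seconds
worst_case_6_digits = 10**6 * SECONDS_PER_TRY  # seconds
```

Under that assumption, a 4-digit passcode falls in about 13 minutes at worst and a 6-digit one in about 22 hours. Since the UID never leaves the chip, the loop has to run on the phone itself; it can't be farmed out to faster hardware.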

It is irrelevant for this particular question, but I think it is still interesting: does the Secure Enclave change anything here? At first sight, obviously yes: a new iOS version wouldn't be good enough, because the Secure Enclave would prevent you from running $F_2'$ in a loop to brute force the passcode. But the Secure Enclave runs some kind of firmware too, so this limit could probably be lifted by updating the Secure Enclave. So far it's unclear to me whether Apple can do that without wiping all the content from the Secure Enclave. Different sources claim different things. This article covers that. In particular, it cites a tweet from John Kelley, who apparently used to work in Embedded Security at Apple. He claims:

> [...] I have no clue where they got the idea that changing SPE firmware will destroy keys. SPE FW is just a signed blob on iOS System Part

In other words: even with a newer iPhone, Apple could just deploy a special firmware to the Secure Enclave (SPE) which would allow $F_2'$ to be run as often as the FBI pleases.

I hope this helps. All the information is taken from the iOS Security Guide and my interpretation of it.

edit: Because of the comment asking how my answer answers the question, I should indeed state that more clearly. IMHO the FBI is asking Apple because they need to run $F_2'$ very often without delays so that they can brute force the iPhone's passcode. For the iPhone 5C, a new version of iOS should be enough, as only the OS prevents you from doing that (you don't have programmatic access to $F_2'$ without special privileges). For newer iPhones, the Secure Enclave apparently prevents it as well. The FBI can't compile its own hacked version of iOS without this restriction because the iPhone only runs code signed by Apple, and the FBI (probably) does not have Apple's signing keys.

Please do comment if you know or think that I misinterpreted anything or got anything wrong.