I am reading through Brady Neal's "Introduction to Causality" course textbook and have got to Section 3.6 where Berkson's paradox is discussed. Neal provides the following toy example:

$$ X_{1} \sim \mathcal{N}(0,1) \\ X_{3} \sim \mathcal{N}(0,1) \\ X_{2} = X_{1} + X_{3} $$

He then proceeds to compute the covariance of $X_{1}$ and $X_{3}$ as a sanity check:

$$ \text{Cov}(X_{1}, X_{3}) = \mathbb{E}[X_{1}X_{3}] - \mathbb{E}[X_{1}]\mathbb{E}[X_{3}] = \mathbb{E}[X_{1}X_{3}] = \mathbb{E}[X_{1}]\mathbb{E}[X_{3}] = 0 $$

where we used the independence of $X_{1}$ and $X_{3}$. Next, Neal computes the conditional covariance given that $X_{2} = x$:

$$ \text{Cov}(X_{1}, X_{3} \,|\, X_{2} = x) = \mathbb{E}[X_{1}X_{3} \,|\, X_{2} = x] = \mathbb{E}[X_{1}(x - X_{1})] = x\mathbb{E}[X_{1}] - \mathbb{E}[X^{2}_{1}] = -1 $$

Is this correct?

When I do my own calculation I seem to get the following result:

$$ \text{Cov}(X_{1}, X_{3} \,|\, X_{2} = x) = \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] - \mathbb{E}[X_{1} \,|\,X_{2}=x]\mathbb{E}[X_{3}\,|\,X_{2}=x] $$

Consider each factor separately in the second term:

$$ \mathbb{E}[X_{1} \,|\, X_{2} = x] = \mathbb{E}[X_{1} \,|\, X_{1} + X_{3} = x] = \mathbb{E}[x - X_{3}] = x - \mathbb{E}[X_{3}] $$

Likewise we have

$$ \mathbb{E}[X_{3} \,|\, X_{2} = x] = x - \mathbb{E}[X_{1}] $$

Multiplying both terms we have:

$$ \mathbb{E}[X_{1} \,|\,X_{2}=x]\mathbb{E}[X_{3}\,|\,X_{2}=x] = (x - \mathbb{E}[X_{3}])(x - \mathbb{E}[X_{1}]) = x^{2} $$

Now consider the first term:

$$ \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] = \mathbb{E}[X_{1}X_{3}\,|\, X_{1} + X_{3} = x] = \\ \mathbb{E}[X_{1}(x - X_{1})] = x\mathbb{E}[X_{1}] - \mathbb{E}[X_{1}^{2}] = 0 - 1 = -1 $$

Putting everything together we have:

$$ \text{Cov}(X_{1}, X_{3} \,|\, X_{2} = x) = \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] - \mathbb{E}[X_{1} \,|\,X_{2}=x]\mathbb{E}[X_{3}\,|\,X_{2}=x] = -1 - x^{2} $$

Am I doing something wrong? I am concerned that the author has forgotten that the expectations in the second term are conditional, and has therefore set the second term to zero as in the unconditional case. I may also be using the wrong definition of conditional covariance, although the book does not give an explicit definition.

Note that this example is an attempt to model a collider where $X_{1}$ and $X_{3}$ are parents of $X_{2}$.

EDIT: Both the textbook and I are wrong!

Thanks to Henry, whose answer I have accepted below, for pointing this out. I thought I would correct my approach using Henry's working to highlight my errors.

As before we have:

$$ \text{Cov}(X_{1}, X_{3} \,|\, X_{2} = x) = \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] - \mathbb{E}[X_{1} \,|\,X_{2}=x]\mathbb{E}[X_{3}\,|\,X_{2}=x] $$

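Let's deal with the second term first. Since $X_{1}$ and $X_{3}$ are identically distributed and enter $X_{2}$ symmetrically, their conditional means are equal, so we have:

$$ \mathbb{E}[X_{1} \,|\,X_{2}=x]\mathbb{E}[X_{3}\,|\,X_{2}=x] = \mathbb{E}[X_{1}\,|\,X_{2}=x]^{2} $$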

Applying the first formula derived by Henry in this question we have

$$ \mathbb{E}[X_{1}\,|\,X_{2}=x]^{2} = \frac{x^{2}}{4} $$
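
As a quick sketch of where the $x/2$ comes from (a symmetry argument of my own, which may differ from how Henry derives it): $X_{1}$ and $X_{3}$ are i.i.d., so they have the same conditional mean given their sum, and the two conditional means must add up to $x$:

$$ \mathbb{E}[X_{1}\,|\,X_{2}=x] + \mathbb{E}[X_{3}\,|\,X_{2}=x] = \mathbb{E}[X_{1} + X_{3}\,|\,X_{2}=x] = x \quad\Longrightarrow\quad \mathbb{E}[X_{1}\,|\,X_{2}=x] = \mathbb{E}[X_{3}\,|\,X_{2}=x] = \frac{x}{2} $$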

Now for the first term we have

$$ \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] = \mathbb{E}[(x-X_{3})X_{3}\,|\, X_{2} = x] = x\mathbb{E}[X_{3}\,|\, X_{2} = x] - \mathbb{E}[X_{3}^{2}\,|\, X_{2} = x] $$

Note that the conditioning remains inside the expectation, since $X_{3}$ is still conditioned on $X_{2}$. Dropping it is what caused the issue with my analysis! Following the same logic as above with $X_{3}$ in place of $X_{1}$, we have:

$$ \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] = \frac{x^{2}}{2} - \mathbb{E}[X_{3}^{2}\,|\, X_{2} = x] $$

Adding and subtracting $\mathbb{E}[X_{3}\,|\,X_{2} = x]^{2}$ we have

$$ \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] = \frac{x^{2}}{2} - (\mathbb{E}[X_{3}^{2}\,|\, X_{2} = x] - \mathbb{E}[X_{3}\,|\,X_{2} = x]^{2}) - \mathbb{E}[X_{3}\,|\,X_{2} = x]^{2} $$

Observe that the term in the brackets is simply the conditional variance of $X_{3}$. Hence, using the second identity provided by Henry in the aforementioned question we have:

$$ \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] = \frac{x^{2}}{2} - \frac{1}{2} - \mathbb{E}[X_{3}\,|\,X_{2} = x]^{2} $$
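
The value $1/2$ for the conditional variance can be checked with the standard conditioning formula for jointly normal variables (a sketch, assuming that formula, and not necessarily the route Henry takes). Since $\text{Cov}(X_{3}, X_{2}) = \text{Cov}(X_{3}, X_{1} + X_{3}) = 1$ and $\text{Var}(X_{2}) = 2$:

$$ \text{Var}(X_{3} \,|\, X_{2} = x) = \text{Var}(X_{3}) - \frac{\text{Cov}(X_{3}, X_{2})^{2}}{\text{Var}(X_{2})} = 1 - \frac{1}{2} = \frac{1}{2} $$

Note that this does not depend on $x$.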

Recall that we have already calculated the leftover term (with $X_{1}$ in place of $X_{3}$). Plugging in our solution, we have:

$$ \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] = \frac{x^{2}}{2} - \frac{1}{2} - \frac{x^{2}}{4} = \frac{x^{2}}{4} - \frac{1}{2} $$

Putting everything together we end up with:

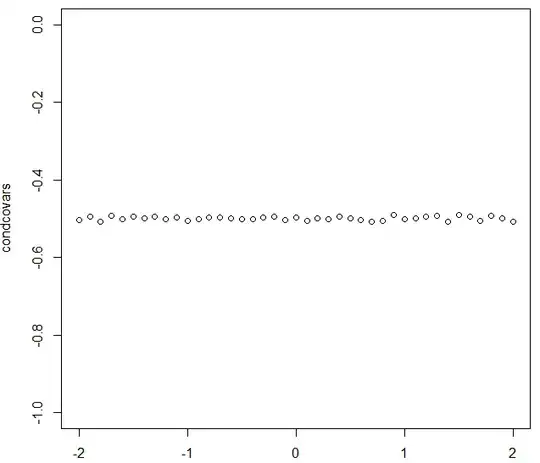

$$ \text{Cov}(X_{1}, X_{3} \,|\, X_{2} = x) = \mathbb{E}[X_{1}X_{3}\,|\, X_{2} = x] - \mathbb{E}[X_{1} \,|\,X_{2}=x]\mathbb{E}[X_{3}\,|\,X_{2}=x] \\ = \frac{x^{2}}{4} - \frac{1}{2} - \frac{x^{2}}{4} = -\frac{1}{2} $$

Finally, we arrive at the correct result! Note that I have left out some details regarding how the conditional expectations and variances taken from Henry's question are calculated, although I believe a question which presents the working for a similar problem is linked there. I may add these derivations later, but for now I am happy to assume that Henry is a divine oracle capable of correctly computing the conditional moments of normal distributions :).
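
For anyone who wants a numerical sanity check of the $-1/2$, here is a minimal Monte Carlo sketch. It is my own illustration, not something from the book or from Henry's answer. Conditioning on the exact event $X_{2} = x$ has probability zero, so the code approximates it by keeping draws with $|X_{2} - x| < \varepsilon$. The function name `conditional_cov`, the window `eps`, the sample size, and the seed are all arbitrary choices of mine.

```python
import random


def conditional_cov(x, n_samples=400_000, eps=0.1, seed=0):
    """Estimate E[X1 | X2 ~ x], E[X3 | X2 ~ x] and Cov(X1, X3 | X2 ~ x).

    X1 and X3 are independent standard normals and X2 = X1 + X3.
    The event X2 = x is approximated by the window |X2 - x| < eps,
    which introduces a small bias that shrinks as eps -> 0.
    """
    rng = random.Random(seed)
    kept = []
    for _ in range(n_samples):
        x1 = rng.gauss(0.0, 1.0)
        x3 = rng.gauss(0.0, 1.0)
        if abs(x1 + x3 - x) < eps:  # keep only draws where X2 is close to x
            kept.append((x1, x3))

    n = len(kept)
    m1 = sum(a for a, _ in kept) / n
    m3 = sum(b for _, b in kept) / n
    # Sample covariance of the retained (X1, X3) pairs.
    cov = sum((a - m1) * (b - m3) for a, b in kept) / (n - 1)
    return m1, m3, cov


if __name__ == "__main__":
    for x in (0.0, 1.0, 2.0):
        m1, m3, cov = conditional_cov(x)
        print(f"x={x}: mean X1 ~ {m1:.3f}, mean X3 ~ {m3:.3f}, cov ~ {cov:.3f}")
```

If the derivation above is right, the two conditional means should come out near $x/2$ and the covariance near $-1/2$ for every $x$, up to Monte Carlo noise and the small bias from the finite window.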