The definition in my book is as follows:

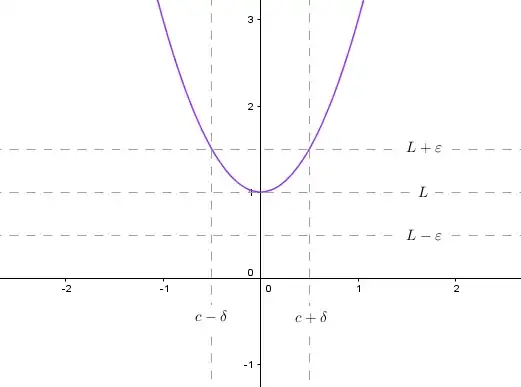

Let $f$ be a function defined on an open interval containing $c$ (except possibly at $c$) and let $L$ be a real number. The statement $$\lim_{x \to c} f(x) = L$$ means that for each $\epsilon>0$ there exists a $\delta>0$ such that if $0<|x-c|<\delta$, then $|f(x)-L|<\epsilon$.
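As a concrete illustration of the definition, here is a minimal Python sketch. The example function $f(x) = 2x + 1$, the point $c = 1$, the limit $L = 3$, and the candidate $\delta = \epsilon/2$ are all assumptions chosen for illustration and do not come from the book. The helper `delta_works` is a hypothetical name, and it only samples finitely many points, so it is a numerical sanity check rather than a proof.

```python
def delta_works(f, c, L, eps, delta, samples=1000):
    """Check on sampled points that 0 < |x - c| < delta gives |f(x) - L| < eps.

    Only finitely many x values are tested, so True is evidence, not a proof.
    """
    for k in range(1, samples + 1):
        # offset lies strictly between 0 and delta, so 0 < |x - c| < delta holds
        offset = delta * k / (samples + 1)
        for x in (c - offset, c + offset):
            if not abs(f(x) - L) < eps:
                return False
    return True


def f(x):
    # Assumed example function; its limit as x -> 1 is 3.
    return 2 * x + 1


# For each epsilon, test the candidate delta = epsilon / 2.
for eps in (1.0, 0.1, 0.01):
    print(eps, delta_works(f, c=1.0, L=3.0, eps=eps, delta=eps / 2))
```

For this particular $f$, $|f(x) - 3| = 2|x - 1|$, which is why $\delta = \epsilon/2$ is a natural candidate to try; other functions would need a different choice.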

With the definition the way it is, I don't see how choosing a smaller and smaller $\epsilon$ implies a smaller and smaller $\delta$.

To me, in order to produce that implication, we would need to restrict $\epsilon$ to be small enough that $f$ is strictly increasing or strictly decreasing wherever its values lie in $(L-\epsilon, L+\epsilon)$, and we would need to define increasing/decreasing without the use of derivatives. However, that is not part of the definition.

P.S. Please refrain from using too much logic notation; I am not familiar with most of the symbols, such as the upside-down A.