In a decision tree, Gini impurity [1] is a metric that estimates how mixed the classes in a node are. It measures the probability that the tree is wrong when it labels an item by sampling a class at random from the class distribution of this node:

$$ I_g(p) = 1 - \sum_{i=1}^J p_i^2 $$

If we have 80% of class C1 and 20% of class C2, labelling randomly then yields a Gini impurity of $1 - 0.8^2 - 0.2^2 = 0.32$.
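To make the arithmetic concrete, here is a minimal sketch of the formula above. The function name `gini_impurity` is hypothetical, and it assumes the node's class proportions are passed in as a list that sums to 1.

```python
def gini_impurity(proportions):
    """Hypothetical helper: 1 - sum_i p_i^2, i.e. the probability of mislabeling
    when the label is sampled from the node's own class distribution."""
    if abs(sum(proportions) - 1.0) > 1e-9:
        raise ValueError("proportions must sum to 1")
    return 1.0 - sum(p * p for p in proportions)


# The example from the text: 80% C1, 20% C2
print(round(gini_impurity([0.8, 0.2]), 4))  # 0.32
```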

However, randomly assigning a class according to this distribution seems like a poor strategy compared with simply assigning the most represented class in the node (in the example above, you would label C1 every time and get only 20% error instead of 32%).

In that case, I would be tempted to use the following as the metric instead, since it is exactly the probability of mislabeling under that majority-class strategy:

$$ I_m(p) = 1 - \max_i [ p_i] $$
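A minimal sketch of $I_m$, under the same assumption that class proportions are given as a list; `misclassification_error` is a hypothetical name, not a library function.

```python
def misclassification_error(proportions):
    """Hypothetical helper: 1 - max_i p_i, the error rate when the node
    always predicts its most represented class."""
    return 1.0 - max(proportions)


print(round(misclassification_error([0.8, 0.2]), 4))       # 0.2
print(round(misclassification_error([0.5, 0.3, 0.2]), 4))  # 0.5
```

The second call shows that the formula also applies unchanged to more than two classes.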

Is there a deeper reason to use Gini, or a good reason not to use this approach instead? (In other words, Gini seems to overestimate the mislabelings that will actually happen, doesn't it?)

[1] https://en.wikipedia.org/wiki/Decision_tree_learning#Gini_impurity

EDIT: Motivation

Suppose you have two classes $C_1$ and $C_2$, with probabilities $p_1$ and $p_2$ ($1 \ge p_1 \ge 0.5 \ge p_2 \ge 0$, $p_1 + p_2 = 1$).

You want to compare the strategy "always label $C_1$" with the strategy "label $C_1$ with probability $p_1$ and $C_2$ with probability $p_2$". Their probabilities of success are respectively $p_1$ and $p_1^2 + p_2^2$.

We can rewrite the second one as:

$$ p_1^2 + p_2^2 = p_1^2 + 2p_1p_2 - 2p_1p_2 + p_2^2 = (p_1 + p_2)^2 - 2p_1p_2 = 1 - 2p_1p_2 $$

Subtracting it from $p_1$, and using $p_1 - 1 = -p_2$:

$$ p_1 - (1 - 2p_1p_2) = p_1 - 1 + 2p_1p_2 = 2p_1p_2 - p_2 = p_2 ( 2p_1 - 1) $$
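As a sanity check, plugging in the opening example ($p_1 = 0.8$, $p_2 = 0.2$) gives the same numbers on both sides of each step:

$$
\begin{aligned}
p_1^2 + p_2^2 &= 0.8^2 + 0.2^2 = 0.68 = 1 - 2(0.8)(0.2) \\
p_1 - (p_1^2 + p_2^2) &= 0.8 - 0.68 = 0.12 = 0.2\,(2 \cdot 0.8 - 1)
\end{aligned}
$$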

Since $p_1 \ge 0.5$ and $p_2 \ge 0$, we have $p_2 ( 2p_1 - 1) \ge 0$, and thus:

$$ p_1 \ge p_1^2 + p_2^2 $$

So always choosing the most probable class is never worse than random labelling, and it is strictly better unless $p_1 = 0.5$ or $p_1 = 1$.
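The inequality can also be checked numerically. The sketch below is an illustration only: the helper names are hypothetical, it assumes $p_1 \ge 0.5$ as in the setup, and the Monte Carlo part simply draws the true class and the random guess independently from the same distribution.

```python
import random


def success_majority(p1):
    """Hypothetical helper: success rate of 'always label C1' (assumes p1 >= 0.5)."""
    return p1


def success_random(p1):
    """Hypothetical helper: success rate of 'label C1 w.p. p1, C2 w.p. p2' = p1^2 + p2^2."""
    p2 = 1.0 - p1
    return p1 ** 2 + p2 ** 2


def simulate_random(p1, trials=100_000, seed=0):
    """Monte Carlo estimate of the random-labelling success rate."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        true_is_c1 = rng.random() < p1   # true class drawn from the node
        guess_is_c1 = rng.random() < p1  # predicted label drawn independently
        hits += true_is_c1 == guess_is_c1
    return hits / trials


# Check p1 >= p1^2 + p2^2, and that the gap equals p2 (2 p1 - 1), for p1 in [0.5, 1]
for k in range(51):
    p1 = 0.5 + k / 100
    gap = success_majority(p1) - success_random(p1)
    assert gap >= -1e-12, p1
    assert abs(gap - (1 - p1) * (2 * p1 - 1)) < 1e-12

print(success_majority(0.8), round(success_random(0.8), 4), simulate_random(0.8))
```

The simulated value should land close to the exact $p_1^2 + p_2^2$, while the majority strategy stays at $p_1$.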

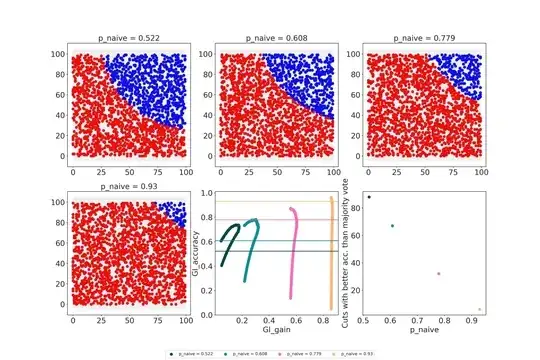

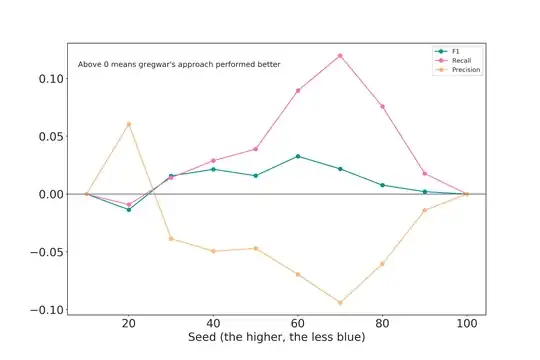

EDIT: Choosing an attribute

Suppose now that we have $n_1$ items in $C_1$ and $n_2$ items in $C_2$, and we have to choose which attribute $a \in A$ best splits the node. For a given attribute, let $n^v$ be the number of items that take value $v$, of which $n^v_1$ are in $C_1$ and $n^v_2$ are in $C_2$. I propose using the score

$$ \sum_v \frac{n^v}{n_1 + n_2} \frac{\max(n^v_1, n^v_2)}{n^v_1 + n^v_2} $$

as a splitting criterion instead of Gini.
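A minimal sketch of this score for one candidate attribute. It assumes the split is described as a dict mapping each value $v$ to the pair $(n^v_1, n^v_2)$; `majority_score` and the example counts are hypothetical.

```python
def majority_score(counts_by_value):
    """Hypothetical helper: sum over v of (n^v / (n1 + n2)) * max(n^v_1, n^v_2) / n^v.

    counts_by_value maps each attribute value v to the pair (n^v_1, n^v_2).
    """
    total = sum(n1 + n2 for n1, n2 in counts_by_value.values())
    score = 0.0
    for n1, n2 in counts_by_value.values():
        nv = n1 + n2
        if nv == 0:
            continue  # an empty branch contributes nothing
        score += (nv / total) * (max(n1, n2) / nv)
    return score


# Hypothetical attribute with values "a" and "b": (C1 count, C2 count)
split = {"a": (30, 10), "b": (5, 15)}
print(round(majority_score(split), 4))  # 0.75
```

Each term is the weight of branch $v$ times the accuracy of predicting that branch's majority class, so the score is the overall accuracy after the split.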

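Note that since $n^v = n^v_1 + n^v_2$, each term simplifies to $\max(n^v_1, n^v_2) / (n_1 + n_2)$, and since $n_1 + n_2$ does not depend on the chosen attribute, maximizing the score is equivalent to maximizing

$$ \sum_v \max(n^v_1, n^v_2) $$

which can simply be interpreted as the number of items at this node that will be correctly classified when each resulting child predicts its majority class.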
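Using the simplified count, choosing an attribute reduces to an argmax over integer counts. This is a sketch under the same hypothetical dict layout ($v \mapsto (n^v_1, n^v_2)$); the attribute names and counts below are made up for illustration.

```python
def majority_count(counts_by_value):
    """Hypothetical helper: sum over v of max(n^v_1, n^v_2), the number of items
    correctly classified if each child predicts its majority class."""
    return sum(max(n1, n2) for n1, n2 in counts_by_value.values())


def best_attribute(splits):
    """Pick the attribute whose split maximizes the majority count.

    splits maps an attribute name to {value: (n^v_1, n^v_2)}; ties go to the
    first attribute encountered.
    """
    return max(splits, key=lambda a: majority_count(splits[a]))


# Hypothetical node with n1 = 35, n2 = 25 and two candidate attributes
splits = {
    "color": {"red": (30, 10), "blue": (5, 15)},
    "size": {"small": (20, 12), "large": (15, 13)},
}
for name, split in splits.items():
    print(name, majority_count(split))  # color 45, then size 35
print(best_attribute(splits))  # color
```

Dividing a count by $n_1 + n_2 = 60$ recovers the normalized score (e.g. $45 / 60 = 0.75$ for `color`), so both forms rank the attributes identically.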