I am trying to use the MNIST dataset to train a convolutional neural network to classify the digits shown in color blindness charts. As some people have suggested, I have tried adjusting the brightness and contrast and converting to grayscale, but the results are inconsistent because the charts differ so much from one another.

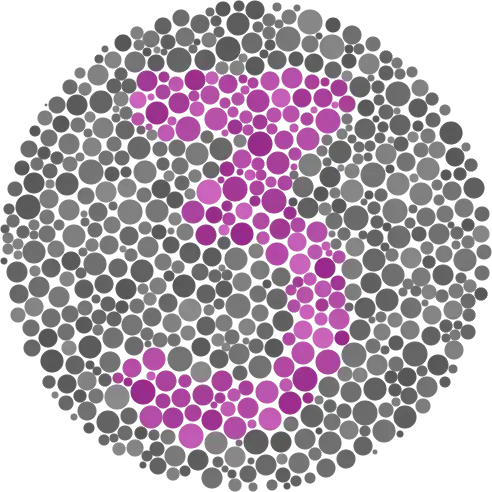

I am looking for general ideas about approaches I can try. Would style transfer make sense? Here is an example of an easy chart I want to be able to classify:

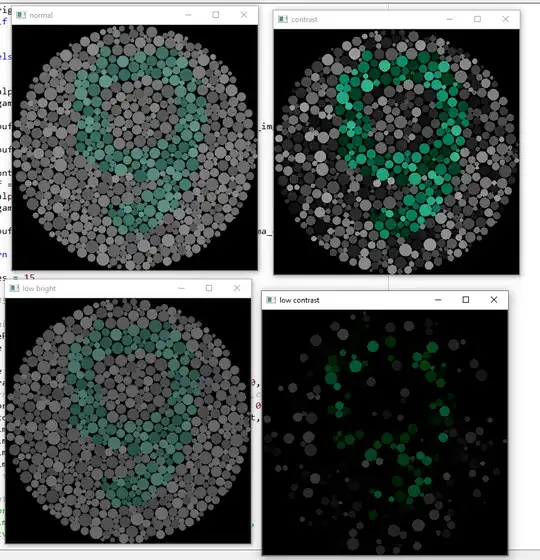

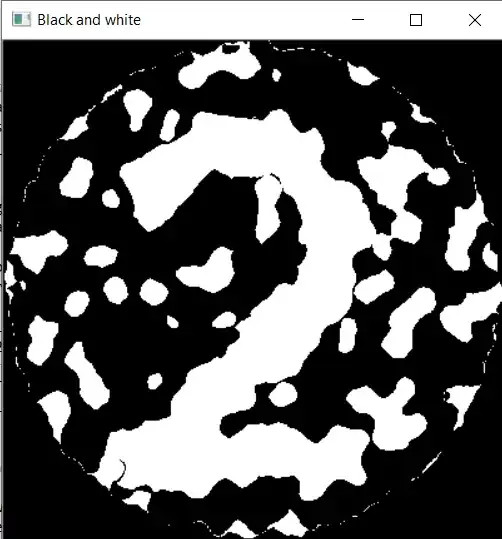

I tried various transformations and got results such as these. Although these are easier for me, a mildly colorblind person, to read, they are still nowhere near MNIST.

TLDR: I don’t have a dataset of color blindness charts, but MNIST is readily available. I’m trying to use MNIST (probably after transforming it) to train a neural network that can classify the few color blindness charts I have. MNIST and the charts look very different, so I need to make them similar enough that an NN trained on the modified MNIST can predict the digits in the charts.

EDIT:

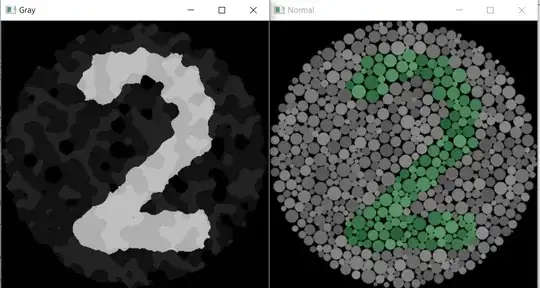

I tried the suggestions and applied various OpenCV transformations to the images. At the end, everything is resized and converted to grayscale. My accuracy went up from 11% (on unprocessed images) to 33% on the new images.

My problem is finding a set of transformations that works universally. My transformations work well for some images:

How can I improve this? Here are the transformations I do:

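    import cv2
    import imutils

    # CONTRASTER and CLUSTERER are helper objects defined elsewhere (not shown).
    image = cv2.imread(imagePath)
    image = imutils.resize(image, height=400)
    contrasted_img = CONTRASTER.apply(image, 0, 60)  # applies contrast
    median = cv2.medianBlur(contrasted_img, 15)
    # The third positional argument of GaussianBlur is sigmaX, not the border type;
    # sigmaX=0 derives sigma from the kernel size.
    blur_median = cv2.GaussianBlur(median, (3, 3), 0, borderType=cv2.BORDER_DEFAULT)
    clustered = CLUSTERER.apply(blur_median, 5)  # k-means clustering with k = 5
    gray = cv2.cvtColor(clustered, cv2.COLOR_BGR2GRAY)  # RESULT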
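The `CLUSTERER` helper is not shown here. For readers who want to reproduce the clustering step, the following is a minimal NumPy sketch of k-means color quantization, which is one way such a step could work. The function name `kmeans_quantize` and its parameters are hypothetical and are not necessarily what `CLUSTERER.apply` does.

```python
import numpy as np


def kmeans_quantize(image, k=5, iters=10, seed=0):
    """Replace every pixel by the mean color of its k-means cluster.

    Hypothetical stand-in for the clustering step; `image` is an
    H x W x C uint8 array (for example a BGR image loaded with OpenCV).
    """
    rng = np.random.default_rng(seed)
    pixels = image.reshape(-1, image.shape[-1]).astype(np.float32)

    # Initialise the centers from distinct colors so no two centers coincide.
    unique_colors = np.unique(pixels, axis=0)
    k = min(k, len(unique_colors))
    centers = unique_colors[rng.choice(len(unique_colors), size=k, replace=False)]

    for _ in range(iters):
        # Assign each pixel to its nearest center (squared Euclidean distance).
        dists = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of the pixels assigned to it.
        for j in range(k):
            members = pixels[labels == j]
            if len(members):
                centers[j] = members.mean(axis=0)

    quantized = centers[labels].round().astype(np.uint8)
    return quantized.reshape(image.shape)
```

With `k=5` the output contains at most five distinct colors, which is what makes the later grayscale conversion produce a few flat gray levels instead of a noisy gradient.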

SECOND EDIT:

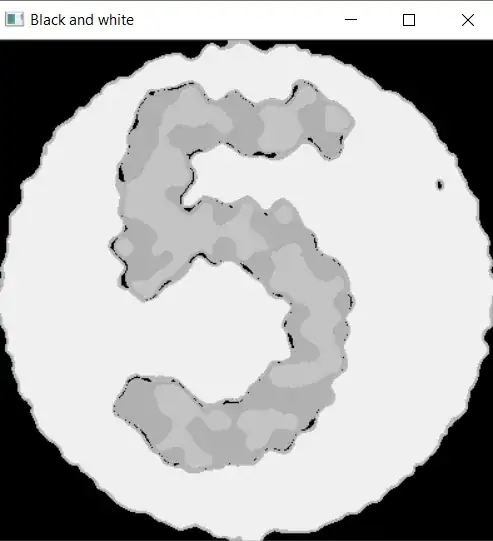

Following the advice I received, I calculated that the digit usually takes up roughly 10% to 35% of the image. So I raise the black-and-white threshold until the white fraction of the image falls in that range. This resulted in images like this:
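The threshold search described above can be sketched roughly as follows. This is a simplified NumPy version, not my exact code: the function name `threshold_for_white_fraction` and the returned `in_range` flag are made up for illustration, and it assumes the digit is brighter than the background.

```python
import numpy as np


def threshold_for_white_fraction(gray, low=0.10, high=0.35):
    """Raise the threshold until the white fraction drops to `high` or below.

    `gray` is a 2-D uint8 array. Returns (threshold, mask, in_range), where
    `mask` is a 0/255 image and `in_range` tells whether the final white
    fraction actually landed inside [low, high].
    """
    for threshold in range(256):
        mask = gray > threshold          # white = pixels brighter than threshold
        fraction = float(mask.mean())    # share of white pixels in the image
        if fraction <= high:
            break

    # A large jump between gray levels can skip the window entirely,
    # so report whether the target range was really hit.
    in_range = low <= fraction <= high
    return threshold, mask.astype(np.uint8) * 255, in_range
```

When `in_range` comes back `False`, the image has no threshold that isolates 10% to 35% of the pixels, which is usually a sign that the digit and the background were not separated well by the earlier steps.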

The accuracy of the NN went up to 45%! Another amazing improvement. However, my biggest issue is with images where the digit is darker than the background:

This results in incorrect thresholds. I also have some noise issues, but these are less common and can be fixed by tuning my percent white and threshold:
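One idea I am considering for the dark-digit case is to threshold both the image and its inverse and keep whichever result looks more like a centered digit. Below is a minimal sketch of that heuristic. It rests on an untested assumption that the digit sits near the middle of the chart while background dots are spread over the whole image, and the names `central_share` and `pick_polarity` are hypothetical.

```python
import numpy as np


def central_share(mask):
    """Share of the white pixels that fall inside the central half of the image."""
    h, w = mask.shape
    center = mask[h // 4: h - h // 4, w // 4: w - w // 4]
    total = mask.sum()
    return float(center.sum()) / float(total) if total else 0.0


def pick_polarity(gray, target=0.25):
    """Threshold `gray` and its inverse, keep the more centered foreground.

    `gray` is a 2-D uint8 array and `target` is the largest white fraction
    allowed. Returns (name, mask) where name is "bright_digit" or
    "dark_digit" and mask is a 0/255 uint8 image with the digit in white.
    """
    candidates = []
    for name, img in (("bright_digit", gray), ("dark_digit", 255 - gray)):
        # Keep only pixels brighter than the (1 - target) quantile, so at
        # most a `target` share of the image ends up white.
        cutoff = np.quantile(img, 1.0 - target)
        mask = img > cutoff
        candidates.append((central_share(mask), name, mask))

    # Assumption: the polarity with the more centered foreground is the digit.
    _, name, mask = max(candidates, key=lambda c: c[0])
    return name, mask.astype(np.uint8) * 255
```

Because the output always has the digit in white on black, it matches MNIST's polarity regardless of which way round the original chart was.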