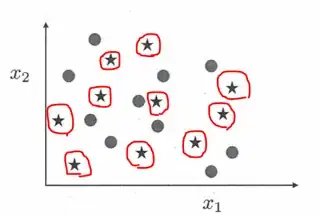

Suppose I have some data set with two classes. I could draw a decision boundary around each data point belonging to one of these classes, and hence, separate the data, like so:

Where the red lines are the decision boundaries around the data points belonging to the star class.
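The construction above can be sketched in Python. This is a minimal illustration, assuming two-dimensional points given as tuples. The function name `ball_classifier` and the toy data are hypothetical and are not the data set from the figure. The idea: put a small disc around every star, with a radius of half the smallest star-to-circle distance, so that no disc can reach a circle.

```python
import math

def ball_classifier(stars, circles):
    """Return a classifier that labels a point 'star' iff it lies strictly
    within radius r of some star training point, where r is half the
    smallest star-to-circle distance (hypothetical helper)."""
    min_gap = min(math.dist(s, c) for s in stars for c in circles)
    if min_gap == 0:
        # A star and a circle have identical features: no boundary works.
        raise ValueError("a star and a circle share identical features")
    r = min_gap / 2

    def classify(p):
        # Inside any star's disc -> star; everything else -> circle.
        return "star" if any(math.dist(p, s) < r for s in stars) else "circle"

    return classify

# Hypothetical toy data (not the data set from the figure).
stars = [(1.0, 2.0), (3.0, 1.0), (2.5, 3.5)]
circles = [(1.5, 1.0), (3.0, 3.0), (0.5, 0.5)]
classify = ball_classifier(stars, circles)
print([classify(p) for p in stars + circles])
# prints ['star', 'star', 'star', 'circle', 'circle', 'circle']
```

Every training point is classified correctly, which is exactly the "separation" being described. The union of discs is a (very) non-linear boundary that simply memorises the training set.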

Obviously, this model overfits very badly, but have I not nevertheless shown that this data set is separable?

I ask because a question in an exercise book reads: "Is the above data set separable? If it is, is it linearly separable or non-linearly separable?"

I would answer: "Yes, it is separable, but non-linearly separable."

No answers are provided, so I can't check, but my reasoning seems sound.

The only exception I can see is when two data points belong to different classes but have identical features, for instance, if one of the stars in the figure above perfectly overlapped one of the circles. I suppose this is quite rare in practice. Hence my question: is nearly all data separable?
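The exception described above can be checked directly: a finite labelled data set admits some (possibly very convoluted) separating boundary exactly when no feature vector appears with more than one label. Below is a minimal sketch, assuming points are sequences of hashable feature values. The function name `conflicting_points` and the example data are hypothetical.

```python
from collections import defaultdict

def conflicting_points(points, labels):
    """Return the feature vectors that occur with more than one class label.
    An empty result means the classes can be separated by some boundary;
    a non-empty result means no boundary can separate them."""
    seen = defaultdict(set)
    for x, y in zip(points, labels):
        # Group labels by exact feature vector.
        seen[tuple(x)].add(y)
    return [x for x, ys in seen.items() if len(ys) > 1]

# Hypothetical example: a star and a circle share the features (1.0, 2.0).
points = [(1.0, 2.0), (3.0, 1.0), (1.0, 2.0)]
labels = ["star", "circle", "circle"]
print(conflicting_points(points, labels))  # prints [(1.0, 2.0)]
```

Note that this only tests for exact duplicates. It says nothing about whether the data are *linearly* separable, which is a much stronger condition.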