For a recommendation system, I'm using cosine similarity to compare items. However, I'd like to bin items with little data under a general "average" category (in the everyday, not the mathematical, sense). To do this, I'm currently trying to create a synthetic observation that represents that middle-of-the-road point.

So, for example, if these were my observations (rows are observations, columns are features):

    [[0, 0, 0, 1, 1, 1, 0, 1, 0],
     [1, 0, 1, 0, 0, 0, 1, 0, 0],
     [1, 1, 1, 1, 0, 1, 0, 1, 1],
     [0, 0, 1, 0, 0, 1, 0, 1, 0]]

Simply taking the mean of each feature across all observations would generate the following synthetic data point, which I would then append to the matrix before computing the similarities:

    [0.5, 0.25, 0.75, 0.5, 0.25, 0.75, 0.25, 0.75, 0.25]

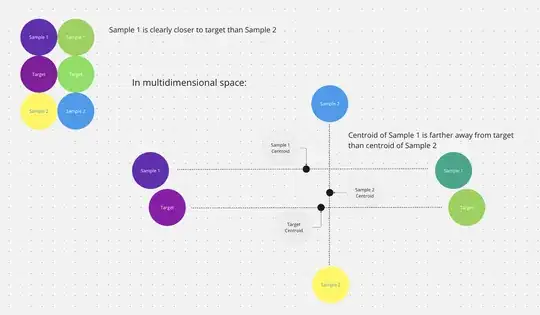
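A minimal NumPy sketch of the mean-based strategy described above, assuming the observations fit in a dense array. It computes the per-feature mean, appends it as an extra row, and builds the row-wise cosine similarity matrix. The helper `cosine_similarity_matrix` is a hypothetical name used only for illustration, and it assumes no row is all zeros (cosine similarity is undefined for a zero vector).

```python
import numpy as np

# Observations from the question: rows are observations, columns are features.
X = np.array([
    [0, 0, 0, 1, 1, 1, 0, 1, 0],
    [1, 0, 1, 0, 0, 0, 1, 0, 0],
    [1, 1, 1, 1, 0, 1, 0, 1, 1],
    [0, 0, 1, 0, 0, 1, 0, 1, 0],
], dtype=float)

# Synthetic "average" observation: the mean of each feature over all rows.
avg = X.mean(axis=0)

# Append the synthetic row so it takes part in the similarity computation.
X_aug = np.vstack([X, avg])


def cosine_similarity_matrix(M):
    """Pairwise cosine similarity between the rows of M (hypothetical helper).

    Assumes no row of M is all zeros.
    """
    # Scale every row to unit L2 length; dot products of unit rows are cosines.
    unit = M / np.linalg.norm(M, axis=1, keepdims=True)
    return unit @ unit.T


S = cosine_similarity_matrix(X_aug)

print("synthetic average row:", avg)
# Last row of S: similarity of each observation (and the average itself) to the average.
print("similarity to average:", np.round(S[-1], 3))
```

The last row (or column) of `S` gives each item's similarity to the synthetic point. If scikit-learn is available, `sklearn.metrics.pairwise.cosine_similarity(X_aug)` should produce the same matrix.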

While this might work well with some metrics (e.g. L1 distance), I suspect there are better approaches for cosine similarity. At the moment, though, I'm having trouble reasoning about angles between vectors in high-dimensional space.
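To make the geometry a little more concrete, the sketch below measures the cosine between each observation and a candidate synthetic point. It illustrates two facts about cosine similarity that hold in any number of dimensions. First, rescaling the synthetic point by a positive constant changes nothing, so only its direction matters. Second, for unit vector u, the sum of cos(x_i, u) equals u dotted with the sum of the normalized rows x_i/||x_i||, so the mean of the L2-normalized rows is the direction that maximizes the total cosine similarity to the observations. That normalized-mean point is an alternative added here purely for comparison, not something from the original question, and the helper `cosine` is hypothetical.

```python
import numpy as np

# Observations from the question: rows are observations, columns are features.
X = np.array([
    [0, 0, 0, 1, 1, 1, 0, 1, 0],
    [1, 0, 1, 0, 0, 0, 1, 0, 0],
    [1, 1, 1, 1, 0, 1, 0, 1, 1],
    [0, 0, 1, 0, 0, 1, 0, 1, 0],
], dtype=float)


def cosine(a, b):
    """Cosine of the angle between a and b (hypothetical helper; assumes neither is all zeros)."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Candidate 1: the plain per-feature mean, as in the question.
mean_vec = X.mean(axis=0)
cos_mean = np.array([cosine(x, mean_vec) for x in X])

# Scaling the synthetic point leaves every angle unchanged: only direction matters.
cos_scaled = np.array([cosine(x, 10.0 * mean_vec) for x in X])

# Candidate 2 (assumption, for comparison only): the mean of the L2-normalized rows.
# It is the direction that maximizes the summed cosine similarity to all rows.
unit_rows = X / np.linalg.norm(X, axis=1, keepdims=True)
centroid = unit_rows.mean(axis=0)
cos_centroid = np.array([cosine(x, centroid) for x in X])

print("scale-invariant:", np.allclose(cos_mean, cos_scaled))
print("cos to plain mean:     ", np.round(cos_mean, 3))
print("cos to normalized mean:", np.round(cos_centroid, 3))
print("summed cosines:", round(float(cos_mean.sum()), 3), round(float(cos_centroid.sum()), 3))
```

Comparing the two rows of cosines shows how much (or how little) the choice of "average" direction changes each item's similarity to it.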

Any ideas?