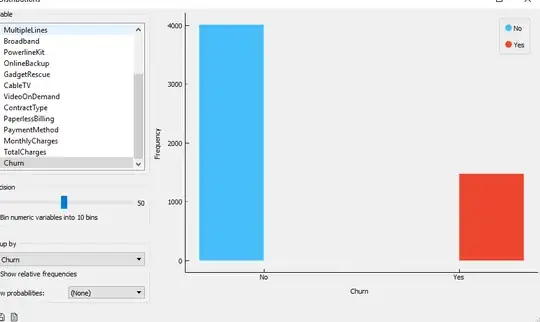

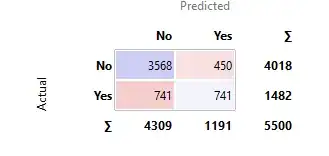

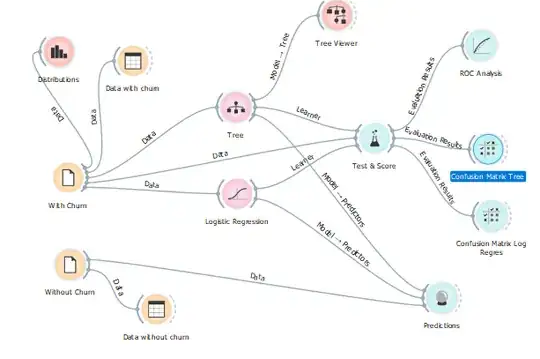

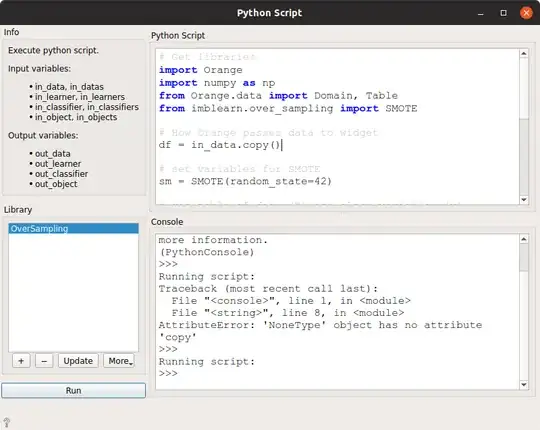

Orange Data Mining is not suitable for most tasks because it lacks features. You can use it if you are a strong Python coder, because you have to add all the missing features yourself in Python Script widgets. Imbalanced datasets are not the exception but the reality, and Orange doesn't handle them well. That is why you see AUCs like these: no matter which model you use, you get a poor AUC on validation data, meaning the model finds hits by chance only. I have tested this a lot. I use Optuna (via its CLI) to tune hyperparameters for CatBoost with SMOTE, and the tuning reports a high AUC. When I then test those hyperparameters on validation data, the AUC is poor, around 0.5 (50%), so again the model finds hits by chance only. In my experience, ROC AUC measured on train and test data is a poor metric and says nothing about unseen data. The more imbalanced the dataset, the worse the AUC gets, and you always get a higher AUC on the majority class (I have never seen a raw dataset that was balanced).

The only strength Orange has is easy data manipulation and visualization. Use Keras, PyTorch or H2O instead. In my opinion those should be integrated into Orange, but they are not. Sadly, if you want more out of data mining, coding is the only choice. Choose an IDE with an AI assistant, but be aware that AI assistants are also weak at coding: you get roughly 30 to 70% usable help out of them. Many are commercial, some are free but still need commercial API keys, and some IDEs have GUIs bloated far beyond what an end user needs. So there are a lot of hurdles here, and almost every time you may need professional help; you won't solve it alone.
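One common cause of the "high AUC during tuning, about 0.5 on validation" pattern is oversampling before the data is split, so that copies of the same minority rows land in both the training and the test part. Whether that is what happened in the runs described above is an assumption; nothing in the post confirms it. The sketch below uses only the Python standard library and deliberately swaps in simpler stand-ins: random duplication instead of SMOTE, a 1-nearest-neighbour scorer instead of CatBoost, and pure-noise features so that any AUC well above 0.5 can only come from leakage. All function names are made up for illustration.

```python
import random

def roc_auc(labels, scores):
    """ROC AUC as P(random positive outscores random negative); ties count half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def make_noise_data(n, pos_rate, rng, dims=5):
    """Features are pure noise, so no honest model should beat AUC 0.5 by much."""
    X = [[rng.random() for _ in range(dims)] for _ in range(n)]
    y = [1 if rng.random() < pos_rate else 0 for _ in range(n)]
    return X, y

def oversample(X, y, rng):
    """Duplicate minority rows until classes are balanced (stand-in for SMOTE)."""
    pos = [i for i, label in enumerate(y) if label == 1]
    neg = [i for i, label in enumerate(y) if label == 0]
    extra = [rng.choice(pos) for _ in range(len(neg) - len(pos))]
    idx = list(range(len(y))) + extra
    return [X[i] for i in idx], [y[i] for i in idx]

def split(X, y, rng, test_frac=0.3):
    """Shuffle row indices and cut them into a train part and a test part."""
    idx = list(range(len(y)))
    rng.shuffle(idx)
    cut = int(len(idx) * (1 - test_frac))
    tr, te = idx[:cut], idx[cut:]
    return ([X[i] for i in tr], [y[i] for i in tr],
            [X[i] for i in te], [y[i] for i in te])

def nn_scores(X_train, y_train, X_test):
    """Score each test row with the label of its nearest training row."""
    def dist(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))
    return [y_train[min(range(len(X_train)), key=lambda j: dist(X_train[j], x))]
            for x in X_test]

rng = random.Random(0)
X, y = make_noise_data(600, 0.1, rng)  # roughly 10% positives

# Leaky: oversample first, then split. Duplicates end up on both sides.
Xo, yo = oversample(X, y, rng)
Xtr, ytr, Xte, yte = split(Xo, yo, rng)
leaky_auc = roc_auc(yte, nn_scores(Xtr, ytr, Xte))

# Honest: split first, oversample the training part only.
Xtr, ytr, Xte, yte = split(X, y, rng)
Xtr_o, ytr_o = oversample(Xtr, ytr, rng)
honest_auc = roc_auc(yte, nn_scores(Xtr_o, ytr_o, Xte))

print(f"oversample before split: AUC = {leaky_auc:.2f}")
print(f"oversample train only:   AUC = {honest_auc:.2f}")
```

If the first number comes out far above the second, the gap is an artifact of the evaluation setup and not of the model. With real SMOTE the usual remedy is the same: resample inside each training fold only (for example with an imbalanced-learn pipeline) and keep the validation data untouched.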