I am just getting started with the multi-layer perceptron (MLP). I got the accuracy shown below when classifying the DEAP data with an MLP, but I have no idea how to adjust the hyperparameters to improve the result.

Here are the details of my code and the result:

from sklearn.neural_network import MLPClassifier

import numpy as np

import scipy.io

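# `data` is assumed to be loaded beforehand (presumably with scipy.io.loadmat; the loading step is not shown)

x_vals = data['all_data'][:, 0:320]  # columns 0-319 are the features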

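# Valence labels from column 320: 'Neg' -> 0, 'Neu' -> 1, anything else -> 2

y_vals_new = np.array([0 if each == 'Neg' else 1 if each == 'Neu' else 2 for each in data['all_data'][:, 320]])

# Arousal labels from column 321: 'Pas' -> 3, 'Neu' -> 4, anything else -> 5

y_vals_Arousal = np.array([3 if each == 'Pas' else 4 if each == 'Neu' else 5 for each in data['all_data'][:, 321]])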

DEAP_x_train = x_vals[:-256]  # using 80% of the whole data for training

DEAP_x_test = x_vals[-256:]  # using 20% of the whole data (the last 256 samples) for testing

DEAP_y_train = y_vals_new[:-256]  # valence

DEAP_y_test = y_vals_new[-256:]

DEAP_y_train_A = y_vals_Arousal[:-256]  # arousal

DEAP_y_test_A = y_vals_Arousal[-256:]

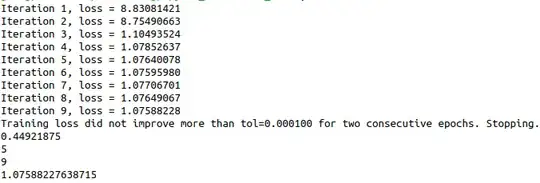

mlp = MLPClassifier(solver='adam', activation='relu', alpha=1e-4, hidden_layer_sizes=(50, 50, 50), random_state=1, max_iter=11, verbose=10, learning_rate_init=.1)

mlp.fit(DEAP_x_train, DEAP_y_train)

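print(mlp.score(DEAP_x_test, DEAP_y_test))  # mean accuracy on the test set

print(mlp.n_layers_)  # number of layers, including input and output

print(mlp.n_iter_)  # number of iterations the solver ran

print(mlp.loss_)  # current loss computed with the loss function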
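
One possible way to adjust the hyperparameters systematically is a cross-validated grid search. Below is a minimal sketch, assuming scikit-learn's `GridSearchCV` around a `StandardScaler` + `MLPClassifier` pipeline. The synthetic data and the grid values are placeholders for illustration, not the DEAP data or recommended settings.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the features and labels (assumption: NOT the real DEAP data).
rng = np.random.default_rng(1)
X = rng.normal(size=(300, 20))
y = rng.integers(0, 3, size=300)  # three classes, like the valence labels 0/1/2

# Scale the features before the MLP; the scaler is refit inside each CV fold.
pipe = make_pipeline(
    StandardScaler(),
    MLPClassifier(solver='adam', activation='relu', max_iter=200, random_state=1),
)

# Hypothetical search ranges. The 'mlpclassifier__' prefix is the step name
# that make_pipeline derives from the lowercased class name.
param_grid = {
    'mlpclassifier__hidden_layer_sizes': [(50,), (50, 50)],
    'mlpclassifier__alpha': [1e-4, 1e-2],
    'mlpclassifier__learning_rate_init': [1e-3, 1e-2],
}

# 3-fold cross-validation over every combination in the grid.
search = GridSearchCV(pipe, param_grid, cv=3, n_jobs=1)
search.fit(X, y)

print(search.best_params_)  # best combination found in the grid
print(search.best_score_)   # mean cross-validated accuracy of that combination
```

With the real data, `X` and `y` would be replaced by `DEAP_x_train` and `DEAP_y_train`, and the held-out test set would only be scored once at the end with `search.score(DEAP_x_test, DEAP_y_test)`.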