Media's explanation is true for regression problems. These are problems where you predict a continuous target variable.

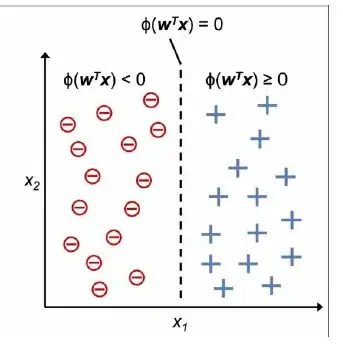

Your image shows a classification problem. Here, the target variable takes only two values (typically -1 and 1 in the Perceptron algorithm).

In that case, an optimal solution $w^*$ is a vector of weights that perfectly separates both classes.

If such a solution exists, the Perceptron algorithm will find it. But: If there is one optimal solution, there are usually infinitely many other optimal solutions. You can easily see this in your image: You can move the line a little to the left or to the right, and you can rotate it a little, and it still perfectly separates the classes.

So while the Perceptron algorithm will find an optimal solution if there is one, you cannot know which one it will find. That depends on the random starting parameters.

This is different e.g. for support vector machines. Here, there is either no optimal solution or exactly one optimal solution.