    import org.apache.spark.SparkContext
    import org.apache.spark.SparkConf
    import org.apache.spark.sql.cassandra.CassandraSQLContext

    object Test {
      val sparkConf = new SparkConf(true).set("spark.cassandra.connection.host", <Cassandra Server IP>)
      val sc = new SparkContext(sparkConf)
      val cassandraSQLContext = new CassandraSQLContext(sc)

      val numberAsString = cassandraSQLContext.sql("select * from testing.test").first().getAs[Int]("number").toString()

      val testRDD = sc.parallelize(List(0, 0))
      val newRDD = testRDD.map { x => numberAsString }
    }

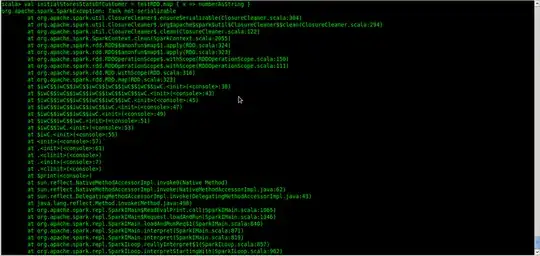

This is the code I have written in Spark. I expected it to work because I evaluate `numberAsString` first and only then use it inside the `map` function, but it fails with a "Task not serializable" error. I am running the job in local mode.
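
For context, here is a minimal sketch of one common way this kind of error arises. It does not use Spark at all: it only uses plain Java serialization, which is what Spark uses by default for closures. The names `Resource`, `Holder`, and `ClosureCheck` are hypothetical and are not part of the code above. `Resource` stands in for a non-serializable object such as a `SparkContext`. Whether this is what happens here is an assumption, since it depends on how the code is compiled and run (for example as a standalone application or inside a shell or notebook).

```scala
import java.io.{ByteArrayOutputStream, NotSerializableException, ObjectOutputStream}

// Hypothetical stand-in for a non-serializable resource such as SparkContext.
class Resource

// Hypothetical holder class that is itself not Serializable.
class Holder {
  val resource = new Resource
  val numberAsString = "42"

  // Reads a field of the enclosing instance, so the function captures `this`.
  def capturing: Int => String = x => numberAsString

  // Copies the field into a local value first, so only the String is captured.
  def localCopy: Int => String = {
    val local = numberAsString
    x => local
  }
}

object ClosureCheck {
  // Returns true if `obj` can be written with plain Java serialization.
  def serializes(obj: AnyRef): Boolean =
    try {
      val out = new ObjectOutputStream(new ByteArrayOutputStream())
      out.writeObject(obj)
      out.close()
      true
    } catch {
      case _: NotSerializableException => false
    }

  def main(args: Array[String]): Unit = {
    val h = new Holder
    println(s"capturing serializes: ${serializes(h.capturing)}")
    println(s"localCopy serializes: ${serializes(h.localCopy)}")
  }
}
```

In this sketch the value is already a plain `String` in both cases. What differs is whether the function also drags the enclosing instance, and everything that instance holds, into the serialized closure.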