I’m currently doing some research on methods that tackle the intent classification and slot filling problems in NLP. One of the approaches I chose to start experimenting with is proposed in the following paper:

https://arxiv.org/abs/1609.01454

In this paper, the network is trained jointly on both tasks. The encoder-decoder architecture is shown below:

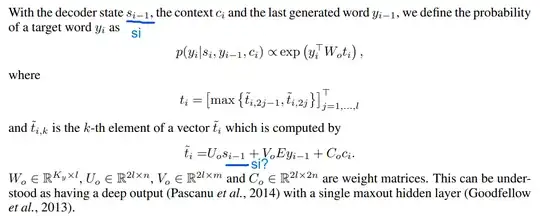

The encoder is a bidirectional LSTM and the decoder is another LSTM. Because both tasks are learned jointly, there is one decoder for intent classification and another for slot label prediction. At each decoding timestep, the decoder receives the previous hidden state, the previously generated token (or the ground-truth token during training), and a context vector computed by attention over all of the encoder’s hidden states. A few implementation details are worth noting:

- The last hidden state of the backward encoder LSTM is used as the decoder’s initial hidden state (and, likewise, the last cell state initializes the decoder’s cell state), following the paper “Neural Machine Translation by Jointly Learning to Align and Translate”.
- In the output layer, the current hidden state, the cell state (context), and the previous output token are each linearly projected and passed through a maxout layer, and the result is projected again to produce the slot label probabilities, as described in that same paper.
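The decoder-side pieces described above can be sketched in framework-agnostic NumPy as follows. This is a minimal sketch under stated assumptions, not the paper’s or the repo’s code: the attention score uses Bahdanau-style additive scoring (one common choice for the feed-forward scoring function), the maxout pool size is 2, the LSTM cell update itself is omitted, and every function name, weight name (`W_s`, `W_h`, `v`, `U`, `C`, `V`, `W_out`) and size is hypothetical. Bahdanau et al. also pass the initial backward state through `tanh(W_s ·)`; the sketch copies it directly, as described above.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    z = np.exp(x - x.max())
    return z / z.sum()

def attention_context(s_prev, enc_states, W_s, W_h, v):
    """Additive attention scores e_k = v . tanh(W_s s_prev + W_h h_k).

    s_prev: (d_dec,) previous decoder state; enc_states: (T, d_enc) all encoder
    states (forward/backward concatenated). Returns context (d_enc,), weights (T,).
    """
    scores = np.tanh(enc_states @ W_h.T + W_s @ s_prev) @ v
    weights = softmax(scores)
    return weights @ enc_states, weights       # context = weighted sum of h_k

def decoder_input(prev_gold, prev_pred, label_emb, teacher_forcing):
    # Teacher forcing feeds the gold previous label, otherwise the model's own prediction.
    return label_emb[prev_gold if teacher_forcing else prev_pred]

def init_decoder_from_backward(h_bwd, c_bwd):
    # Backward-LSTM states indexed by input position: the backward LSTM reads
    # right-to-left, so its last state (after the whole sentence) is at index 0.
    return h_bwd[0].copy(), c_bwd[0].copy()

def maxout(x, pool=2):
    # Keep the max of each consecutive group of `pool` units.
    return x.reshape(-1, pool).max(axis=1)

def slot_output(h, ctx, e_prev, U, C, V, W_out):
    # Project hidden state, context vector ("cell state (context)" above) and
    # previous-label embedding, sum them, apply maxout, then project to
    # slot-label probabilities.
    t = maxout(U @ h + C @ ctx + V @ e_prev)
    return softmax(W_out @ t)

# Tiny demo with random weights; all sizes are illustrative.
rng = np.random.default_rng(0)
T, d, d_att, d_emb, k, n_labels = 5, 4, 6, 3, 5, 10
d_enc = 2 * d                                   # bidirectional encoder
H = rng.normal(size=(T, d_enc))
h_bwd, c_bwd = rng.normal(size=(T, d)), rng.normal(size=(T, d))
label_emb = rng.normal(size=(n_labels, d_emb))

h0, c0 = init_decoder_from_backward(h_bwd, c_bwd)
context, alpha = attention_context(
    h0, H, rng.normal(size=(d_att, d)), rng.normal(size=(d_att, d_enc)),
    rng.normal(size=d_att))
e_prev = decoder_input(2, 7, label_emb, teacher_forcing=True)
# (an LSTM cell would consume [e_prev; context] here to produce the new hidden state)
probs = slot_output(h0, context, e_prev,
                    U=rng.normal(size=(2 * k, d)), C=rng.normal(size=(2 * k, d_enc)),
                    V=rng.normal(size=(2 * k, d_emb)), W_out=rng.normal(size=(n_labels, k)))
```

One detail the sketch makes explicit: if backward-LSTM outputs are stored by input position, the final backward state is at index 0, not -1. In PyTorch, the `h_n` returned by a bidirectional `nn.LSTM` already contains the final forward and final reverse hidden states, whereas the last element of `output` contains the final forward state and the *initial* reverse state.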

Among the implementations of this paper available on GitHub, the one with the most stars is:

https://github.com/DSKSD/RNN-for-Joint-NLU/tree/master

However, I think the repo has some issues with the attention mechanism, the decoder initialization, and the initialization of the network’s weights in general. So I made a temporary refined version of it in the following notebook:
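For comparison when checking the weight initialization, here is a minimal NumPy sketch of one explicit LSTM initialization scheme. The uniform range of ±0.1 and the forget-gate bias of 1.0 are assumptions chosen for illustration, not values taken from the paper or the repo, and `init_lstm_layer` is a hypothetical helper. For reference, PyTorch’s default for `nn.LSTM` samples every weight and bias from U(−1/√hidden_size, 1/√hidden_size).

```python
import numpy as np

def init_lstm_layer(input_size, hidden_size, init_range=0.1, forget_bias=1.0, seed=0):
    """Hypothetical init for one LSTM layer: uniform weights, zero biases,
    forget-gate bias set to `forget_bias` (all values are assumptions).

    Gate blocks are stacked as (input, forget, cell, output), the same order
    PyTorch's nn.LSTM uses for weight_ih_l0 / weight_hh_l0.
    """
    rng = np.random.default_rng(seed)
    W_ih = rng.uniform(-init_range, init_range, size=(4 * hidden_size, input_size))
    W_hh = rng.uniform(-init_range, init_range, size=(4 * hidden_size, hidden_size))
    b = np.zeros(4 * hidden_size)
    b[hidden_size:2 * hidden_size] = forget_bias   # forget-gate block only
    return {"W_ih": W_ih, "W_hh": W_hh, "b": b}

params = init_lstm_layer(input_size=8, hidden_size=4)
```

The sketch uses a single bias vector; PyTorch’s `nn.LSTM` keeps two (`bias_ih_l0` and `bias_hh_l0`) that are summed, so setting the forget-gate slice to 1.0 in both would give an effective bias of 2.0.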

https://www.kaggle.com/code/minhtucanh/rnn4jointnlu

The notebook isn’t well organized yet, but it includes my modifications to the Encoder, the Decoder, and the training procedure.

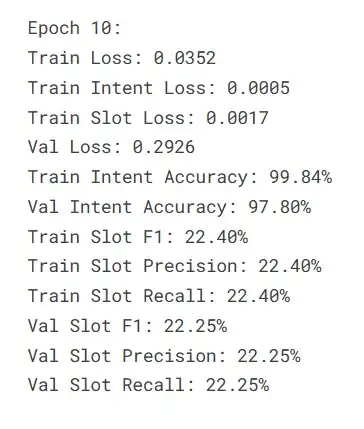

However, as you can see, while intent classification performance improves substantially, the slot filling F1 stays the same throughout training and evaluation (even though teacher forcing is used).

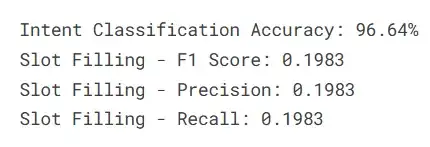
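As a point of reference for reading the slot F1 curve: slot filling on ATIS is commonly scored at the chunk level (CoNLL `conlleval`-style), where a predicted slot only counts if both its label and its exact span match. The pure-Python sketch below is a simplified approximation of that metric, not `conlleval` itself and not necessarily how the notebook computes F1; `bio_spans` and `slot_f1` are hypothetical helpers. Under this metric, a model that predicts `O` for every token scores exactly 0.

```python
def bio_spans(tags):
    """Extract (label, start, end) chunks from a BIO tag sequence.

    An I- tag that does not continue a chunk with the same label starts a new
    chunk (a lenient rule, similar in spirit to conlleval).
    """
    spans, label, start = [], None, None
    for i, tag in enumerate(list(tags) + ["O"]):   # sentinel "O" closes the last chunk
        continues = tag.startswith("I-") and tag[2:] == label
        if not continues:
            if label is not None:
                spans.append((label, start, i))
            label, start = (tag[2:], i) if tag != "O" else (None, None)
    return set(spans)

def slot_f1(gold_seqs, pred_seqs):
    """Micro-averaged chunk-level F1 over a corpus of tag sequences."""
    tp = n_gold = n_pred = 0
    for gold, pred in zip(gold_seqs, pred_seqs):
        g, p = bio_spans(gold), bio_spans(pred)
        tp += len(g & p)
        n_gold += len(g)
        n_pred += len(p)
    if tp == 0:
        return 0.0
    precision, recall = tp / n_pred, tp / n_gold
    return 2 * precision * recall / (precision + recall)

gold = [["O", "B-fromloc", "I-fromloc", "O", "B-toloc"]]
all_o = [["O"] * 5]
half = [["O", "B-fromloc", "I-fromloc", "O", "O"]]
```

With these toy sequences, `slot_f1(gold, all_o)` is 0.0 and `slot_f1(gold, half)` is 2/3 (precision 1.0, recall 0.5).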

The testing result is poor:

I have been looking at this for a while but still don’t understand what is going wrong, and I’m not sure what to try next to find out where the issue comes from. I would appreciate any help or comments on this!

Thanks.