This is a MWE of my problem, basically I want to find out the map between qin and qout using a Gaussian process and with that model trained, test the prediction of some validation data qvalin against qvalout.

tensors.pt: https://drive.google.com/file/d/1LwYgEGqRRBPurIh8Vrb7f_lK-C9q0eQ_/view?usp=drive_link

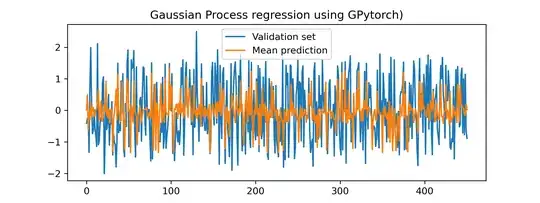

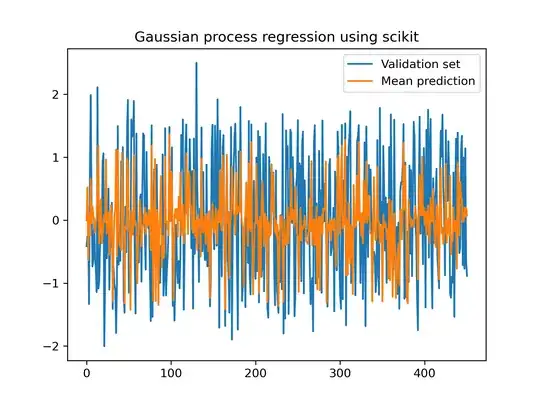

I have left all default hyperparameters, except the learning rate. I haven't been able to lower the error below 92 % for either GPytorch or scikit-learn. I did some optimization but couldn't find a good combination of hyperparameters. Is there anything I am not doing correctly?

import os

import glob

import pdb

import numpy as np

import matplotlib.pyplot as plt

import time

from sklearn.gaussian_process import GaussianProcessRegressor

from sklearn.gaussian_process.kernels import RBF

import torch

import gpytorch

import torch.optim as optim

from models_GP import MultitaskGPModel

def main():

t1 = time.time()

ten = torch.load('tensors.pt')

qin = ten['qin']

qout = ten['qout']

qvalin = ten['qvalin']

qvalout = ten['qvalout']

# Rescaling

qin_mean = qin.mean(dim=0)

qin = qin - qin_mean

qin = torch.divide(qin,qin.std(dim=0))

qout_mean = qout.mean(dim=0)

qout = qout - qout_mean

qout = torch.divide(qout,qout.std(dim=0))

qvalin_mean = qvalin.mean(dim=0)

qvalin = qvalin - qvalin_mean

qvalin = torch.divide(qvalin,qvalin.std(dim=0))

qvalout_mean = qvalout.mean(dim=0)

qvalout = qvalout - qvalout_mean

qvalout = torch.divide(qvalout,qvalout.std(dim=0))

qin = qin.reshape(-1, 1)

#qout = qout.reshape(-1, 1)

qvalin = qvalin.reshape(-1, 1)

#qvalout = qvalout.reshape(-1, 1)

# Scikit

t1 = time.time()

kernel = 1 * RBF(length_scale=1.0, length_scale_bounds=(1e-2, 1e2))

gaussian_process = GaussianProcessRegressor(kernel=kernel, n_restarts_optimizer=9)

gaussian_process.fit(qin, qout)

gaussian_process.kernel_

mean_prediction, std_prediction = gaussian_process.predict(qvalin, return_std=True)

print('Optimization time: {}'.format(time.time() - t1))

plt.plot(qvalout, label=r"Validation set", )

plt.plot(mean_prediction, label="Mean prediction")

print(f'´Norm of diff: {100*np.linalg.norm(mean_prediction - qvalout.numpy()) / np.linalg.norm(qvalout)}%')

plt.legend()

_ = plt.title("Gaussian process regression using scikit")

plt.savefig('scikit_.png', dpi=300)

plt.show()

# GPytorch

num_tasks = 1

likelihood = gpytorch.likelihoods.MultitaskGaussianLikelihood(num_tasks)

model = MultitaskGPModel(qin, qout, likelihood)

model.train()

likelihood.train()

opt = torch.optim.Adam(model.parameters(), lr=1, betas=(0.9, 0.999),weight_decay=0)

mll = gpytorch.mlls.ExactMarginalLogLikelihood(likelihood, model)

training_iter = 20

scheduler = optim.lr_scheduler.ReduceLROnPlateau(opt, mode='min', factor=0.5, patience=100, verbose=True)

for i in range(training_iter):

opt.zero_grad()

output = model(qin)

loss = -mll(output, qout)

loss.backward()

print('Iter %d/%d - Loss: %.3f' % (i + 1, training_iter, loss.item()))

opt.step()

print('Optimization time: {}'.format(time.time() - t1))

model.eval()

likelihood.eval()

f, (y1_ax) = plt.subplots(1, 1, figsize=(8, 3))

with torch.no_grad(), gpytorch.settings.fast_pred_var():

test_x = qvalin

test_x_out = qvalout

predictions = likelihood(model(test_x))

mean = predictions.mean

lower, upper = predictions.confidence_region()

y1_ax.plot(test_x_out.numpy(), label='Validation set')

y1_ax.plot(mean.numpy(), label='Mean prediction')

plt.legend()

print(f'Norm of diff: {100 * np.linalg.norm(mean.numpy() - test_x_out.numpy()) / np.linalg.norm(test_x_out.numpy())}%')

y1_ax.set_title('Gaussian Process regression using GPytorch)')

plt.savefig('gpytorch_.png', dpi=300)

plt.show()

if name == "main":

main()

models_GP.py

class MultitaskGPModel(gpytorch.models.ExactGP):

def __init__(self, train_x, train_y, likelihood):

super(MultitaskGPModel, self).__init__(train_x, train_y, likelihood)

self.mean_module = gpytorch.means.MultitaskMean(

gpytorch.means.ConstantMean(), num_tasks=1

)

self.covar_module = gpytorch.kernels.MultitaskKernel(

gpytorch.kernels.RBFKernel(), num_tasks=1, rank=1

)

def forward(self, x):

mean_x = self.mean_module(x)

covar_x = self.covar_module(x)

return gpytorch.distributions.MultitaskMultivariateNormal(mean_x, covar_x)