About 9% of the US population has a diabetes diagnosis. So a binary random classifier that guesses positive 50% of the time and negative 50% of the time would:

- likely be incorrect when it guesses positive, leading to more False Positives (FP) than True Positives (TP), i.e. FP > TP; and

- likely be correct when it guesses negative, leading to more True Negatives (TN) than False Negatives (FN), i.e. TN > FN.
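The arithmetic behind these two bullets can be sketched with expected counts. This is a minimal sketch assuming a hypothetical population of 100,000 people with exactly 9% prevalence; `expected_confusion` is a hypothetical helper name, not something from the course.

```python
def expected_confusion(prevalence: float, p_guess_positive: float, n: int = 100_000) -> dict:
    """Expected confusion-matrix counts for a classifier that ignores its input
    and predicts positive with probability p_guess_positive."""
    positives = prevalence * n        # people who actually have a diagnosis
    negatives = (1 - prevalence) * n  # people who do not
    return {
        "TP": p_guess_positive * positives,        # guessed positive, actually positive
        "FN": (1 - p_guess_positive) * positives,  # guessed negative, actually positive
        "FP": p_guess_positive * negatives,        # guessed positive, actually negative
        "TN": (1 - p_guess_positive) * negatives,  # guessed negative, actually negative
    }

counts = expected_confusion(prevalence=0.09, p_guess_positive=0.5)
print({k: round(v) for k, v in counts.items()})
```

With a 50/50 guess this works out to about 4,500 TP vs 45,500 FP and 45,500 TN vs 4,500 FN, which matches the two bullets above.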

Given all this, would the ROC curve of such a random classifier still be a diagonal line, even though the data itself is skewed toward negative diagnoses?

I'm following along with an Azure AI Fundamentals course, which states:

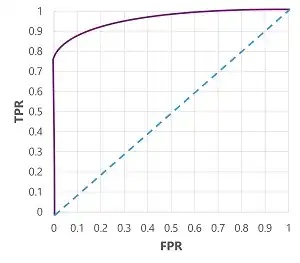

> The ROC curve for a perfect model would go straight up the TPR axis on the left and then across the FPR axis at the top. Since the plot area for the curve measures 1x1, the area under this perfect curve would be 1.0 (meaning that the model is correct 100% of the time). In contrast, a diagonal line from the bottom-left to the top-right represents the results that would be achieved by randomly guessing a binary label; producing an area under the curve of 0.5. In other words, given two possible class labels, you could reasonably expect to guess correctly 50% of the time.
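The course's claim could be checked against the 9% case with a small simulation. This is a minimal sketch, assuming synthetic labels drawn with 9% prevalence and a guesser that ignores its input and predicts positive with probability `p`; `random_guesser_roc_point` is a hypothetical helper. If the claim holds regardless of imbalance, each (FPR, TPR) pair should land near (p, p), so sweeping `p` from 0 to 1 would trace the diagonal.

```python
import random

def random_guesser_roc_point(labels, p_guess_positive, rng):
    """Return (FPR, TPR) for a classifier that ignores its input and
    predicts positive with probability p_guess_positive."""
    tp = fp = tn = fn = 0
    for y in labels:
        guess = rng.random() < p_guess_positive
        if y and guess:
            tp += 1
        elif y:
            fn += 1
        elif guess:
            fp += 1
        else:
            tn += 1
    # FPR is normalized over actual negatives, TPR over actual positives.
    return fp / (fp + tn), tp / (tp + fn)

rng = random.Random(0)
n = 200_000
labels = [rng.random() < 0.09 for _ in range(n)]  # ~9% positive (assumed prevalence)

for p in (0.1, 0.5, 0.9):
    fpr, tpr = random_guesser_roc_point(labels, p, rng)
    print(f"p={p:.1f}  FPR={fpr:.3f}  TPR={tpr:.3f}")
```

Each rate is computed within its own true class (TPR over actual positives, FPR over actual negatives), which is why the sketch tallies all four confusion-matrix cells rather than overall accuracy.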

The image below is from the same link. The dotted blue line represents the ROC curve of the random classifier.