I asked both ChatGPT 4 and Bard the following question to see whether they could get a simple matrix calculation right (after all, Bill Gates said he was impressed by ChatGPT's math ability).

So I asked:

explain to me the result of d in the following numpy code

```python
a = np.array([[[1, 2, 3], [2, 1, 3]]])
b = np.array([[[2, 1, 1], [2, 4, 0]]])
b1 = b.reshape(1, 3, 2)
d = a @ b1
```
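For reference, here is what NumPy itself produces for that snippet. The only addition is the `import numpy as np` that the prompt leaves implicit. `reshape` regroups the six elements of `b` in row-major order, and `@` performs a batched matrix multiply over the leading axis of length 1.

```python
import numpy as np

a = np.array([[[1, 2, 3], [2, 1, 3]]])  # shape (1, 2, 3)
b = np.array([[[2, 1, 1], [2, 4, 0]]])  # shape (1, 2, 3)

# reshape re-reads the 6 elements of b in row-major order as shape (1, 3, 2)
b1 = b.reshape(1, 3, 2)

# batched matrix multiply: (1, 2, 3) @ (1, 3, 2) -> (1, 2, 2)
d = a @ b1

print(b1.tolist())  # [[[2, 1], [1, 2], [4, 0]]]
print(d.tolist())   # [[[16, 5], [17, 4]]]
```

So the expected answer is d = [[[16, 5], [17, 4]]]. For example, the top-left entry is 1*2 + 2*1 + 3*4 = 16.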

Both ChatGPT and Bard got it totally wrong no matter how many times I tried. Since they give a slightly different answer every time, I am only pasting one of them.

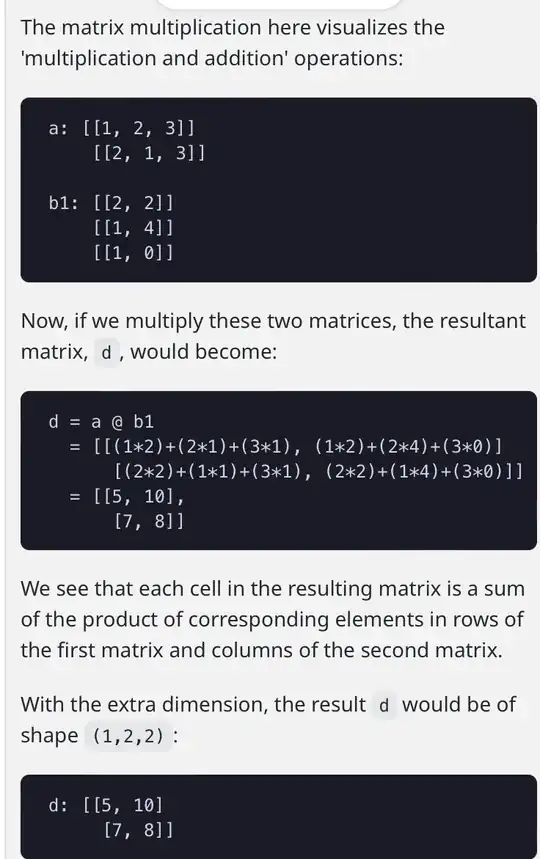

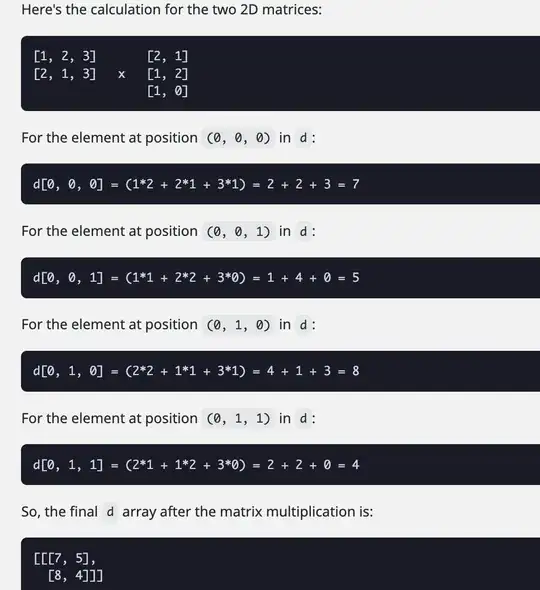

This one is from GPT-4. As you can see, 2 out of 4 calculations are wrong: (1*2)+(2*1)+(3*1) = 7, NOT 5, and (2*2)+(1*1)+(3*1) = 8, NOT 7.
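The two sums above pair the rows [1, 2, 3] and [2, 1, 3] of `a` with [2, 1, 1], which is the first *row* of `b`. That suggests the model used `b` with its last two axes swapped (a transpose) where `b1` should be. The check below is a sketch under that assumption; the name `b_swapped` is just for illustration.

```python
import numpy as np

a = np.array([[[1, 2, 3], [2, 1, 3]]])
b = np.array([[[2, 1, 1], [2, 4, 0]]])

# The corrected arithmetic from the text
assert (1*2) + (2*1) + (3*1) == 7
assert (2*2) + (1*1) + (3*1) == 8

# Assumption: the model treated b1 as b with its last two axes swapped,
# so the columns of its "b1" are the rows of b
b_swapped = b.transpose(0, 2, 1)  # [[[2, 2], [1, 4], [1, 0]]]
d_swapped = a @ b_swapped

print(d_swapped.tolist())  # [[[7, 10], [8, 8]]]
```

So even on the model's own (wrong) matrix, the two flagged entries should be 7 and 8.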

But why is that?

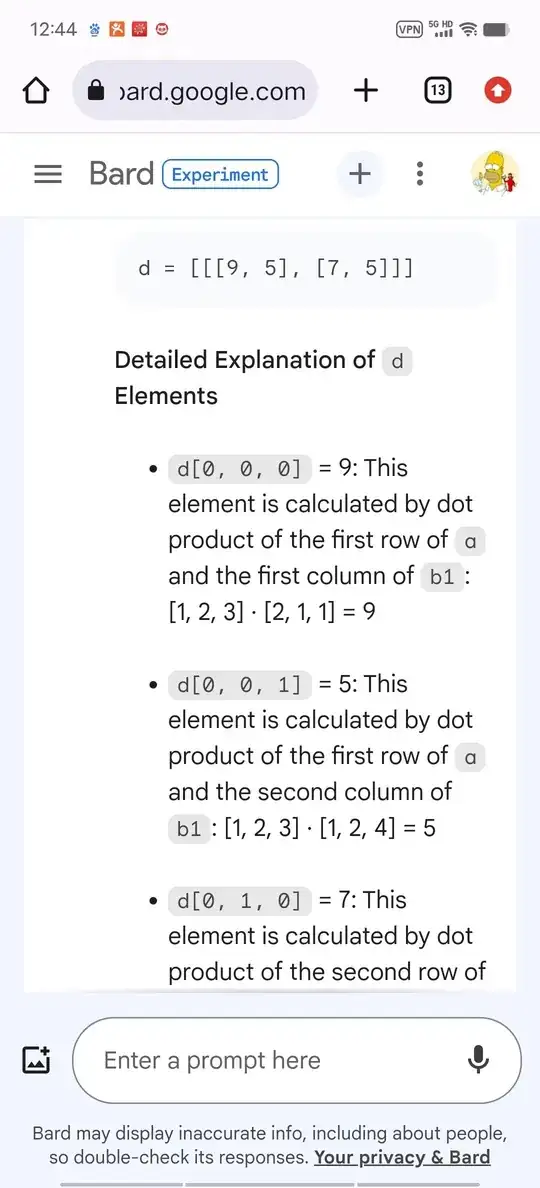

Bard is no different. The other mistake they both made is getting the reshape wrong, but I am only concerned with the matrix arithmetic here.
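Why the reshape matters: `reshape` keeps the elements in row-major (C) order and only regroups them, while a transpose moves elements to new positions. Both give shape (1, 3, 2) here, which makes the mix-up easy to miss. A short comparison:

```python
import numpy as np

b = np.array([[[2, 1, 1], [2, 4, 0]]])

# reshape: same element order, regrouped into 3 rows of 2
reshaped = b.reshape(1, 3, 2)
# transpose of the last two axes: rows of b become columns
transposed = b.transpose(0, 2, 1)

print(reshaped.shape, transposed.shape)  # (1, 3, 2) (1, 3, 2)
print(b.ravel().tolist())                # [2, 1, 1, 2, 4, 0]
print(reshaped.ravel().tolist())         # [2, 1, 1, 2, 4, 0]  (order preserved)
print(transposed.ravel().tolist())       # [2, 2, 1, 4, 1, 0]  (order changed)
```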

I had Bard try many times, and the closest it got was 3 out of 4 calculations right. Its d_10 = [2, 1, 3] · [2, 1, 1] = 2 * 2 + 1 * 1 + 3 * 1 = 7 is wrong; that sum is 8.
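With the correctly reshaped b1 = [[2, 1], [1, 2], [4, 0]], d_10 pairs [2, 1, 3] with the first column [2, 1, 4], not with [2, 1, 1], so the right value is 17. Here is a plain-Python sketch that prints each of the four dot products. The helper name `matmul_2d` is hypothetical, for illustration only, and it works on the single 2-D slice inside the batch axis.

```python
def matmul_2d(x, y):
    """Multiply two 2-D lists, printing each dot product as it is computed."""
    rows, inner, cols = len(x), len(y), len(y[0])
    assert all(len(r) == inner for r in x), "inner dimensions must match"
    out = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            col = [y[k][j] for k in range(inner)]  # column j of y
            out[i][j] = sum(x[i][k] * col[k] for k in range(inner))
            expr = " + ".join(f"{x[i][k]}*{col[k]}" for k in range(inner))
            print(f"d_{i}{j} = {expr} = {out[i][j]}")
    return out

a0 = [[1, 2, 3], [2, 1, 3]]      # a[0]
b1_0 = [[2, 1], [1, 2], [4, 0]]  # b.reshape(1, 3, 2)[0]
d0 = matmul_2d(a0, b1_0)
```

This prints `d_00 = 1*2 + 2*1 + 3*4 = 16`, `d_01 = 1*1 + 2*2 + 3*0 = 5`, `d_10 = 2*2 + 1*1 + 3*4 = 17` and `d_11 = 2*1 + 1*2 + 3*0 = 4`.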

---- Update for ChatGPT 4 Turbo ----

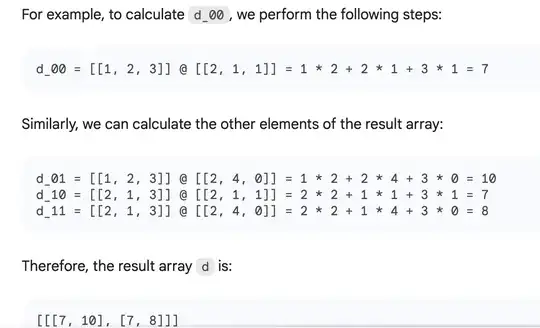

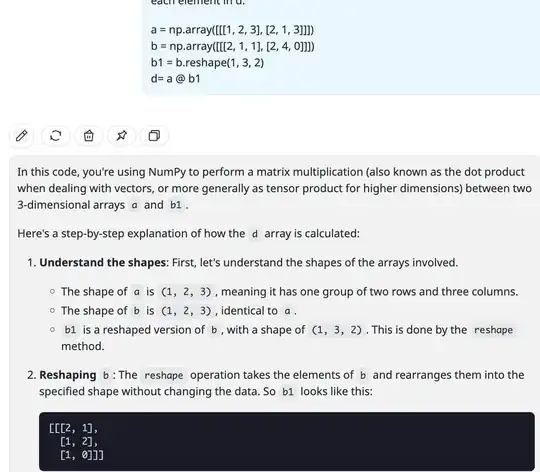

I tried ChatGPT 4 Turbo. This time it still got the reshape wrong (a silly mistake I really don't understand), but it finally got the 4 arithmetic calculations right.

The last row should be [4, 0]!

Finally, all 4 arithmetic calculations are right (but on the wrongly reshaped matrix).
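Why the last row of b1 must be [4, 0]: a row-major reshape flattens the array and hands out consecutive chunks whose length is the new last dimension. Flat element k lands at row k // 2, column k % 2, so the fifth and sixth elements (4 and 0) form the last row. A minimal sketch assuming C order (NumPy's default); `reshape_rows` is a hypothetical helper, not a NumPy function.

```python
def reshape_rows(flat, n_cols):
    """Split a flat list into consecutive rows of length n_cols (row-major / C order)."""
    if len(flat) % n_cols != 0:
        raise ValueError("length must be divisible by n_cols")
    return [flat[k:k + n_cols] for k in range(0, len(flat), n_cols)]

b_rows = [[2, 1, 1], [2, 4, 0]]            # b[0]
flat = [v for row in b_rows for v in row]  # [2, 1, 1, 2, 4, 0]
b1_rows = reshape_rows(flat, 2)
print(b1_rows)  # [[2, 1], [1, 2], [4, 0]]
```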

---- Update for Claude v2 & Llama v2 ----

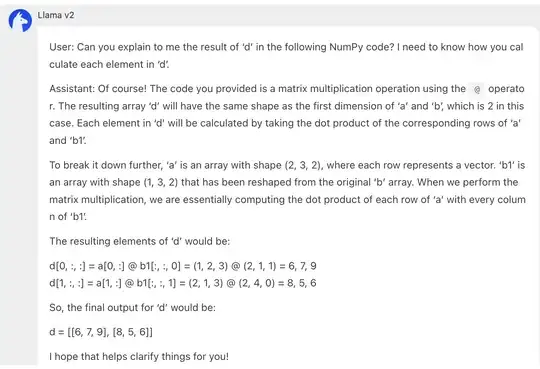

I decided to let Claude v2 and Llama v2 try it too. They did not even come close, which really surprised me. I have only pasted Llama's answer here.