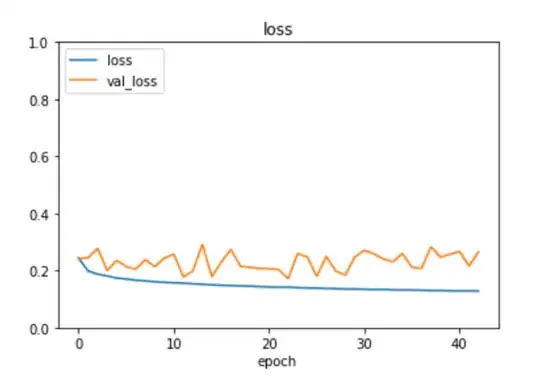

I have trained a model, and the loss on the training dataset (blue) and the validation dataset (orange) is shown below. As I understand it, the ideal case is that both the training and validation loss converge and stabilize at similar values, which indicates that the model is neither underfitting nor overfitting. However, I am not sure how to interpret the curves for this model. What can you tell from its loss, please?

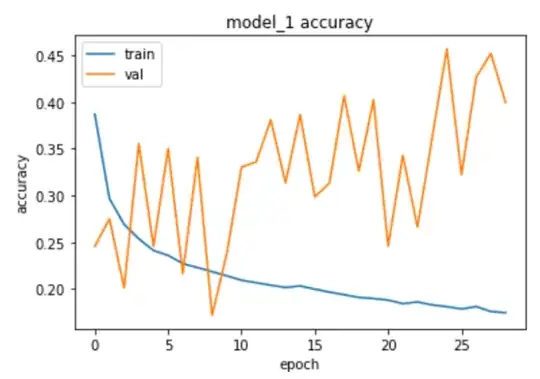

In addition, this is the accuracy of the model:

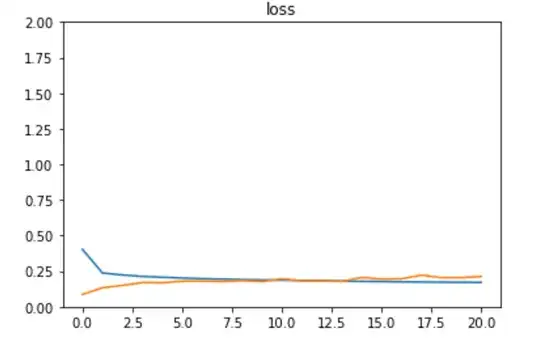
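To make the rule of thumb I described above concrete, here is a rough sketch of how I would check it numerically. This is only a heuristic of my own: the function name `diagnose_loss_curves`, the window size, and both thresholds are arbitrary assumptions, not something taken from a library, and the inputs are assumed to be plain lists of per-epoch loss values.

```python
def diagnose_loss_curves(train_loss, val_loss, window=5, gap_tol=0.1, slope_tol=0.01):
    """Crude heuristic for reading per-epoch loss curves.

    window, gap_tol and slope_tol are arbitrary, hypothetical defaults;
    they would need tuning for any real training run.
    """
    if len(train_loss) != len(val_loss):
        raise ValueError("train_loss and val_loss must have the same length")
    if len(train_loss) < 2 * window:
        raise ValueError("need at least 2 * window epochs")

    def mean(values):
        return sum(values) / len(values)

    # Compare the average of the last `window` epochs with the window before it.
    train_prev = mean(train_loss[-2 * window:-window])
    train_recent = mean(train_loss[-window:])
    val_prev = mean(val_loss[-2 * window:-window])
    val_recent = mean(val_loss[-window:])

    # Relative changes, so the thresholds do not depend on the loss scale.
    train_still_falling = (train_prev - train_recent) > slope_tol * train_prev
    val_rising = (val_recent - val_prev) > slope_tol * val_prev

    # Relative gap between validation and training loss at the end of training.
    gap = (val_recent - train_recent) / max(train_recent, 1e-12)

    if val_rising and gap > gap_tol:
        return "overfitting"
    if train_still_falling:
        return "still learning"
    if gap > gap_tol:
        return "generalization gap"
    return "converged"
```

With this sketch, two flat curves that sit close together are reported as `"converged"`, while a training loss that keeps dropping as the validation loss turns upward is reported as `"overfitting"`. It ignores noise in the curves, so it is at best a first sanity check next to looking at the plots.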

Update: after setting the learning rate to 0.0001, as the answer suggested, I got the following loss:

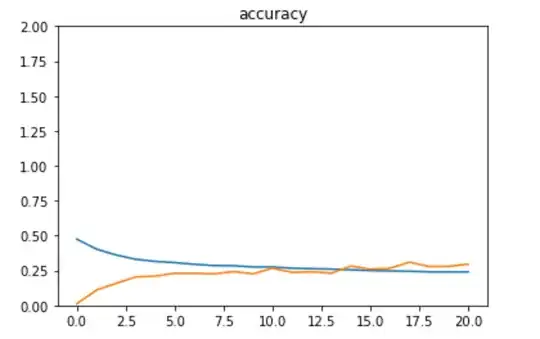

And accuracy of the model: