NOTE: By malleable/non-malleable, I mean the ability (or inability) to change a byte/block of the ciphertext so that only the corresponding byte/block of the plaintext changes.
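To illustrate the kind of malleability meant here: with a stream cipher such as ChaCha, ciphertext = plaintext XOR keystream, so flipping a ciphertext bit flips exactly the same plaintext bit. The sketch below assumes a toy SHA-256 counter-mode keystream (`toy_keystream` is a hypothetical stand-in, not ChaCha and not meant for real use) because the Python standard library has no ChaCha implementation.

```python
import hashlib


def toy_keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Hypothetical stand-in for a stream cipher: SHA-256 in counter mode.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


key, nonce = b"k" * 32, b"n" * 12
plaintext = b"PAY 100 TO BOB"
ciphertext = xor_bytes(plaintext, toy_keystream(key, nonce, len(plaintext)))

# Without knowing the key, flip the bits that turn '1' into '9' at byte 4.
tampered = bytearray(ciphertext)
tampered[4] ^= ord("1") ^ ord("9")

recovered = xor_bytes(bytes(tampered), toy_keystream(key, nonce, len(plaintext)))
print(recovered)  # b'PAY 900 TO BOB': only byte 4 changed
```

An attacker who can guess the plaintext at one position can therefore rewrite that position without touching anything else.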

I understand that we use authentication (via HMAC, UMAC, etc.) to verify integrity. However, where authentication is not possible (for example, in a very constrained environment with limited storage or CPU power), can we transform the plaintext before encryption to make it non-malleable?

Using several "rounds" of a move-to-front (MTF) transform, reversing the plaintext after each iteration, does exactly that. Once the plaintext is larger than about 1 KB, only a few rounds are needed. This creates a sort of avalanche effect, so that tampering at any position affects a large part of the plaintext.

My MTF transform uses an index of all possible byte values (0-255). Each "round" consists of an MTF transform, a reset of the index, a reversal of the plaintext, and another MTF transform.
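A minimal Python sketch of the round structure described above, assuming each MTF pass starts from a fresh 0-255 index and that a two-round transform simply applies the round twice. The altered indices and the running-sum tweak from the later update are not reproduced, and all function names are hypothetical.

```python
import os


def mtf_encode(data: bytes) -> bytes:
    table = list(range(256))  # fresh index of all byte values ("reset the index")
    out = bytearray()
    for b in data:
        i = table.index(b)  # emit the byte's current position
        out.append(i)
        table.insert(0, table.pop(i))  # move it to the front
    return bytes(out)


def mtf_decode(data: bytes) -> bytes:
    table = list(range(256))
    out = bytearray()
    for i in data:
        b = table.pop(i)  # byte sitting at the emitted position
        out.append(b)
        table.insert(0, b)
    return bytes(out)


def encode_round(data: bytes) -> bytes:
    # One round: MTF, reset index, reverse, MTF again.
    return mtf_encode(mtf_encode(data)[::-1])


def decode_round(data: bytes) -> bytes:
    # Inverse of encode_round: undo the steps in reverse order.
    return mtf_decode(mtf_decode(data)[::-1])


def transform(data: bytes, rounds: int = 2) -> bytes:
    for _ in range(rounds):
        data = encode_round(data)
    return data


def untransform(data: bytes, rounds: int = 2) -> bytes:
    for _ in range(rounds):
        data = decode_round(data)
    return data


if __name__ == "__main__":
    msg = os.urandom(256)
    assert untransform(transform(msg)) == msg
```

When decoding a single MTF pass, a change at one position can affect only the decoded bytes at or after it, because only the table state from that point on differs. The reversal followed by a second pass is what can carry the change back toward the start of the plaintext.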

Tests suggest that with two rounds of my MTF on random 256-byte inputs, flipping any bit changes, on average, over 50% of the decoded bits. For a 512-byte input, the average is 53%. For a (barely tested) 1024-byte input, both the average and the minimum fraction of bits changed exceed 50% for any flipped bit.

The outliers (the minimum number of bits changed) are systematic for small enough inputs. For inputs of 512 bytes or less, the lowest number of bits changed is always produced by flipping the least significant (rightmost) bit of the first input byte. Additionally, flipping any bit at the end of a small input always changes more than 50% of the bits, but this does not hold at the beginning. These undesirable effects seem to disappear with a 1024-byte input, although testing this takes too long (about 2 minutes per input!) for me to make any useful statement.
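The measurement described above can be sketched as a small harness: flip each bit of the encoded data in turn (under a stream cipher like ChaCha this is exactly what flipping the corresponding ciphertext bit does), decode, and count how many bits differ from the untampered decoding. This is an assumed reconstruction of such a test, not the original test code; `measure_avalanche` and `bit_diff` are hypothetical names, and `decode` is whatever inverse transform is being evaluated.

```python
from typing import Callable, Tuple


def bit_diff(a: bytes, b: bytes) -> int:
    """Count differing bits between two byte strings (up to the shorter length)."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def measure_avalanche(
    encoded: bytes, decode: Callable[[bytes], bytes]
) -> Tuple[float, float, int]:
    """Flip each bit of `encoded` in turn, decode, and compare with the untampered decoding.

    Returns (average fraction of bits changed, minimum fraction, bit position of the minimum).
    Bit position p means byte p // 8, bit p % 8 (bit 0 = least significant, i.e. rightmost).
    """
    reference = decode(encoded)
    total_bits = 8 * len(reference)
    fractions = []
    for pos in range(8 * len(encoded)):
        tampered = bytearray(encoded)
        tampered[pos // 8] ^= 1 << (pos % 8)  # single-bit flip
        fractions.append(bit_diff(reference, decode(bytes(tampered))) / total_bits)
    # First position with the fewest changed bits.
    worst = min(range(len(fractions)), key=fractions.__getitem__)
    return sum(fractions) / len(fractions), fractions[worst], worst


if __name__ == "__main__":
    sample = bytes(range(64))
    # Identity "transform": the fully malleable baseline.
    print(measure_avalanche(sample, lambda d: d))
```

With the identity as `decode`, every flip changes exactly 1 of 512 bits, the fully malleable baseline; a transform with good diffusion should push the average toward 0.5 and keep the minimum close to it. The harness calls `decode` 8 × n times for an n-byte input, and each call costs at least O(n), so the total cost grows at least quadratically with input size.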

The main problem with this approach is speed. I am using a small notebook with an Intel Atom Z3735 CPU @ 1.33 GHz. Encoding 1 KB takes around 0.034 seconds; encrypting 1 KB with ChaCha takes about half that.

NOTE: The transform function is completely public (per Kerckhoffs's principle) and depends only on the plaintext. It does nothing except add non-malleability to the plaintext, and therefore to the ciphertext. It is applied before ANY cipher, not combined with one.

UPDATE: I've updated the transform function: I altered the indices used for the transform rounds, reduced the number of rounds to 2, and added a running sum of the input data to the process. It is now faster than ChaCha and achieves non-malleability at 512 bytes of input, without any bias (within the limits of my testing). It adds a nonce on encoding and removes it on decoding. The code is here if anyone wants to have a look.

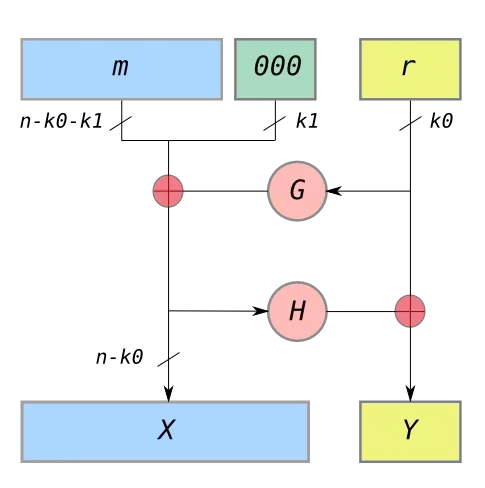

I'm aware I could make the code faster, but I still think there must be some standard approaches to the overall problem. The only modern, similar thing I've found is the all-or-nothing transform, which Rivest discusses here. It seems strange that the idea (assuming Rivest is correct about the added security) never really caught on.
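For comparison, a rough sketch of the idea behind Rivest's all-or-nothing "package transform": a fresh random inner key masks every block, and a final block hides that key under a hash of every masked block, so changing any output bit yields a wrong inner key and garbles the whole message on unpackaging. Rivest's construction uses a block cipher with a fixed public key; this sketch substitutes SHA-256 for it (the Python standard library has no block cipher), and `package`/`unpackage` are hypothetical names. Like the MTF transform, it is keyless and public and is applied to the plaintext before any cipher.

```python
import hashlib
import os

BLOCK = 32  # SHA-256 digest size; also the block size and inner-key size


def _h(label: bytes, *parts: bytes) -> bytes:
    # Domain-separated SHA-256 (stand-in for Rivest's fixed-key block cipher)
    return hashlib.sha256(label + b"".join(parts)).digest()


def _xor(a: bytes, b: bytes) -> bytes:
    # XOR up to the shorter length (handles a short final block)
    return bytes(x ^ y for x, y in zip(a, b))


def package(message: bytes) -> bytes:
    """Masked message blocks followed by one 32-byte block that hides the inner key."""
    inner_key = os.urandom(BLOCK)  # fresh random key on every call (randomizes the output)
    tail = inner_key
    out = []
    for i in range(0, len(message), BLOCK):
        idx = (i // BLOCK).to_bytes(8, "big")
        c = _xor(message[i:i + BLOCK], _h(b"pad", inner_key, idx))
        out.append(c)
        tail = _xor(tail, _h(b"mix", c, idx))  # every output block feeds into the key block
    return b"".join(out) + tail


def unpackage(packaged: bytes) -> bytes:
    body, tail = packaged[:-BLOCK], packaged[-BLOCK:]
    blocks = [body[i:i + BLOCK] for i in range(0, len(body), BLOCK)]
    inner_key = tail
    for n, c in enumerate(blocks):
        # Any changed bit in any block (or in the tail) yields a wrong inner key.
        inner_key = _xor(inner_key, _h(b"mix", c, n.to_bytes(8, "big")))
    return b"".join(
        _xor(c, _h(b"pad", inner_key, n.to_bytes(8, "big"))) for n, c in enumerate(blocks)
    )
```

This does not detect tampering the way a MAC does; it only turns a local change into a global one. The cost is two SHA-256 calls per 32-byte block plus 32 bytes of expansion, and the random inner key plays a role similar to the nonce the updated MTF transform adds.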

My questions:

- Is there any existing approach that does something similar to this? Basically, some sort of modern version of Russian copulation?

- Does anyone know of a potentially faster way to transform the plaintext (completely independently of the cipher) and make it (potentially) non-malleable?