Let $H$ be a collision-resistant hash function and $P_c[H](S)$ the collision probability over a sample set $S$ of input elements (e.g. random numbers). Does it increase with double hashing? That is,

$P_c[H\circ H](S) \ge P_c[H](S)$ ?

Well... we need a precise definition of $P_c$. Perhaps the problem here is also the choice of a good probability definition (see the notes below).

NOTES

Application of the answer: it matters in the context of checksums used to verify integrity in the digital preservation of public files.

Imagine the checksum (the hash digest $H(x)$) of a PDF article from PubMed Central or arxiv.org, and that we need to ensure its integrity, with no "PDF attack" (or at least minimizing the risk), both today and in the far future, ~20 years from now.

If the answer is "YES, there is a small increase", the recommendation will be "please don't use double hashing as the standard checksum for PMC or arxiv.org".

General probability

The general collision probability is defined as

Given $k$ randomly generated $x$ values, where each $x$ is a non-negative integer less than $N$, what is the probability that at least two of them are equal?

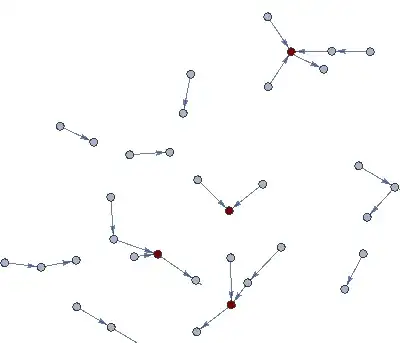

and depends only on $k=|S|$ and $N$, not on the hash function itself, so $P_c[H\circ H] = P_c[H]$...

This relies on the hypothesis of a "perfect hash function", but I want to measure the imperfections.

If there is no error in my interpretation, I need another definition of probability.
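To make the general definition concrete, here is a minimal Python sketch of the standard birthday-problem probability for $k$ uniform draws from $N$ possible values. It assumes the pictured formula is that standard expression; the function name `birthday_collision_probability` is only illustrative.

```python
import math

def birthday_collision_probability(k: int, n: int) -> float:
    """Probability that at least two of k uniform draws from n values are equal."""
    if k > n:
        return 1.0  # pigeonhole: more draws than possible values
    # log of P(all k draws distinct) = sum of log(1 - i/n) for i = 0..k-1
    log_all_distinct = sum(math.log1p(-i / n) for i in range(k))
    # 1 - exp(log_all_distinct), computed stably for tiny probabilities
    return -math.expm1(log_all_distinct)

# Classic check: 23 people, 365 birthdays
p = birthday_collision_probability(23, 365)
```

Note that the hash function does not appear anywhere in this computation: only $k$ and $N$ do, which is exactly why this definition cannot distinguish $H$ from $H\circ H$.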

Counting the collisions

Suppose a kind of "collision rate", $\frac{N_c}{|S|}$, based on the number of collisions $N_c$ that occurred with a specific sample.

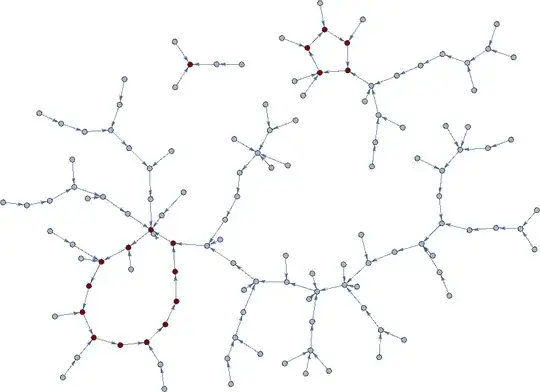

Now consider many different sample sets $S_i$, all with the same number of elements, $k=|S_1|=|S_2|=\dots=|S_i|$. Let $K$ be the set of all such $S_i$, and define $P_c[H](K)$ as the average of this collision rate over $K$.

This kind of probability seems better suited to express the problem.
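The averaged collision rate can be estimated empirically. The sketch below is only an illustration under loud assumptions: `h` is a toy hash (SHA-256 truncated to 2 bytes, so that collisions are actually observable), `hh` is $H\circ H$ applied to that toy digest, and $N_c$ is counted as the number of inputs minus the number of distinct digests. None of these choices comes from a standard; they are stand-ins for experimenting with the definition.

```python
import hashlib
import random

def h(data: bytes, digest_bytes: int = 2) -> bytes:
    """Toy H: SHA-256 truncated to digest_bytes bytes (assumption, for visibility)."""
    return hashlib.sha256(data).digest()[:digest_bytes]

def hh(data: bytes, digest_bytes: int = 2) -> bytes:
    """Toy H∘H: apply h to the (truncated) output of h."""
    return h(h(data, digest_bytes), digest_bytes)

def collision_rate(hash_fn, sample) -> float:
    """N_c / |S|, with N_c = |S| minus the number of distinct digests."""
    distinct_digests = {hash_fn(x) for x in sample}
    return (len(sample) - len(distinct_digests)) / len(sample)

def average_collision_rate(hash_fn, k: int, trials: int, seed: int = 0) -> float:
    """Average collision rate over `trials` sample sets of k distinct inputs."""
    rng = random.Random(seed)  # same seed gives the same sample sets for every hash_fn
    total = 0.0
    for _ in range(trials):
        # k distinct 32-bit integers, encoded as 8-byte inputs
        sample = [x.to_bytes(8, "big") for x in rng.sample(range(2**32), k)]
        total += collision_rate(hash_fn, sample)
    return total / trials

rate_single = average_collision_rate(h, k=500, trials=20)
rate_double = average_collision_rate(hh, k=500, trials=20)
```

Because both calls use the same seed, they see the same sample sets. On any fixed sample, every collision of $H$ is also a collision of $H\circ H$ (if $H(x)=H(y)$ then $H(H(x))=H(H(y))$), so under this counting `rate_double` can never be smaller than `rate_single`. How much larger it is depends on the digest size, and this toy truncation greatly exaggerates the effect compared with a full-length digest.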

... Bloom filter efficiency

Another option is a metric instead of a probability, perhaps using Bloom filter theory as a reference: a kind of efficiency benchmark of two Bloom filters, one using $H$ and the other using $H\circ H$.